Automate Health Care Information Processing With EMR Data Extraction - Our Workflow

We dive deep into the challenges we face in EMR data extraction and explain the pipelines, techniques, and models we use to solve them.

Understanding facial expressions is a key part of communication, and recent advances in artificial intelligence let us tap into it. Recognizing a user’s emotions can enable better automated service, market research, patient monitoring in healthcare, and the overall personalization of experience that customers value. Recent advances in Facial Expression Recognition (FER), driven primarily by deep learning, have made real-life use cases viable. We outline some of these techniques below, using automated market research as a running example.

Automatic facial expression recognition is a technology that allows a digitized system to interpret and analyze human facial expressions. It involves the use of algorithms and machine learning techniques to analyze and interpret the various facial features and gestures of a person, such as the shape of the mouth, the position of the eyebrows, and the movement of the eyes. The goal of facial expression recognition is to automatically detect and interpret the emotions and intentions of a person based on their facial expressions.

Facial expression recognition has advanced significantly in recent years thanks to newer deep learning techniques in computer vision, such as attention-based transformer networks and better video processing with CNN-BiLSTM architectures, which we outline below. Additionally, publicly released FER-related datasets such as CASIA-WebFace, FEEDB, and RaFD have enabled more research on the problem. These datasets consist of images or video frames labeled with the corresponding facial expressions, as well as encoded facial feature shapes, allowing algorithms to learn patterns and relationships in the data. While the first successes predate the rise of deep learning, its adoption has made FER systems accurate and robust enough for real-world use.

Facial expression recognition using AI can be incorporated into systems that require an accurate assessment of a person’s emotional state, which is not always possible to obtain by asking the person. People are often reluctant to reveal their emotional state, even though it guides their conscious and subconscious decision making.

At the same time, users are dissatisfied with rigid, unresponsive systems such as automated chatbot assistants that cannot recognize distress and nuance the way a human support specialist would. It is therefore fair to say that many instances of human-technology communication would benefit from detecting emotion through FER.

In our market research example, a large marketing agency wants to evaluate customer reactions to its new ad videos at scale and across different countries. Instead of manually showing individuals the ads and questioning them, the agency has a model developed that reads market research participants’ emotions from a webcam video feed. This lets it evaluate reactions automatically and at large scale, with participants all over the world using just their screens and webcams.

More potential use cases include:

Having established the importance of FER, we will go on to outline some current implementations that aim to accurately classify emotional states of people from facial image and video data. While some algorithms use additional sensors, like electrodes to detect facial muscle movements, we will not be covering these due to impracticality outside of lab settings. Rather, we will use the example of automating market research with FER described above to show how these techniques (or a combination of them) can allow for reliable insight into customers' emotions.

Let’s imagine we are curating a dataset of webcam videos of people reacting to advertisement videos and labeling the data with their perceived facial emotions. Our data will be broken down frame by frame with 30-60 images forming one second of video. While labeling the target data to train our algorithm on, we see that when the subject’s facial expression changes, there are moments between expressions that are ambiguous and can't really be attributed to an emotion, like the start of a smile or return to a neutral expression after surprise.
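To make the labeling problem concrete, here is a minimal sketch of one way to flag the frames around an expression change so they can be reviewed or excluded. The function name `mark_ambiguous`, the `margin` parameter, and the label strings are hypothetical choices for illustration, not part of any specific labeling tool.

```python
def mark_ambiguous(labels, margin=2):
    """Return a copy of per-frame labels in which frames within `margin`
    frames of a label change are replaced by the string 'ambiguous'.

    `labels` is a list with one emotion label per video frame.
    """
    marked = list(labels)
    for i in range(1, len(labels)):
        if labels[i] != labels[i - 1]:
            # A transition happens between frame i-1 and frame i.
            start = max(0, i - margin)
            end = min(len(labels), i + margin)
            for j in range(start, end):
                marked[j] = "ambiguous"
    return marked


# Ten frames: the subject goes from neutral to happy halfway through.
frames = ["neutral"] * 5 + ["happy"] * 5
marked_frames = mark_ambiguous(frames, margin=2)
print(marked_frames)
```

At 30 frames per second, a margin of a few frames covers only a fraction of a second, so the right value would depend on how quickly expressions change in the actual footage.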

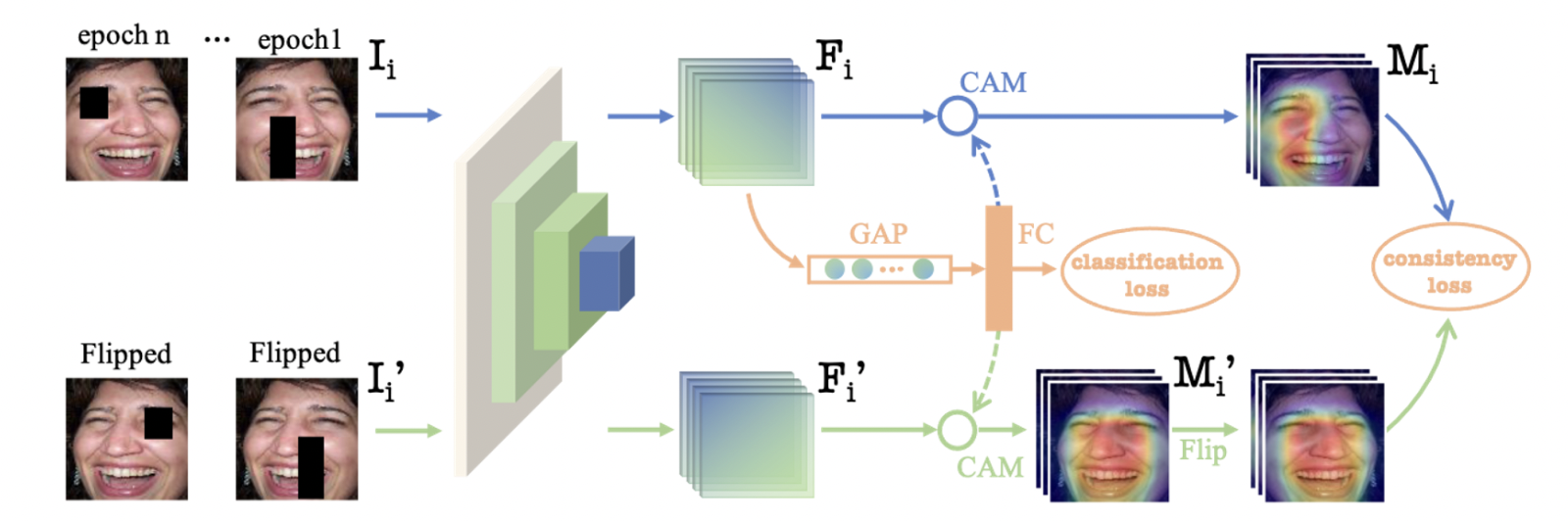

Displays of emotion can be subtle or obvious, with strong or weak visual cues, and emotional states can blend into each other or transition fluidly. This makes constructing a ground truth challenging: supervised machine learning relies on labeled target data, yet a video rarely has a single frame where an emotion appears on or disappears from an individual's face. The resulting labels are “noisy”, meaning imprecise, which presents a unique challenge for training the network. One way to address this is to combine an attention network with feature engineering to guide training on less informative labels. To understand this process, we must first examine the attention mechanism, which, along with convolutional neural networks (CNNs), is a current state-of-the-art technique for most visual AI models. The method also improves generalization by using random masking during training: some patches of the face are blacked out so that the network learns features related to the entire face, not just a single portion of it.
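The random masking step can be sketched in a few lines of NumPy. This is an illustrative version only: the function name `random_mask`, the patch size, and the number of patches are assumptions, and a real training pipeline would likely apply this inside its data augmentation stage.

```python
import numpy as np


def random_mask(image, patch_size=16, num_patches=3, rng=None):
    """Return a copy of `image` with `num_patches` square patches set to 0.

    `image` is an array of shape (height, width, channels). Patch positions
    are drawn uniformly at random, so patches may overlap.
    """
    rng = np.random.default_rng() if rng is None else rng
    masked = image.copy()
    height, width = image.shape[:2]
    for _ in range(num_patches):
        top = rng.integers(0, height - patch_size + 1)
        left = rng.integers(0, width - patch_size + 1)
        masked[top:top + patch_size, left:left + patch_size] = 0
    return masked


# A constant array stands in for a cropped face image.
face = np.ones((64, 64, 3), dtype=np.float32)
masked = random_mask(face, rng=np.random.default_rng(0))
```

Because different patches are hidden on every pass, the network cannot rely on a single region such as the mouth and has to pick up cues from the whole face.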

To cover a sufficient range of possible subjects, we need a dataset that is both large enough and diverse in the individuals it contains. Since the dataset needs to be labeled on a frame-by-frame basis, labeling is also quite laborious. Because the training dataset is the most cost-intensive part, we want to reduce its requirements and choose an architecture that learns much faster than a regular CNN architecture: the so-called self-attention network.

The self-attention mechanism is a model component that weights features as they are propagated through a network, allowing small but crucial details in the input data to have a larger impact on the overall result. This allows a model to attend to specific parts of an image or to capture long-range dependencies between image features. The self-attention mechanism outputs a map of weights that encodes the importance of specific sections of the data, such as a small patch of pixels in an image.
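As a rough illustration, scaled dot-product self-attention over a set of image patches can be written as follows. This is a minimal single-head sketch with random, untrained projection matrices; the names `self_attention`, `w_q`, `w_k`, and `w_v` and all the dimensions are assumptions made for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(patches, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    `patches` has shape (num_patches, features). Returns the attended
    features and the (num_patches, num_patches) weight map.
    """
    q = patches @ w_q  # queries
    k = patches @ w_k  # keys
    v = patches @ w_v  # values
    scores = q @ k.T / np.sqrt(k.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


rng = np.random.default_rng(0)
patches = rng.normal(size=(9, 32))  # 9 image patches, 32 features each
w_q, w_k, w_v = (rng.normal(size=(32, 16)) for _ in range(3))
output, weights = self_attention(patches, w_q, w_k, w_v)
```

Row `i` of `weights` describes how strongly patch `i` attends to every other patch, which is the kind of importance map the paragraph above refers to.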

Erasing Attention Consistency (EAC), the noisy-label method referred to above, computes this weight map for both an image and its mirrored copy. It uses inconsistencies between the two maps to identify and eliminate noisy samples during training, improving model performance down the line.
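The mirrored-image comparison can be sketched as a simple consistency measure between two attention maps. This only illustrates the general idea and is not the published EAC implementation; the function name `flip_consistency_loss` and the 7x7 map size are assumptions.

```python
import numpy as np


def flip_consistency_loss(attn_original, attn_mirrored):
    """Mean squared difference between the attention map of an image and
    the attention map of its mirrored copy, after flipping the latter back.

    Both inputs have shape (height, width). A value near 0 means the model
    attends to the same facial regions in both versions of the image.
    """
    realigned = attn_mirrored[..., ::-1]  # undo the horizontal flip
    return float(np.mean((attn_original - realigned) ** 2))


rng = np.random.default_rng(0)
attn = rng.random((7, 7))

# A perfectly consistent model: the mirrored map is the flipped original.
consistent = flip_consistency_loss(attn, attn[:, ::-1])

# An inconsistent model: the mirrored map is unrelated to the original.
inconsistent = flip_consistency_loss(attn, rng.random((7, 7)))
```

Samples with a high value would be candidates for the inconsistent feature weights mentioned above.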

While this technique provides state-of-the-art results for FER, and self-attention networks are generally one of the first tools to consider for a computer vision task because of their robust, high performance, it is important to note that this approach was developed and evaluated on an academic dataset in an academic setting. A real-life use case may not need such extensive processing and alteration of the training data. Depending on how the model output is interpreted and used in the final system, manual rules for system behavior or a well-chosen threshold for actions may also help address the issue of noisy labels.
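A threshold rule of this kind might look like the sketch below. The function name `decide_emotion`, the fallback label `"uncertain"`, and the default threshold of 0.6 are hypothetical; a suitable threshold would have to be tuned on validation data.

```python
def decide_emotion(probabilities, threshold=0.6):
    """Return the most likely emotion, or 'uncertain' when the model's
    confidence in it is below `threshold`.

    `probabilities` maps emotion labels to predicted probabilities.
    """
    label = max(probabilities, key=probabilities.get)
    if probabilities[label] < threshold:
        return "uncertain"
    return label


confident = decide_emotion({"happy": 0.8, "neutral": 0.2})
borderline = decide_emotion({"happy": 0.45, "neutral": 0.55})
print(confident, borderline)
```

Frames that fall back to the uncertain label can simply be ignored when aggregating reactions to an ad, so ambiguous transitions do not skew the result.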

Since we are working with video data from webcams instead of single images, our input has an inherent sequence: the frames always occur in a particular order. The emotional displays of our subjects are also not independent from frame to frame; a person will not switch between happy and angry from one moment to the next but will build up an emotion over time. It can therefore be useful not to consider each frame independently, but to feed the frames into a network architecture that takes previously seen inputs into account alongside the current input to increase accuracy.
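To see why neighboring frames carry useful information, consider a simple non-learned baseline: a majority vote over a sliding window of per-frame predictions. This is not the sequence model discussed in this article, only an illustration of the idea, and the function name `smooth_predictions` and the window size are assumptions.

```python
from collections import Counter


def smooth_predictions(labels, window=5):
    """Replace each per-frame label with the most common label in a
    window of `window` frames centered on it (shorter at the edges)."""
    half = window // 2
    smoothed = []
    for i in range(len(labels)):
        neighborhood = labels[max(0, i - half): i + half + 1]
        smoothed.append(Counter(neighborhood).most_common(1)[0][0])
    return smoothed


# A single-frame "angry" prediction in the middle of a smile is implausible.
raw = ["happy", "happy", "angry", "happy", "happy", "happy"]
smoothed = smooth_predictions(raw, window=3)
print(smoothed)
```

A learned sequence model goes further than this fixed rule because it can decide from the data how much weight past frames deserve.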

For video applications, a model architecture focused on temporal features can be used, as sequential frames of video and their differences can offer more information about a displayed emotion than a single image in isolation. Regular CNN architectures cannot make use of the order of the data passed into them, which has led to the design of specific architectures for sequential data. These architectures retain some data from previous inputs and are thus able to learn from data that has an inherent progression, such as video. A common technique is to combine a regular CNN architecture with a Long Short-Term Memory (LSTM) block that retains information across inputs.

![Combining a CNN architecture with an LSTM network can allow leveraging the information inherent to sequential data, such as the frames of a video.](https://assets-global.website-files.com/5fdc17d51dc102ed1cf87c05/63a4b5813e028af70f77418e_ktInYXVRmnaXMsKZZlIase7z7XadsKC_wVAs8LiMjyDVt1j7YLspF7UaeghKYk-5pgRw6OASsH3Yn2rL9Oc7j530TjJ4a-xpEEwz4LXOUkkHeHRGcZnVh2wobW-D_proEhddZEsBZEcVx0iLvulNZ3KTE3MPGkEw_fxrtt-1T4O7olAGsh9dkzoau2p44g.png)

An LSTM network consists of a series of LSTM cells, each of which is designed to remember and update information over a longer period of time than a traditional neural network. Each LSTM cell has three main components: an input gate, a forget gate, and an output gate. These gates control the flow of information into and out of the cell and allow the cell to selectively remember or forget information from previous time steps.

The input gate determines which information from the current time step is allowed to enter the cell and be stored in the cell's memory. The forget gate determines which information carried over from previous time steps should be discarded, or "forgotten." The output gate determines which information from the cell's memory should be output at the current time step. These gates are controlled by weights, which the network learns during training. The weights are adjusted to optimize the network's accuracy on FER.
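The three gates can be written out explicitly for a single time step. The sketch below uses the standard LSTM cell equations with random, untrained weights; the function name `lstm_step`, the stacked weight layout, and the feature sizes are assumptions for illustration, and in practice the per-frame inputs would be feature vectors produced by the CNN.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM time step.

    x: input features for the current frame, shape (input_size,)
    h_prev, c_prev: previous hidden and cell state, shape (hidden,)
    W: stacked gate weights, shape (4 * hidden, input_size + hidden)
    b: stacked gate biases, shape (4 * hidden,)
    """
    hidden = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:hidden])                # input gate
    f = sigmoid(z[hidden:2 * hidden])       # forget gate
    o = sigmoid(z[2 * hidden:3 * hidden])   # output gate
    g = np.tanh(z[3 * hidden:])             # candidate memory content
    c = f * c_prev + i * g                  # forget old, store new
    h = o * np.tanh(c)                      # expose part of the memory
    return h, c


rng = np.random.default_rng(0)
input_size, hidden_size = 8, 4
W = rng.normal(scale=0.1, size=(4 * hidden_size, input_size + hidden_size))
b = np.zeros(4 * hidden_size)
h = np.zeros(hidden_size)
c = np.zeros(hidden_size)

frame_features = rng.normal(size=(5, input_size))  # 5 frames of features
for x in frame_features:
    h, c = lstm_step(x, h, c, W, b)
```

After the loop, `h` summarizes all five frames and could be passed to a classification layer that predicts the emotion.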

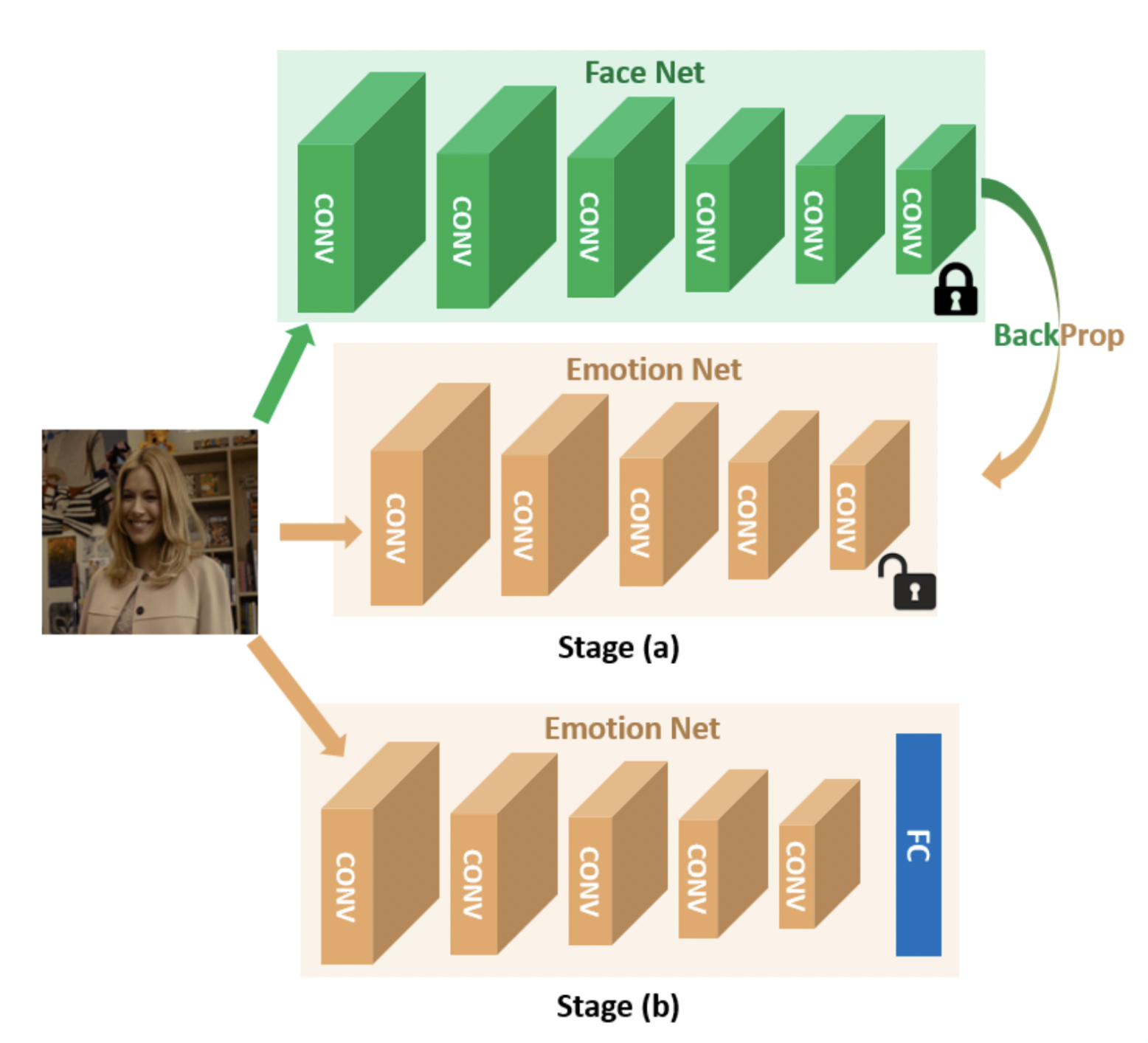

Still, training a network from scratch for a task as common as analyzing the faces of many individuals is hard, especially when diversity is required. We would benefit from a pre-trained network that is specifically trained to work on faces, saving us the trouble of covering a very wide range of individual appearances in our own dataset.

Models are commonly fine-tuned from prior training, which speeds up training and allows smaller datasets to achieve results comparable to larger, more elaborate deep learning training pipelines. With FER, we benefit from a very common and powerful pre-trained model being available: FaceNet, a neural network published by Google that is trained to recognize individuals across different images. The network is trained using triplet loss, a technique that minimizes the embedding distance between samples of the same class and maximizes the embedding distance between samples of different classes. Usually, models are trained with a cross-entropy loss function, which drives the model toward producing the correct labels without regard to how the model encodes the data. For some applications, however, it makes sense to shape the loss function so that it forces the network to embed data points in a certain relationship to each other.
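For a single triplet of embeddings, the triplet loss can be sketched as below. It uses squared Euclidean distances and a margin; the function name `triplet_loss`, the margin value, and the two-dimensional toy embeddings are illustrative assumptions.

```python
import numpy as np


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Triplet loss for one (anchor, positive, negative) triplet.

    The loss is 0 once the anchor is closer to the positive (same class)
    than to the negative (different class) by at least `margin`.
    """
    d_pos = np.sum((anchor - positive) ** 2)
    d_neg = np.sum((anchor - negative) ** 2)
    return float(max(d_pos - d_neg + margin, 0.0))


anchor = np.array([1.0, 0.0])
same_person = np.array([0.9, 0.1])
other_person = np.array([-1.0, 0.0])

good = triplet_loss(anchor, same_person, other_person)
bad = triplet_loss(anchor, other_person, same_person)  # roles swapped
print(good, bad)
```

The first triplet is already well separated and contributes no loss, while the swapped one is heavily penalized, which is what pushes embeddings of the same class together during training.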

Since the kernels of the pre-trained FaceNet are already attuned to facial features, the network can be trained faster toward another goal related to facial data. This allows for faster prototyping and for working with smaller datasets, the collection of which is often the most laborious step in designing a deep learning system. The network is also smaller than other common architectures, which lightens the hardware requirements.

Width.ai builds custom computer vision software like facial expression recognition for businesses and products. Schedule a call today and let’s talk about how we can get you going with custom FER software or any of our other popular CV models.