Image inpainting, the process of filling in missing or corrupted parts of images, is a challenging task in the field of computer vision. A recent development in this area is the Aggregated Contextual Transformations for High-Resolution Image Inpainting (AOT-GAN), a model that has shown promising results in handling large missing regions in high-resolution images.

Developed by Yanhong Zeng, Jianlong Fu, Hongyang Chao, and Baining Guo, AOT-GAN is a Generative Adversarial Network (GAN)-based model that improves both context reasoning and texture synthesis. The model and its applications are described in the authors' arXiv paper, and the code is available on GitHub for anyone who wants to explore it further.

AOT-GAN is a significant contribution to image inpainting. It tackles two problems that become acute when large regions of a high-resolution image are missing: inferring plausible missing content and synthesizing fine-grained texture. This blog post walks through the challenges AOT-GAN addresses, the solutions it proposes, its results, and how to use it.

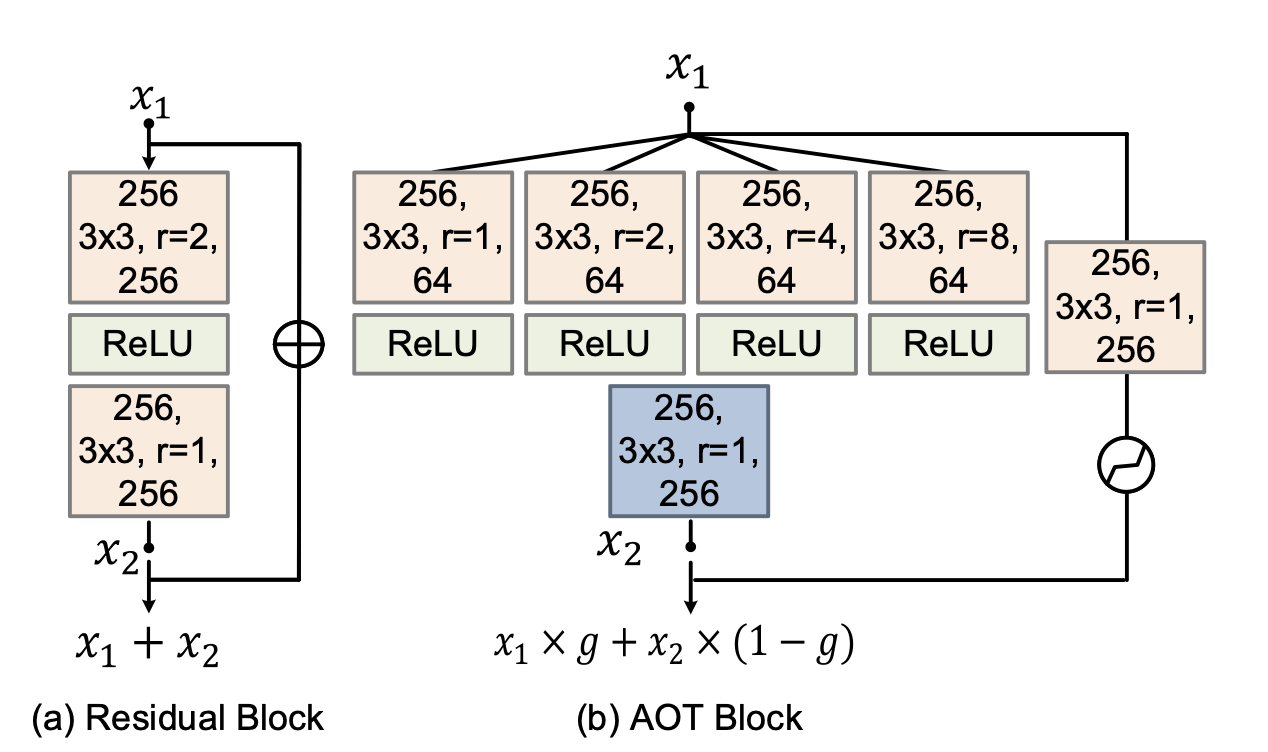

The AOT-GAN model introduces two key enhancements to address the challenges of high-resolution image inpainting: the AOT block in the generator and a soft mask-guided PatchGAN (SM-PatchGAN) discriminator.

The AOT block, used in the generator, aggregates contextual transformations with different receptive fields. Concretely, it splits a convolution into several parallel branches that use different dilation rates: small rates capture fine local patterns, while large rates reach distant parts of the image. The block then aggregates the branch outputs. This lets it capture both distant context and detailed patterns, which is crucial for inferring missing content because the model can draw on a wider range of information when deciding how to fill the missing region.
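To make the split, transform, and aggregate idea concrete, here is a minimal NumPy sketch of an AOT-style block. It is not the repository's PyTorch implementation. The dilation rates (1, 2, 4, 8), the equal channel split across branches, and the sigmoid-gated residual reflect our reading of the paper. We also omit any normalization the official code may apply before the gate. All function names are hypothetical.

```python
import numpy as np


def conv3x3(x, w, dilation=1):
    """'Same'-size dilated 3x3 convolution (cross-correlation, as in deep learning).

    x: input of shape (C_in, H, W); w: kernel of shape (C_out, C_in, 3, 3).
    Reflection padding keeps the output at H x W.
    """
    pad = dilation  # a dilated 3x3 kernel reaches `dilation` pixels past its centre
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), mode="reflect")
    _, h, wd = x.shape
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # shifted view of the padded input for kernel tap (i, j)
            patch = xp[:, i * dilation:i * dilation + h, j * dilation:j * dilation + wd]
            # mix input channels into output channels for this tap
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], patch)
    return out


def effective_kernel_size(dilation, k=3):
    """Spatial extent covered by one dilated k x k kernel."""
    return 1 + (k - 1) * dilation


def aot_block(x, branch_ws, fuse_w, gate_w, rates=(1, 2, 4, 8)):
    """Split -> transform (dilated convs) -> aggregate -> gated residual."""
    # 1) every branch sees the same input, each with a different receptive field
    branches = [np.maximum(conv3x3(x, w, r), 0.0) for w, r in zip(branch_ws, rates)]
    # 2) concatenate branch outputs along channels and fuse back to C channels
    fused = conv3x3(np.concatenate(branches, axis=0), fuse_w, dilation=1)
    # 3) a per-pixel gate in (0, 1) decides how much of x to replace
    gate = 1.0 / (1.0 + np.exp(-conv3x3(x, gate_w, dilation=1)))
    return x * (1.0 - gate) + fused * gate


# Usage with random (untrained) weights: each branch outputs C // 4 channels
rng = np.random.default_rng(0)
C, H, W = 8, 32, 32
x = rng.standard_normal((C, H, W))
branch_ws = [rng.standard_normal((C // 4, C, 3, 3)) * 0.1 for _ in range(4)]
fuse_w = rng.standard_normal((C, C, 3, 3)) * 0.1
gate_w = rng.standard_normal((C, C, 3, 3)) * 0.1
y = aot_block(x, branch_ws, fuse_w, gate_w)
print(y.shape)  # (8, 32, 32)
print([effective_kernel_size(r) for r in (1, 2, 4, 8)])  # [3, 5, 9, 17]
```

The receptive-field sizes show why mixing rates helps. A rate-1 branch looks at a 3x3 neighbourhood, while a rate-8 branch spans 17x17 pixels with the same number of weights.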

SM-PatchGAN (the soft mask-guided PatchGAN discriminator) improves discriminator training through a tailored mask-prediction task. Instead of producing a single real/fake score, the discriminator learns to predict which patches of an image were synthesized, using a soft (blurred) version of the inpainting mask as its target. A discriminator that localizes synthesized patches more precisely gives the generator a stronger training signal, which in turn helps the generator synthesize more realistic textures.
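The mask-prediction objective can be sketched as a least-squares loss against a soft target map. The sketch below follows our reading of the paper. For a real image, the discriminator should output 1 everywhere. For an inpainted image, its target is 1 minus a downsampled, Gaussian-blurred copy of the hole mask, so filled-in regions are pushed toward 0 with a soft boundary. The 4x downsampling factor, blur width, and function names are illustrative assumptions, not the repository's settings, and the official loss code may use a different label convention.

```python
import numpy as np


def gaussian_kernel1d(sigma, radius):
    """Normalized 1-D Gaussian kernel with 2 * radius + 1 taps."""
    xs = np.arange(-radius, radius + 1)
    k = np.exp(-(xs ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def soft_mask_target(mask, factor=4, sigma=2.0):
    """Soft per-patch target for an inpainted image.

    mask: (H, W) array, 1 = missing (inpainted) pixel, 0 = known pixel;
    H and W must be divisible by `factor`.
    Returns an (H // factor, W // factor) map in [0, 1]: ~1 = real, ~0 = synthesized.
    """
    h, w = mask.shape
    # average-pool to the (assumed) output resolution of a patch discriminator
    pooled = mask.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    k = gaussian_kernel1d(sigma, radius=int(3 * sigma))
    r = len(k) // 2
    padded = np.pad(pooled, r, mode="edge")
    # separable Gaussian blur: along each row, then along each column
    blurred = np.apply_along_axis(np.convolve, 1, padded, k, mode="valid")
    blurred = np.apply_along_axis(np.convolve, 0, blurred, k, mode="valid")
    return 1.0 - blurred


def discriminator_loss(d_fake, d_real, mask, factor=4, sigma=2.0):
    """Least-squares loss: real patches -> 1, inpainted patches -> soft target."""
    target = soft_mask_target(mask, factor, sigma)
    return float(np.mean((d_fake - target) ** 2) + np.mean((d_real - 1.0) ** 2))


# Usage: a 64x64 image with a 32x32 hole in the middle
mask = np.zeros((64, 64))
mask[16:48, 16:48] = 1.0
target = soft_mask_target(mask)
print(target.shape)  # (16, 16)
```

The blur is the "soft" part. Patches straddling the hole boundary get intermediate targets rather than a hard 0/1 label, which matches the fact that such patches mix real and synthesized pixels.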

These two enhancements make the AOT-GAN model a powerful tool for high-resolution image inpainting, capable of filling in large missing regions with realistic textures and accurate context.

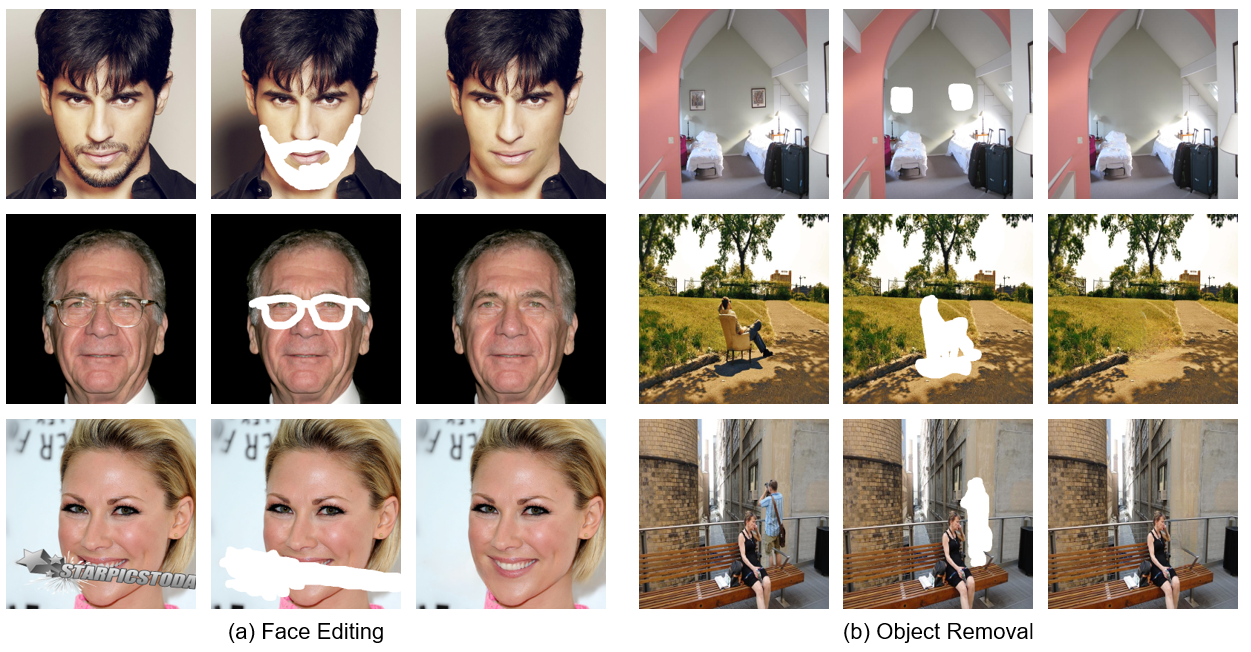

The effectiveness of the AOT-GAN model is best demonstrated through visual results. Here’s a look at the impressive capabilities of the AOT-GAN model in high-resolution image inpainting.

One of the images shows a face with a large missing region, which the AOT-GAN model successfully fills in with realistic textures and accurate context. Another image shows a logo with missing parts, which the model also successfully inpaints. These images demonstrate the AOT-GAN model's ability to handle large missing regions in high-resolution images, synthesizing fine-grained textures and inferring missing contents with high accuracy.

The results achieved by the AOT-GAN model are impressive, offering a promising solution to the challenges of high-resolution image inpainting.

The AOT-GAN model is implemented in PyTorch, a popular open-source machine learning library that provides the building blocks for defining and training neural networks. The repository lists Python 3.8.8 and PyTorch 1.8.1 as prerequisites.

One of the key features of PyTorch used in the AOT-GAN model is its support for tensor computations. Tensors, which are similar to arrays, are a fundamental data structure in machine learning. The AOT-GAN model uses tensors to represent images and masks, and performs computations on these tensors to train the model and generate inpainted images.
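The sketch below illustrates that representation, using NumPy arrays as stand-ins for PyTorch tensors (the element-wise operations read the same on `torch.Tensor`). It assumes a channel-first (C, H, W) image scaled to [-1, 1] and a single-channel mask in which 1 marks missing pixels. The [-1, 1] scaling and the constant fill value are common conventions we assume here, not details confirmed from the AOT-GAN code.

```python
import numpy as np


def to_model_input(image_u8, mask):
    """Turn an (H, W, 3) uint8 image and an (H, W) 0/1 mask into model arrays.

    Returns (image, mask, masked) with shapes (3, H, W), (1, H, W), (3, H, W).
    """
    # HWC uint8 in [0, 255] -> CHW float32 in [-1, 1]
    image = image_u8.astype(np.float32).transpose(2, 0, 1) / 127.5 - 1.0
    m = mask.astype(np.float32)[None]  # add a channel axis: (1, H, W)
    # erase the content inside the hole and fill it with a constant (1.0 here);
    # broadcasting expands the 1-channel mask across the 3 colour channels
    masked = image * (1.0 - m) + m
    return image, m, masked


# Usage: a 4x4 image, left half black and right half white, with a 2x2 hole
img = np.full((4, 4, 3), 255, dtype=np.uint8)
img[:, :2] = 0
hole = np.zeros((4, 4))
hole[1:3, 1:3] = 1
image, m, masked = to_model_input(img, hole)
print(image.shape, m.shape, masked.shape)  # (3, 4, 4) (1, 4, 4) (3, 4, 4)
```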

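Once your system meets the prerequisites, you can install AOT-GAN. First, clone the GitHub repository and change into it. The command below uses the SSH URL, which requires an SSH key registered with GitHub. If you have not set one up, clone `https://github.com/researchmm/AOT-GAN-for-Inpainting.git` over HTTPS instead: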

```
git clone git@github.com:researchmm/AOT-GAN-for-Inpainting.git
cd AOT-GAN-for-Inpainting
```

After cloning the repository, create a Conda environment from the provided `environment.yml` file, which installs the Python packages the code depends on, and then activate it:

```
conda env create -f environment.yml
conda activate inpainting
```
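Once the environment is active, a quick sanity check can confirm whether the interpreter and PyTorch versions match the prerequisites listed in the repository (Python 3.8.8, PyTorch 1.8.1) and whether a CUDA device is visible. This is a hypothetical helper script (call it `check_env.py`), not part of the repository. Newer versions may still work, so it only warns.

```python
import importlib
import sys

EXPECTED_PYTHON = (3, 8)  # the repository lists Python 3.8.8
EXPECTED_TORCH = "1.8.1"  # the repository lists PyTorch 1.8.1


def version_tuple(text):
    """'1.8.1+cu111' -> (1, 8, 1); drops local build suffixes after '+'."""
    core = text.split("+")[0]
    return tuple(int(part) for part in core.split(".") if part.isdigit())


def check_environment():
    """Return a list of human-readable findings about the active environment."""
    messages = []
    if sys.version_info[:2] != EXPECTED_PYTHON:
        messages.append(f"warning: Python {sys.version.split()[0]}, expected 3.8.x")
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        messages.append("error: PyTorch is not installed in this environment")
        return messages
    if version_tuple(torch.__version__)[:3] != version_tuple(EXPECTED_TORCH):
        messages.append(f"warning: PyTorch {torch.__version__}, expected {EXPECTED_TORCH}")
    messages.append(f"CUDA available: {torch.cuda.is_available()}")
    return messages


if __name__ == "__main__":
    for line in check_environment():
        print(line)
```

Run it with `python check_env.py` inside the activated `inpainting` environment.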

With these steps, the AOT-GAN model should be successfully installed on your system, ready for use.

Training AOT-GAN requires two datasets: a set of images and a set of masks that mark the regions to be filled in. Download links for both are provided in the GitHub repository. Once the datasets are downloaded, pass their locations to the training script with the `--dir_image` and `--dir_mask` options.

For example, if your images are in a folder named `images` and your masks are in a folder named `masks`, both at the repository root, you can run the training script from `src/` (so the paths are relative to it) as follows:

```
cd src
python train.py --dir_image ../images --dir_mask ../masks
```
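Before launching a long training run, it can help to confirm that both folders actually contain image files. The helper below is a hypothetical pre-flight check, not part of the repository. The accepted extensions are an assumption. It searches subfolders too, and it does not check how the training script pairs or samples masks.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your data.
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def count_images(folder):
    """Count image files (matched by extension) anywhere under `folder`."""
    root = Path(folder)
    if not root.is_dir():
        raise FileNotFoundError(f"not a directory: {root}")
    return sum(
        1 for p in root.rglob("*") if p.is_file() and p.suffix.lower() in IMAGE_EXTS
    )


def preflight(dir_image, dir_mask):
    """Hypothetical pre-training check: both folders must contain images."""
    n_images, n_masks = count_images(dir_image), count_images(dir_mask)
    if n_images == 0 or n_masks == 0:
        raise ValueError(f"found {n_images} images and {n_masks} masks; both must be > 0")
    return n_images, n_masks
```

For example, `preflight("images", "masks")` run from the repository root returns the two file counts, or raises an error that names the empty or missing folder.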

Understanding and using the datasets correctly is crucial for the successful training of the AOT-GAN model.

Once the AOT-GAN model is installed and the datasets are ready, you can start using the model. The first step is to train the model. This can be done using the following commands:

```
cd src
python train.py
```

If you need to resume training at any point, you can do so using the following command:

```
cd src
python train.py --resume
```
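Resuming generally means reloading the most recent saved checkpoint. Suppose checkpoints are named with an iteration number, for example `G10000.pt` (a hypothetical naming scheme; check what your run actually writes). A plain lexical sort can then pick the wrong file once the numbers have different widths, because `G9000.pt` sorts after `G10000.pt`. The helper below is illustrative rather than the repository's resume logic. It picks the file with the largest numeric iteration instead.

```python
import re
from pathlib import Path


def latest_checkpoint(save_dir, prefix="G", suffix=".pt"):
    """Return the checkpoint path with the highest iteration number, or None.

    Matches names like '<prefix><digits><suffix>' (hypothetical scheme) and
    compares the digits as integers rather than as strings.
    """
    pattern = re.compile(rf"^{re.escape(prefix)}(\d+){re.escape(suffix)}$")
    best, best_iter = None, -1
    for path in Path(save_dir).iterdir():
        match = pattern.match(path.name)
        if match and int(match.group(1)) > best_iter:
            best, best_iter = path, int(match.group(1))
    return best
```

Comparing iterations as integers keeps the choice correct no matter how many digits the iteration counter has grown to.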

After training the model, you can test it using the following command:

```
cd src
python test.py --pre_train [path to pretrained model]
```
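A common post-processing step in inpainting pipelines is to keep the known pixels from the input and take only the hole pixels from the network output, so the model cannot alter regions that were never missing. The sketch below shows that composition with NumPy, plus the conversion back to an 8-bit image. We assume, without having confirmed it in the repository's test script, that a similar step is applied. The 1-means-missing mask convention and the [-1, 1] value range are also assumptions.

```python
import numpy as np


def composite(original, predicted, mask):
    """Blend the prediction into the original image.

    original, predicted: (C, H, W) arrays; mask: (1, H, W), 1 = missing pixel.
    Known pixels come from `original`, hole pixels from `predicted`.
    """
    return predicted * mask + original * (1.0 - mask)


def to_uint8(image):
    """Map a (C, H, W) array in [-1, 1] back to an (H, W, C) uint8 image."""
    scaled = (np.clip(image, -1.0, 1.0) + 1.0) * 127.5
    return np.rint(scaled).astype(np.uint8).transpose(1, 2, 0)
```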

Finally, you can evaluate the model using the following command:

```
cd src
python eval.py --real_dir [ground truths] --fake_dir [inpainting results] --metric mae psnr ssim fid
```

These steps should guide you through the process of getting started with the AOT-GAN model, from training to evaluation.

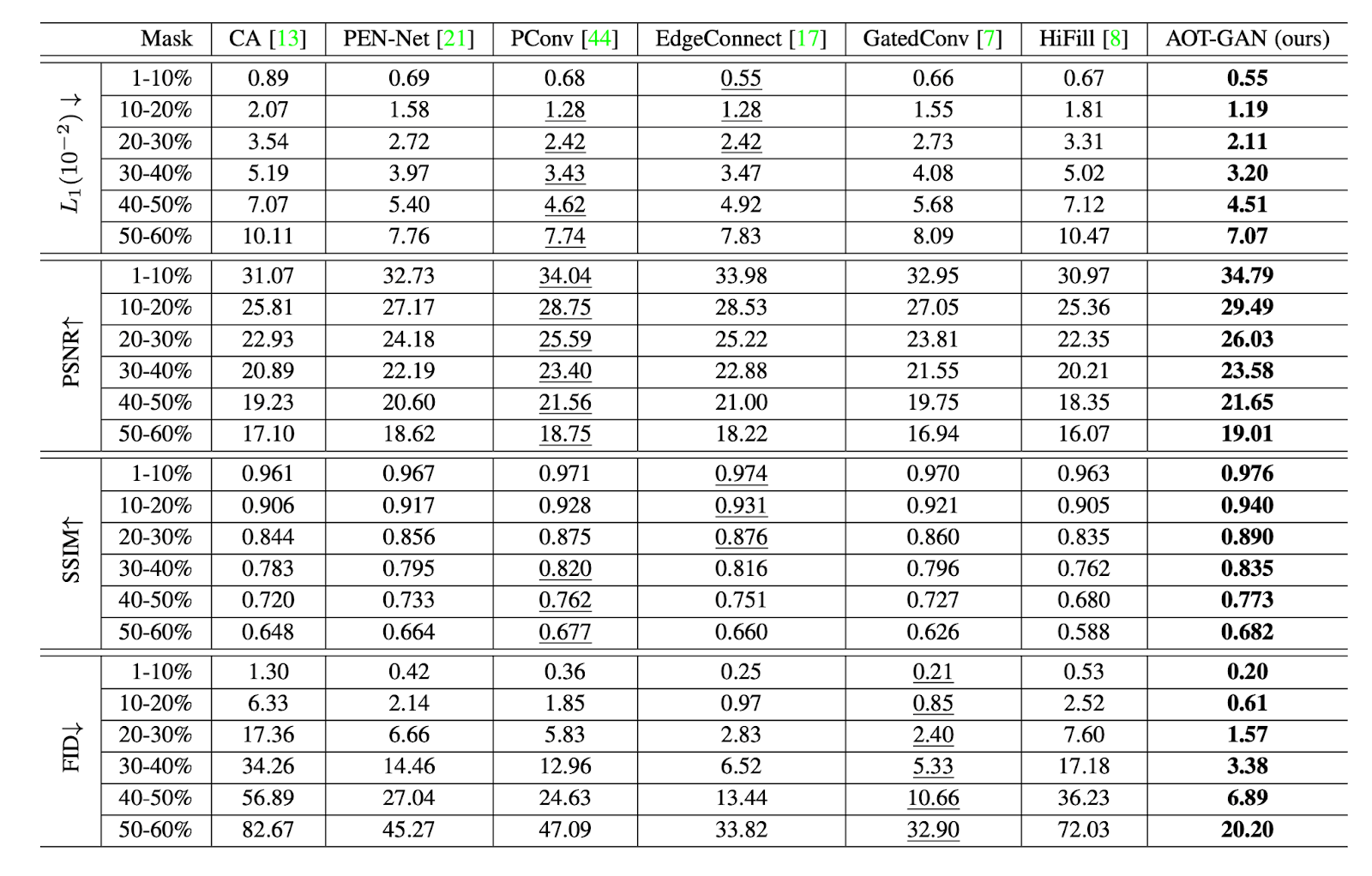

The AOT-GAN model is evaluated with four metrics: Mean Absolute Error (MAE), Peak Signal-to-Noise Ratio (PSNR), Structural Similarity Index Measure (SSIM), and Fréchet Inception Distance (FID). Together they quantify how well the model inpaints images. Lower is better for MAE and FID, and higher is better for PSNR and SSIM.

MAE measures the average absolute pixel difference between the inpainted image and the original. PSNR is the ratio between the maximum possible power of a signal and the power of the corrupting noise. It is expressed in decibels and computed from the mean squared error between the two images. SSIM compares two images in terms of luminance, contrast, and structure. FID measures the distance between the distributions of Inception-network features extracted from real and generated images.
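These definitions translate into a few lines of NumPy. This is a simplified sketch for building intuition, not the repository's `eval.py`. SSIM here is computed over the whole image as a single window, whereas standard SSIM averages over a sliding Gaussian window, so the values will differ. FID assumes you have already extracted feature vectors for real and generated images with an Inception-v3 network, a step omitted here. Images are assumed to be float arrays in [0, `data_range`].

```python
import numpy as np


def mae(x, y):
    """Mean absolute error: lower is better."""
    return float(np.mean(np.abs(x - y)))


def psnr(x, y, data_range=1.0):
    """Peak signal-to-noise ratio in dB: higher is better."""
    mse = np.mean((x - y) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / mse))


def ssim_global(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (simplified); 1.0 = identical."""
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    return float(num / den)


def fid_from_features(feat_real, feat_fake):
    """Frechet distance between Gaussians fitted to two (N, D) feature sets.

    FID = ||mu_r - mu_f||^2 + Tr(S_r + S_f - 2 (S_r S_f)^(1/2)).
    Tr((S_r S_f)^(1/2)) equals the sum of square roots of the eigenvalues of
    S_r S_f, which are real and non-negative for covariance matrices.
    """
    mu_r, mu_f = feat_real.mean(axis=0), feat_fake.mean(axis=0)
    s_r = np.cov(feat_real, rowvar=False)
    s_f = np.cov(feat_fake, rowvar=False)
    eig = np.linalg.eigvals(s_r @ s_f)
    tr_sqrt = np.sum(np.sqrt(np.clip(eig.real, 0.0, None)))
    return float(np.sum((mu_r - mu_f) ** 2) + np.trace(s_r) + np.trace(s_f) - 2.0 * tr_sqrt)
```

As a sanity check, two images that differ by 0.1 everywhere (with `data_range=1.0`) have an MSE of 0.01 and therefore a PSNR of 10 log10(1 / 0.01) = 20 dB.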

Understanding these metrics is crucial for interpreting the results of the model and assessing its performance.

The repository provides pretrained models for the CelebA-HQ and Places2 datasets, which let you skip training and get started more quickly. Download them from the links in the GitHub repository and put the model directories under the `experiments/` directory.

Using the pretrained models can be a great way to explore the capabilities of the AOT-GAN model without having to train the model from scratch.

The repository also includes an interactive demo for exploring the model hands-on. To run it, first download the pretrained model parameters and put them under the `experiments/` directory.

You can then run the demo using the following command:

```
cd src
python demo.py --dir_image [folder to images] --pre_train [path to pre_trained model] --painter [bbox|freeform]
```

During the demo, you can press '+' or '-' to control the thickness of the painter. You can also press 'r' to reset the mask, 'k' to keep existing modifications, 's' to save results, and space to perform inpainting.
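The two painter modes correspond to two ways of drawing a mask. The sketch below is a hypothetical NumPy illustration of the idea, not the demo's own drawing code. `bbox_mask` fills a rectangle. `freeform_mask` stamps discs along a mouse path, and its `thickness` parameter plays the role of the brush size that '+' and '-' adjust.

```python
import numpy as np


def bbox_mask(h, w, x0, y0, x1, y1):
    """Rectangle mask: 1 inside rows [y0, y1) and columns [x0, x1), 0 elsewhere."""
    mask = np.zeros((h, w), dtype=np.float32)
    mask[y0:y1, x0:x1] = 1.0
    return mask


def freeform_mask(h, w, points, thickness):
    """Brush-stroke mask from a polyline of (x, y) points.

    Discs of diameter `thickness` are stamped at roughly one-pixel steps
    along each segment, which approximates a thick line.
    """
    mask = np.zeros((h, w), dtype=np.float32)
    yy, xx = np.mgrid[0:h, 0:w]
    radius_sq = (thickness / 2.0) ** 2
    for (xa, ya), (xb, yb) in zip(points[:-1], points[1:]):
        steps = int(max(abs(xb - xa), abs(yb - ya))) + 1
        for t in np.linspace(0.0, 1.0, steps):
            cx, cy = xa + t * (xb - xa), ya + t * (yb - ya)
            mask[(xx - cx) ** 2 + (yy - cy) ** 2 <= radius_sq] = 1.0
    return mask
```

A bounding box suits removing a whole object, while free-form strokes suit thin or irregular regions such as scratches or overlaid text.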

Exploring the AOT-GAN demo can be a great way to understand the capabilities of the model and see it in action.

TensorBoard is a tool for providing the measurements and visualizations needed during the machine learning workflow. It allows you to track and visualize metrics such as loss and accuracy, visualize the model graph, view histograms, and more.

The AOT-GAN model supports visualization on TensorBoard for training. To run TensorBoard, you can use the following command:

```
tensorboard --logdir [log_folder] --bind_all
```

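Then open TensorBoard in your browser (it serves on port 6006 by default, for example http://localhost:6006) to follow the training progress. The `--bind_all` flag makes TensorBoard reachable from other machines, which is useful when training on a remote server. Visualizing training with TensorBoard is a good way to understand how the model is learning and improving over time.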

The AOT-GAN model represents a significant advancement in the field of high-resolution image inpainting. However, there is always room for improvement and further development. Future work could focus on improving the model's performance on specific types of images, or on developing new enhancements to address other challenges in image inpainting.

In terms of applications, the AOT-GAN model has potential uses in a wide range of fields. The key use cases we’ve seen it used for are:

The AOT-GAN project would like to acknowledge the contributions of the edge-connect and EDSR_PyTorch projects. These projects have made significant contributions to the field of image inpainting and have been instrumental in the development of the AOT-GAN model.

Width.ai has built many inpainting architectures for a wide range of use cases. We fine-tune these models on customer-specific datasets to create high-accuracy, production-ready systems. Contact us today to talk to a computer vision consultant.