Crowd surveillance and law enforcement are the stereotypical uses cited for activity recognition. In reality, it’s useful in many other verticals and businesses. For example, you can use it to analyze customer behavior in retail, improve training in sports, deliver better primary health care, and more.

In this article, you’ll learn what human activity recognition means, how it works, and how it’s being implemented in different industries using the latest advances in artificial intelligence.

Human activity recognition (HAR) is a machine learning task to identify what a person is doing. Traditionally, it’s a classification task that produces a fixed label like “person running” or “person driving” (one popular HAR dataset even includes “using inhaler” and “milking goat”). However, thanks to recent advances in large language models, HAR is increasingly moving toward producing rich natural language descriptions.

HAR can be vision-based, using images, videos, or camera feeds, or sensor-based, using data from smartphone or wearable sensors.

We’ll focus on vision-based HAR in videos and camera feeds, and explain how it works in the next section.

HAR is most useful when it’s capable of semantic, fine-grained, context-aware understanding of the actions in a video. For example, the latest HAR techniques can produce rich descriptions like “women players play tennis on a green lawn at Wimbledon while spectators watch.” In contrast, older HAR techniques produce less useful, literal labels like “humans running; swinging hands.”

We give high-level overviews of these latest approaches here and explore them in depth in later sections.

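Visual language models extract three different types of data from videos (spatial features within each frame, temporal features that capture how frames change over time, and textual features from the language associated with the video) and learn to associate them with each other.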

By learning their associations, these models can recognize and describe human actions using rich descriptive language instead of a predetermined fixed set of labels. They are also called language-image or visual-textual models and are at the forefront of recent HAR research.

Some tasks expect concise labels and don’t need the rich descriptions that visual language models generate. For example, automated workflows that trigger when some action is recognized are often more reliable when working with a fixed set of concise labels.

For such tasks, spatio-temporal models are a better fit. Like visual language models, they learn to associate visual and temporal features, but they don’t learn from rich textual descriptions.

Next, we’ll explore some HAR use cases and their models in depth.

Video search enables users to enter text queries and find videos whose visual content semantically matches those queries. For example, an oil rig worker can search for training videos with specific queries like, “What should I do if circulation is lost while drilling?” The search engine then looks for videos where the scenes — not just text descriptions — demonstrate what activities a worker is supposed to do during lost circulation events.
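At its core, this kind of search compares an embedding of the query with precomputed embeddings of each video and returns the closest matches. The sketch below assumes some trained text and video encoders (not shown) have already produced fixed-length vectors; the video IDs and vector values are made up for illustration, and only the ranking step is shown.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_videos(query_emb: list[float],
                video_embs: dict[str, list[float]],
                top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k (video_id, score) pairs, most similar first."""
    scored = [(vid, cosine_similarity(query_emb, emb))
              for vid, emb in video_embs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical embeddings; a real system would compute these with trained encoders.
videos = {
    "lost_circulation_response": [0.9, 0.1, 0.0],
    "pipe_handling_basics": [0.2, 0.8, 0.1],
    "site_safety_briefing": [0.1, 0.2, 0.9],
}
query = [0.85, 0.15, 0.05]  # stand-in embedding of the lost-circulation question
results = rank_videos(query, videos, top_k=2)
print(results[0][0])  # lost_circulation_response
```

Because the video embeddings can be computed once and stored, each query only needs one text encoding plus a similarity search, which is what makes this approach practical for large video libraries.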

We explore some deep learning models that help your business implement such semantic search in videos.

In this section, we explore the techniques used in the X-CLIP paper. But to understand them, you should know about the two earlier transformer models they build on: CLIP and Florence.

Contrastive language-image pre-training (CLIP) learns to associate visual concepts in images with their text descriptions. A key idea of its approach is contrastive learning: matching image-text pairs are pulled close together in a shared embedding space, while mismatched pairs are pushed apart. So given a new photo of a dog, CLIP embeds the photo and compares it with the embeddings of candidate text descriptions such as “a photo of a dog” or “a photo of a cat”; the most similar description becomes the label. This lets CLIP label images with categories it was never explicitly trained to classify.
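The two ideas in the CLIP paragraph, the contrastive training objective and label-by-similarity at inference time, can be sketched in a few lines. This is a minimal illustration assuming the image and text embeddings already exist; the tiny 2D vectors are made up, and the temperature is fixed here for simplicity, whereas CLIP learns it during training.

```python
import math


def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cross_entropy(logits: list[float], target: int) -> float:
    """Numerically stable -log(softmax(logits)[target])."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_style_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss: text i is the correct match for image i."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] = similarity of image i and text j, sharpened by the temperature
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    image_to_text = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    text_to_image = sum(cross_entropy([logits[i][j] for i in range(n)], j)
                        for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


def zero_shot_label(image_emb, label_embs):
    """Pick the text description whose embedding is closest to the image embedding."""
    img = normalize(image_emb)
    return max(label_embs,
               key=lambda label: sum(a * b for a, b in zip(img, normalize(label_embs[label]))))


images = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[0.9, 0.1], [0.1, 0.9]]
swapped_texts = [[0.1, 0.9], [0.9, 0.1]]
print(clip_style_loss(images, matched_texts) < clip_style_loss(images, swapped_texts))  # True
labels = {"a photo of a dog": [1.0, 0.0], "a photo of a cat": [0.0, 1.0]}
print(zero_shot_label([0.8, 0.2], labels))  # a photo of a dog
```

The loss is low when each image is most similar to its own caption, which is exactly the geometry the zero-shot step then relies on.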

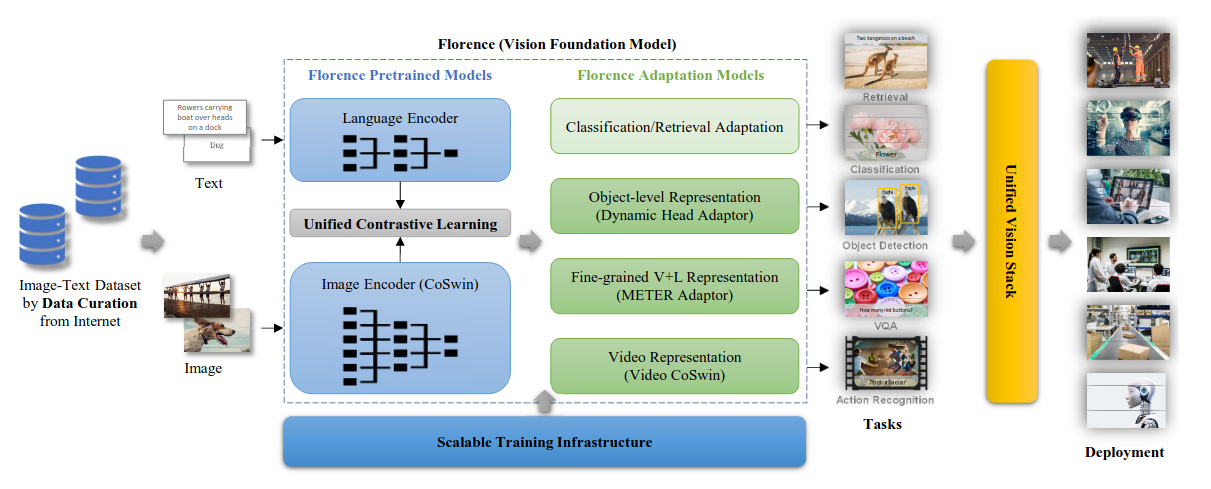

Florence extends that approach to videos to create a visual language model that can be fine-tuned for action recognition (and other vision tasks) in videos.

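The X-CLIP paper introduces two such models, X-CLIP and X-Florence, which enhance CLIP and Florence with two innovations: cross-frame communication, which captures relationships between video frames, and video-specific prompting, which adapts text representations to each video’s visual content.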

We explore these innovations next.

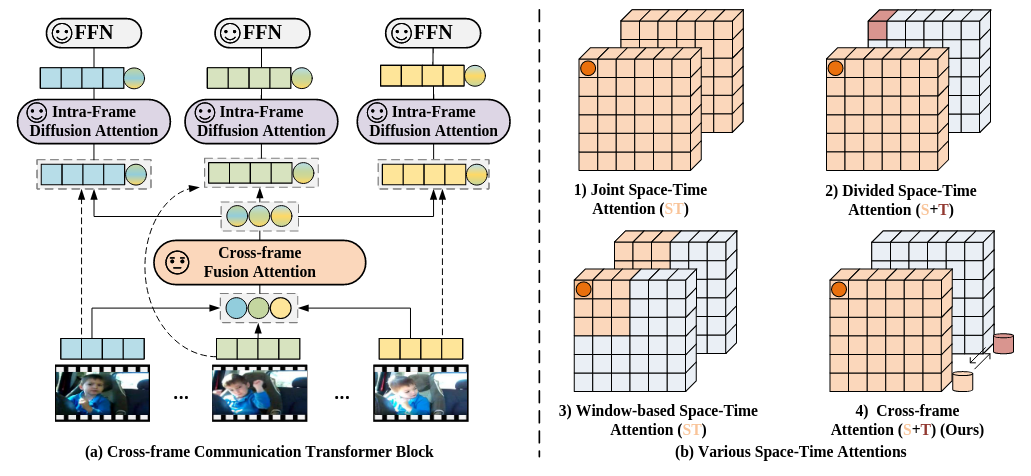

Transformers are well suited to video because attention lets them exploit long-range dependencies in any kind of data. For videos, that means associations between visual and temporal features across multiple frames that are not necessarily adjacent. For instance, frame-level HAR can identify that a person is running in multiple frames but can’t say anything about their pace. Cross-frame associations, however, can help a video search engine differentiate between a trot and a sprint.
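A toy calculation shows why cross-frame information matters. A per-frame classifier sees only a running pose, but comparing a runner’s position in two frames that are far apart gives the pace. The sketch assumes positions have already been converted to meters along the running direction and uses a hypothetical 5 m/s threshold; a transformer learns such relationships from data rather than from a hand-set rule.

```python
def estimate_speed(positions_m: list[float], fps: float) -> float:
    """Average speed (m/s) between the first and last frame of a clip."""
    if len(positions_m) < 2:
        raise ValueError("need at least two frames to measure pace")
    elapsed_s = (len(positions_m) - 1) / fps
    return abs(positions_m[-1] - positions_m[0]) / elapsed_s


def classify_pace(positions_m: list[float], fps: float,
                  sprint_threshold: float = 5.0) -> str:
    """Hypothetical rule: at or above the threshold counts as a sprint."""
    return "sprint" if estimate_speed(positions_m, fps) >= sprint_threshold else "trot"


# Six frames at 30 fps; every single frame would just say "running".
jog = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5]      # 0.5 m in 1/6 s, about 3 m/s
sprint = [0.0, 0.3, 0.6, 0.9, 1.2, 1.5]   # 1.5 m in 1/6 s, about 9 m/s
print(classify_pace(jog, fps=30), classify_pace(sprint, fps=30))  # trot sprint
```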

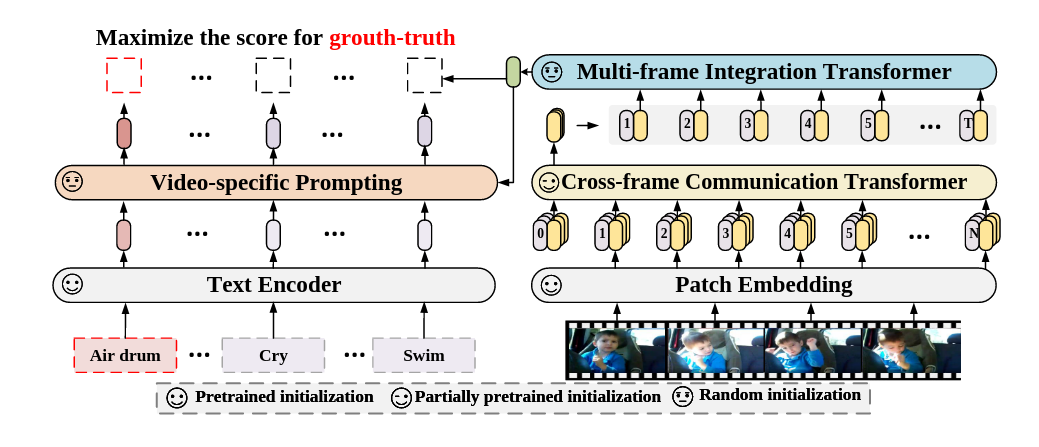

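The X-encoder exploits cross-frame associations using two transformer networks: a cross-frame communication transformer, in which a message token summarizing each frame exchanges information with the message tokens of the other frames, and a multi-frame integration transformer, which combines the resulting frame-level features into a single video-level representation.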

In this way, the X-encoder generates video embeddings that encode a video’s characteristic spatial and temporal features, which are aligned with the textual features produced by a text encoder. These embeddings are then used for action recognition through fine-tuning, as explained later.
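The X-CLIP paper calls its two networks the cross-frame communication transformer and the multi-frame integration transformer. The sketch below is a heavily simplified illustration of their data flow, not the paper’s implementation: it uses single-head scaled dot-product attention with identity projections (no learned weights), treats each input vector as a frame’s message token or frame feature, and mean-pools at the end. In the real model, the exchanged message tokens are also fed back into each frame’s own patch-level attention.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product self-attention with identity projections."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        out.append([sum(w * v[i] for w, v in zip(weights, tokens)) for i in range(d)])
    return out


def cross_frame_communication(message_tokens: list[list[float]]) -> list[list[float]]:
    """Each frame's message token attends to all frames' tokens (with a residual add)."""
    attended = self_attention(message_tokens)
    return [[m + a for m, a in zip(msg, att)] for msg, att in zip(message_tokens, attended)]


def integrate_frames(frame_features: list[list[float]]) -> list[float]:
    """Mix frame features with self-attention, then mean-pool into one video embedding."""
    mixed = self_attention(frame_features)
    n = len(mixed)
    return [sum(f[i] for f in mixed) / n for i in range(len(mixed[0]))]


frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # made-up per-frame vectors
messages = cross_frame_communication(frames)
video_embedding = integrate_frames(messages)
print(len(messages), len(video_embedding))  # 3 2
```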

The drawback of classification datasets is that their classes are often a limited set of simple terms like “swimming” or “running.” Plus, multiple videos share the same simple class though they may differ greatly in their content. So given a complex search query, how can a video search engine find the exact videos whose visual content matches that query?

This is the second problem that X-CLIP addresses. Instead of relying on a fixed class name, it adapts the textual representation for each video to that video’s visual content.

It works like this: During training, the text encoder generates text embeddings from the text associated with a video, while the X-encoder generates video embeddings that encode spatial and temporal features. A video-specific prompting block then uses cross-attention so that the text embeddings can attend to the video’s frame features, producing text representations tailored to that video. Training aligns these video-conditioned text embeddings with the matching video embeddings. Given a new video, the model can therefore match it against rich, descriptive text rather than a handful of simple class names.
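The prompting step can be sketched as a single cross-attention read: the text embedding acts as the query, the video’s frame features act as keys and values, and the attended result is added back to the text embedding. This is a minimal sketch with no learned projections; `alpha` is a hypothetical scaling factor that keeps the original text meaning dominant, and the vectors are made up.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def video_specific_prompt(text_emb: list[float],
                          frame_feats: list[list[float]],
                          alpha: float = 0.1) -> list[float]:
    """Condition a text embedding on one video's frame features (simplified)."""
    d = len(text_emb)
    # Cross-attention: text is the query; frame features are keys and values.
    scores = [sum(t * f for t, f in zip(text_emb, frame)) / math.sqrt(d)
              for frame in frame_feats]
    weights = softmax(scores)
    prompt = [sum(w * frame[i] for w, frame in zip(weights, frame_feats))
              for i in range(d)]
    # Residual update: original text embedding plus a scaled, video-derived prompt.
    return [t + alpha * p for t, p in zip(text_emb, prompt)]


text = [0.6, 0.2, 0.2]                       # stand-in for an embedded class name
frames = [[0.9, 0.1, 0.0], [0.7, 0.3, 0.1]]  # stand-in frame features of one video
print(video_specific_prompt(text, frames))
```

The same class name therefore ends up with a slightly different embedding for every video, which is what lets the model distinguish videos that share a label.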

These richer text-video associations enable more capable HAR models that work with detailed activity descriptions instead of simple labels.

For action recognition that generates rich descriptions, a transformer decoder is attached to generate text sequences from the video encoder’s embeddings.

For action recognition that generates simple labels, a linear classification layer with a softmax is attached after the video encoder.
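A label-only head of this kind is small: one weight row per activity label, followed by a softmax that turns the scores into probabilities. The sketch assumes a video embedding is already available; the weights, biases, and labels are hypothetical and would normally be learned during fine-tuning.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(video_emb: list[float], weights: list[list[float]],
             biases: list[float], labels: list[str]) -> tuple[str, list[float]]:
    """Linear layer (one row of weights per label) followed by softmax."""
    logits = [sum(w * x for w, x in zip(row, video_emb)) + b
              for row, b in zip(weights, biases)]
    probs = softmax(logits)
    best = max(range(len(labels)), key=probs.__getitem__)
    return labels[best], probs


label, probs = classify([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0],
                        ["swimming", "running"])
print(label)  # swimming
```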

These combined models are fine-tuned end-to-end for the downstream task of action recognition using datasets like HMDB51, Kinetics-600, and UCF101.

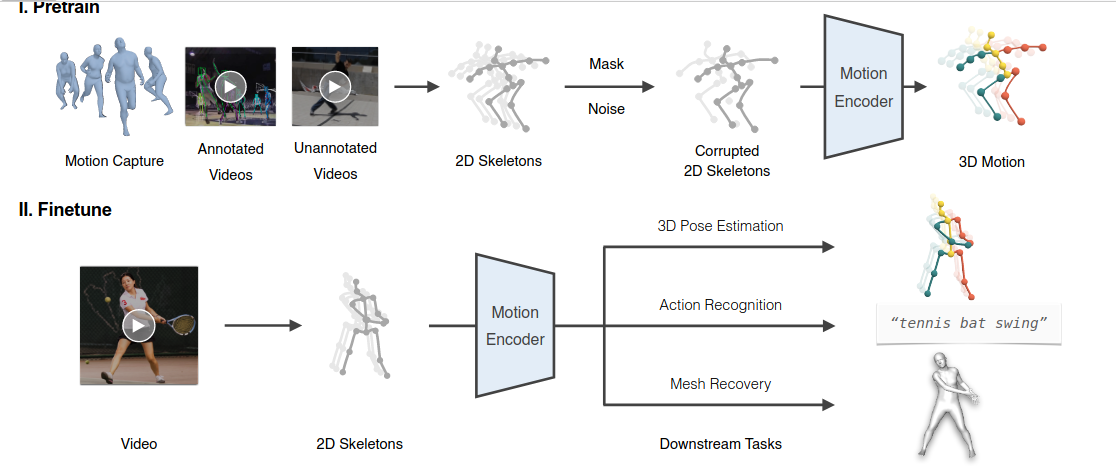

Recent spatio-temporal models like MotionBERT and UniFormer are transformer models that combine spatial and temporal features just like visual language models. But they don’t learn any text features during pre-training; the only text involved is the activity labels used when fine-tuning for action recognition.

Despite its name, MotionBERT is not a language-image model; its name just acknowledges its use of BERT’s pre-training and fine-tuning approach.

Its pre-training extracts 2D skeletons from a variety of motion capture and video data. Parts of these skeleton sequences are randomly masked, analogous to BERT’s masking of input tokens, and a motion encoder learns to recover the underlying 3D motion from these incomplete 2D observations.
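The masking step is the part most directly borrowed from BERT. The sketch below hides a random fraction of (frame, joint) positions in a 2D skeleton sequence by zeroing them and returns the mask so a training loop could compare the model’s reconstruction against the hidden joints. The mask ratio, the zero fill value, and the 17-joint layout are assumptions for illustration; the paper’s exact corruption scheme may differ (for example, it may also add noise).

```python
import random


def mask_skeleton(sequence, mask_ratio=0.15, seed=None):
    """Randomly hide a fraction of (frame, joint) positions in a 2D skeleton sequence.

    sequence: list of frames, each a list of (x, y) joint coordinates.
    Returns the masked copy and a boolean mask (True means hidden).
    """
    rng = random.Random(seed)
    positions = [(t, j) for t, frame in enumerate(sequence) for j in range(len(frame))]
    n_masked = round(mask_ratio * len(positions))
    hidden = set(rng.sample(positions, n_masked))
    masked = [[(0.0, 0.0) if (t, j) in hidden else joint
               for j, joint in enumerate(frame)]
              for t, frame in enumerate(sequence)]
    mask = [[(t, j) in hidden for j in range(len(frame))]
            for t, frame in enumerate(sequence)]
    return masked, mask


# 10 frames x 17 joints of made-up (x, y) coordinates.
sequence = [[(float(j), float(t)) for j in range(17)] for t in range(10)]
masked, mask = mask_skeleton(sequence, mask_ratio=0.2, seed=0)
print(sum(row.count(True) for row in mask))  # 34
```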

For HAR, the pre-trained motion encoder is combined with a classification head and fine-tuned end-to-end on action recognition datasets to generate activity labels.

In team sports like volleyball and football, players must learn both individual and group tactics to play well. In volleyball, for example, players use tactics like digging, blocking, and spiking. At the same time, groups of players use group tactics like the 6-2 offense or the W-formation that determine their positions and movements on the court.

Perfect execution of both individual and group roles is essential. Coaches and team managers can use HAR and group activity recognition (GAR) to identify the activities of players while training or playing, review mistakes, and plan new tactics.

GAR is difficult because a model must identify groups based on spatial movements, identify each individual’s activity, and label their collective activity. In this section, we explore a transformer model suited for this task.

GroupFormer’s distinguishing feature is that it recognizes individual and group activities simultaneously, much as people do.

GroupFormer is an end-to-end architecture that implements GAR using a stack of special transformers called clustered spatial-temporal transformers (CSTT).

First, a pre-trained I3D network, a 3D convolutional neural network (CNN), extracts the visual features of each player. These visual features are combined with human pose estimation features generated by AlphaPose to produce the final features for each player, which form the inputs to a stack of CSTTs.

Each CSTT is a transformer model with a spatial encoder-decoder pair, a temporal encoder-decoder pair, and a group decoder. The spatial encoder learns the spatial features and arrangements of the players. The temporal encoder learns changes in spatial features and arrangements over time.

Both spatial and temporal embeddings are sent to both decoders and then combined so that player features are augmented by their spatial and temporal contexts.

The group decoder combines these player features with a group representation to learn intra-group and inter-group dynamics.

The stack of CSTTs generates a final set of player features and group features. The player features are passed to an individual classification layer for player activity detection while the group features are passed to a group classification layer for GAR.
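The flow from per-player features to the two classification layers can be sketched without the transformer stack itself. In this illustration, concatenation stands in for however GroupFormer combines I3D and AlphaPose features, the CSTT stack is omitted entirely, and a mean over players stands in for the learned group representation; the heads, labels, and feature values are all hypothetical.

```python
def linear(x, weights, biases):
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]


def argmax(values):
    return max(range(len(values)), key=values.__getitem__)


def mean_vector(vectors):
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def recognize(visual_feats, pose_feats, ind_head, grp_head, ind_labels, grp_labels):
    """Return (per-player activity labels, group activity label)."""
    # Fuse each player's appearance and pose features by list concatenation.
    players = [v + p for v, p in zip(visual_feats, pose_feats)]
    # A stack of CSTTs would refine `players` (and a group representation) here; omitted.
    individual = [ind_labels[argmax(linear(p, *ind_head))] for p in players]
    group_feature = mean_vector(players)  # stand-in for the learned group representation
    group = grp_labels[argmax(linear(group_feature, *grp_head))]
    return individual, group


identity_head = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])  # (weights, biases)
individual, group = recognize(
    visual_feats=[[3.0], [0.0]], pose_feats=[[0.0], [2.0]],
    ind_head=identity_head, grp_head=identity_head,
    ind_labels=["spiking", "blocking"], grp_labels=["left spike", "right set"])
print(individual, group)  # ['spiking', 'blocking'] left spike
```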

The model is trained end-to-end on the Volleyball dataset, which has both individual and group action labels.

Retail businesses make considerable investments trying to understand customer behavior. Strategies like product placement, merchandising, and product assortment are used to appeal to customer psychology and get them to buy.

Since retail customers often browse, interact, discuss, and decide in groups (e.g., friends, couples, or families), one way retail businesses can understand customer behavior is through their individual, group, and global (surrounding) activities. Such a process is called panoramic activity recognition (PAR).

PAR refers to recognizing individual, group, and global activities simultaneously. Global activity here is the activity that a majority of people in the scene are doing. We explore a PAR model in this section.

The PAR model is a hierarchical graph neural network that models individual actions and social interactions from a video feed.

First, the visual features of each subject in the scene are extracted using a standard Inception-v3 CNN.

The graph network is a graph convolutional network (GCN) with three levels. Subject nodes are the first level, represented by their visual features and a spatial context vector based on edge weights to other subject nodes.
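A graph convolution updates each subject node by mixing in its neighbors’ features according to the edge weights. The sketch uses the widely used propagation rule from Kipf and Welling’s GCN (normalized adjacency with self-loops, then a weight matrix and ReLU) as an assumption; the PAR paper’s exact layer may differ, and the adjacency and weights here are hypothetical.

```python
import math


def gcn_layer(features, adjacency, weight):
    """One graph-convolution step: H' = ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(features)
    # Add self-loops so each node keeps part of its own features.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    # Symmetric normalization keeps feature magnitudes stable across node degrees.
    norm = [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]
    in_dim, out_dim = len(features[0]), len(weight[0])
    # Aggregate neighbor features weighted by the normalized edge weights.
    aggregated = [[sum(norm[i][k] * features[k][c] for k in range(n))
                   for c in range(in_dim)] for i in range(n)]
    # Project with the weight matrix and apply ReLU.
    return [[max(0.0, sum(aggregated[i][c] * weight[c][o] for c in range(in_dim)))
             for o in range(out_dim)] for i in range(n)]


# Two subjects connected by an edge of weight 1.0, one feature each.
print(gcn_layer([[1.0], [3.0]], [[0.0, 1.0], [1.0, 0.0]], [[1.0]]))  # [[2.0], [2.0]]
```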

Next in the hierarchy are group nodes. A relation matrix is determined using the spatial context matrix from earlier and a spatial distance matrix between subjects. An all-in-one feature aggregation model is used to aggregate subject nodes into group nodes.

The last level in the hierarchy is the global node representing the global activity of a majority of subjects. It uses all-in-one feature aggregation to combine group nodes and ungrouped subject nodes into a global node.
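The subject-to-group-to-global hierarchy can be sketched with two simplifications, both assumptions rather than the paper’s method: subjects are grouped when they stand within a hypothetical distance threshold of each other (connected components via union-find), and plain mean pooling stands in for the learned all-in-one feature aggregation. As in the text, the global node combines the group nodes with any ungrouped subjects.

```python
import math


def find_groups(positions, max_dist=1.5):
    """Cluster subjects whose pairwise distance is at most max_dist (union-find)."""
    parent = list(range(len(positions)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i in range(len(positions)):
        for j in range(i + 1, len(positions)):
            if math.dist(positions[i], positions[j]) <= max_dist:
                parent[find(i)] = find(j)
    clusters = {}
    for i in range(len(positions)):
        clusters.setdefault(find(i), []).append(i)
    groups = [members for members in clusters.values() if len(members) > 1]
    ungrouped = [members[0] for members in clusters.values() if len(members) == 1]
    return groups, ungrouped


def mean_vector(vectors):
    n = len(vectors)
    return [sum(v[i] for v in vectors) / n for i in range(len(vectors[0]))]


def build_hierarchy(features, positions, max_dist=1.5):
    groups, ungrouped = find_groups(positions, max_dist)
    group_nodes = [mean_vector([features[i] for i in g]) for g in groups]
    global_node = mean_vector(group_nodes + [features[i] for i in ungrouped])
    return groups, group_nodes, global_node


positions = [(0, 0), (1, 0), (10, 10), (11, 10), (50, 50)]  # made-up floor positions
features = [[1.0, 0.0], [3.0, 0.0], [0.0, 2.0], [0.0, 4.0], [6.0, 6.0]]
groups, group_nodes, global_node = build_hierarchy(features, positions)
print(groups, group_nodes)  # [[0, 1], [2, 3]] [[2.0, 0.0], [0.0, 3.0]]
```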

Finally, top-down feedback is used to cascade contextual information down the hierarchy of nodes.

A subject’s action is predicted using subject features and global features. A group’s action is predicted using group features (to which subject features were aggregated earlier) and global features. Finally, the global action is predicted using global features (to which group and subject features were aggregated).

The network is trained on JRDB-PAR, an annotation of the JRDB dataset, which contains multimodal data captured by a mobile robot in indoor and outdoor environments, making it suitable for recognition models that monitor retail spaces.

Correct form, posture, and technique are essential in fitness training, exercising, bodybuilding, yoga, and dancing. Getting them wrong can cause serious injuries. Fortunately, nowadays, practitioners can just run fitness apps on their smartphones for real-time analysis and guidance. For these analyses, such apps use machine learning techniques like pose detection and activity recognition on the device.

Similarly, people who need assistance due to health conditions also benefit from real-time pose detection and activity recognition running on their smartphones. In this section, we explore such a technique.

BlazePose is a lightweight network for human pose estimation that can achieve 30+ frames per second on mobile devices, making it suitable for real-time use cases like fitness training. It returns the image coordinates of 33 key points on the body — like the left shoulder, right knee, left heel, and more.

Most pose estimation techniques produce heat maps that predict the pixel-level likelihood of a joint. This approach is accurate but too heavy for a smartphone. An alternative approach uses regression to output keypoint coordinates, but its accuracy can be poor.
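The difference between the two output styles is easiest to see in how a single keypoint is decoded. In this sketch, with hypothetical grid and image sizes, a heatmap head outputs a whole grid of likelihoods per keypoint and the coordinate is read off its peak, while a regression head outputs the coordinate directly as two normalized numbers.

```python
def heatmap_to_keypoint(heatmap, image_width, image_height):
    """Decode one keypoint from its heatmap: take the peak cell, map it to pixels."""
    rows, cols = len(heatmap), len(heatmap[0])
    best_r, best_c = max(((r, c) for r in range(rows) for c in range(cols)),
                         key=lambda rc: heatmap[rc[0]][rc[1]])
    # Use the center of the winning cell, scaled from grid units to image pixels.
    x = (best_c + 0.5) * image_width / cols
    y = (best_r + 0.5) * image_height / rows
    return x, y


def regression_to_keypoint(normalized_xy, image_width, image_height):
    """Decode one keypoint from a regression head that outputs (x, y) in [0, 1]."""
    nx, ny = normalized_xy
    return nx * image_width, ny * image_height


heatmap = [[0.0] * 4 for _ in range(4)]
heatmap[1][2] = 1.0  # peak likelihood in row 1, column 2
print(heatmap_to_keypoint(heatmap, 256, 256))              # (160.0, 96.0)
print(regression_to_keypoint((0.625, 0.375), 256, 256))    # (160.0, 96.0)
```

For 33 keypoints, the heatmap approach produces 33 full grids that must be computed and searched, while the regression approach produces only a handful of numbers per keypoint, which is why regression is the cheaper option on a phone.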

BlazePose is a simple convolutional neural network that combines both of these approaches. During training, it produces heat maps using an encoder-decoder network. The embeddings from this encoder-decoder are combined with input image features and sent to a second encoder, a regression network, to produce the coordinates.

The key to its speed is that the heatmap output is only needed as a training signal. The heatmap and offset output layers are removed before inference, so the deployed model regresses keypoint coordinates directly while still benefiting from what the heatmap supervision taught its shared layers.

Activity recognition on smartphones, such as fall detection or epileptic seizure detection, can help alert caregivers and emergency services.

One approach to implementing activity recognition is using pose estimation coordinates. A lightweight sequential network, such as a long short-term memory (LSTM) recurrent neural network, receives BlazePose’s coordinates as inputs, analyzes how they change over the sequence of frames, and predicts an activity label.
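The sketch below shows the forward pass of such a sequence classifier: a single-layer LSTM reads one flattened frame of keypoint coordinates at a time (33 keypoints times (x, y) gives 66 inputs), and a linear layer with softmax turns the final hidden state into label probabilities. The weights are random and untrained, biases are omitted, and the labels and input values are hypothetical, so the predicted label is meaningless; only the structure is illustrated.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


class TinyLSTMClassifier:
    """Untrained single-layer LSTM plus linear/softmax head (illustration only)."""

    def __init__(self, input_size, hidden_size, labels, seed=0):
        rng = random.Random(seed)

        def init(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        self.labels = labels
        # One weight matrix per gate (input, forget, output, candidate),
        # applied to [current frame, previous hidden state] concatenated. No biases.
        self.gates = {g: init(hidden_size, input_size + hidden_size) for g in "ifog"}
        self.head = init(len(labels), hidden_size)

    def predict(self, sequence):
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for frame in sequence:
            z = frame + h  # list concatenation
            i = [sigmoid(v) for v in matvec(self.gates["i"], z)]
            f = [sigmoid(v) for v in matvec(self.gates["f"], z)]
            o = [sigmoid(v) for v in matvec(self.gates["o"], z)]
            g = [math.tanh(v) for v in matvec(self.gates["g"], z)]
            c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
            h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        logits = matvec(self.head, h)
        m = max(logits)
        exps = [math.exp(x - m) for x in logits]
        probs = [e / sum(exps) for e in exps]
        return self.labels[max(range(len(probs)), key=probs.__getitem__)], probs


# 10 frames of dummy, flattened BlazePose-style coordinates (33 keypoints x 2).
frames = [[0.5 + 0.01 * t] * 66 for t in range(10)]
model = TinyLSTMClassifier(input_size=66, hidden_size=8, labels=["normal", "fall"])
label, probs = model.predict(frames)
```

An LSTM suits this task because its cell state carries information across frames, so a sudden drop in hip coordinates can be judged against the motion that preceded it; in practice the weights would be trained on labeled pose sequences.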

You saw how HAR can be useful in many verticals. Applications like video search and HAR in videos are useful in any industry that produces educational, training, safety, product, sales, marketing, servicing, repair, and other such videos. With our expertise in deep learning and computer vision, we can help your business implement them. Contact us!