The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots and the power of large language models to help your e-commerce customers find the exact products they have in mind in your shop.

Traditional chatbots are evolving into far more capable artificial intelligence (AI)-enabled chatbots, thanks to large language models (LLMs) like GPT-4 and Llama 2. These LLM-based chatbots can hold human-like natural language conversations with your customers in just about any domain or industry.

However, we shouldn't forget that they're still software tools used by people. So chatbot implementations should follow the latest findings on user interface (UI) and user experience (UX) design, which are rooted in human psychology and human-computer interaction (HCI).

In this article, we’ll explore the question: Do users prefer chatbots or menu-based UIs for tasks like online shopping and travel planning? We’ll see what the latest psychology and HCI research has to say and how to integrate its lessons into our chatbots.

What do we mean by menu-based interfaces? They're the typical UIs you see on online shopping and other e-commerce websites and mobile apps.

Here are the top-level menu choices of a popular online retailer:

A typical filtered search UI of these websites shows product-specific filters like these:

Their smartphone app interfaces show similar filters but with different layouts:

To be honest, such menu-based interfaces haven't significantly changed in more than two decades. Most users are likely to be familiar with a variety of them.

Chatbots come in several types. Below is a typical customer service chatbot UI, often backed by a live agent:

Before LLMs, chatbot responses tended to be restricted to a small set of predefined choices, after which the chatbot would seamlessly hand the conversation over to a human agent. The versatility of LLMs has reduced the need for this.

Nowadays, some businesses integrate keyword recognition-based chatbots into popular messaging apps like Facebook Messenger, WhatsApp, Slack, Skype, and Telegram:

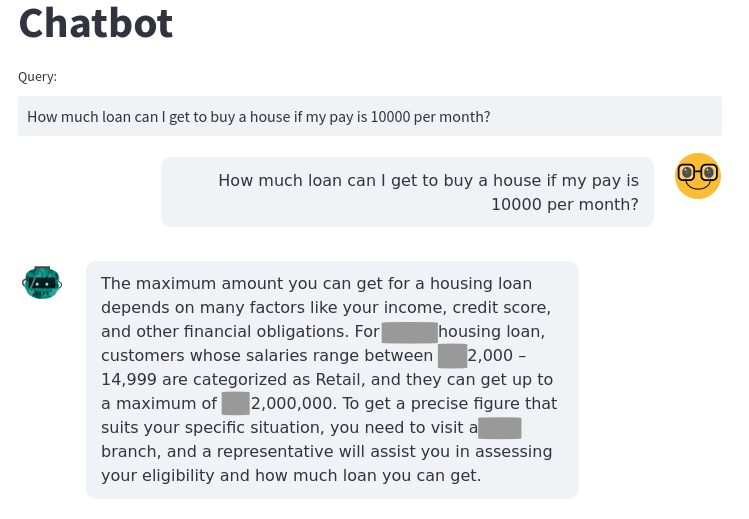
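To make the contrast with LLM-based bots concrete, here's a minimal sketch of how a keyword recognition-based bot with a human handoff might work. The intents, keywords, canned replies, and the two-miss handoff threshold are hypothetical choices for illustration, not any messaging platform's API.

```python
import re

# Hypothetical intents, each triggered by any one of its keywords
KEYWORD_INTENTS = {
    "order_status": {"order", "tracking", "shipped", "delivery"},
    "returns": {"return", "refund", "exchange"},
    "hours": {"hours", "open", "closing"},
}

CANNED_REPLIES = {
    "order_status": "You can track your order from the link in your confirmation email.",
    "returns": "Returns are accepted within 30 days. Want me to start one?",
    "hours": "Our support team is available 9am to 6pm, Monday to Friday.",
}

FALLBACK = "Sorry, I didn't get that. You can ask about orders, returns, or opening hours."
HANDOFF = "Let me connect you with a member of our team."


class KeywordBot:
    """Matches keywords against a fixed intent list and escalates to a human after repeated misses."""

    def __init__(self, max_misses: int = 2):
        self.max_misses = max_misses
        self.misses = 0  # consecutive unrecognized messages

    def reply(self, message: str) -> str:
        words = set(re.findall(r"[a-z]+", message.lower()))
        for intent, keywords in KEYWORD_INTENTS.items():
            if words & keywords:
                self.misses = 0
                return CANNED_REPLIES[intent]
        self.misses += 1
        if self.misses >= self.max_misses:
            return HANDOFF  # a real deployment would route the chat to a live agent here
        return FALLBACK


bot = KeywordBot()
print(bot.reply("Where is my order?"))          # matches "order"
print(bot.reply("Do you sell gift wrap?"))      # no keyword matched: FALLBACK
print(bot.reply("Can I pay in installments?"))  # second miss in a row: HANDOFF
```

The last message is a perfectly reasonable question, but no keyword covers it, which is exactly the kind of gap that LLM-based bots narrow.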

Today’s LLMs are good enough to answer complex customer queries. In the example below, a customer support chatbot for a bank answers questions based on frequently asked questions (FAQs), knowledge bases, or business documents:
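A minimal sketch of the retrieval step behind such an FAQ bot follows: it scores each stored question against the customer's query with bag-of-words cosine similarity and returns the best answer above a threshold. The FAQ entries, stopword list, and `min_score` value are hypothetical. LLM-based systems typically use embeddings instead of word counts and pass the retrieved text to the model as context, so treat this as an illustration of the idea rather than a production design.

```python
import math
import re
from collections import Counter

# Hypothetical FAQ entries; a real bank would load these from its knowledge base
FAQS = {
    "How do I reset my online banking password?": "Use the 'Forgot password' link on the login page.",
    "What are your branch opening hours?": "Most branches are open 9am to 5pm, Monday to Friday.",
    "How can I report a lost card?": "Freeze the card in the app or call our card hotline.",
}

STOPWORDS = {"a", "an", "the", "i", "my", "do", "how", "what", "can", "should",
             "is", "are", "your", "to", "of", "in"}


def tokens(text: str) -> Counter:
    """Bag of lowercase words, minus common stopwords."""
    return Counter(w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_faq_answer(query: str, min_score: float = 0.2):
    """Return the answer of the most similar FAQ question, or None if nothing is close enough."""
    q = tokens(query)
    score, question = max((cosine(q, tokens(faq)), faq) for faq in FAQS)
    return FAQS[question] if score >= min_score else None


print(best_faq_answer("I lost my card, what should I do?"))
print(best_faq_answer("forgot my banking password"))
print(best_faq_answer("When do branches open?"))  # "branches" never matches "branch"
```

The last query returns `None` because exact word overlap misses "branches" versus "branch", which is one reason semantic embeddings are generally preferred for this step.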

Chatbot UIs may also mix natural language queries and responses with menu-based visual choices, like this button-based chatbot below:

Voice bots use speech recognition instead of typed input and often integrate with virtual assistants like Amazon's Alexa or Apple's Siri.

But how effective are all these chatbot UIs? Do your customers actually like them, and do they prefer them to speaking to an actual human?

In the paper User Interactions With Chatbot Interfaces vs. Menu-Based Interfaces: An Empirical Study, the researchers Quynh N. Nguyen, Anna Sidorova, and Russell Torres studied user interface preferences from the perspective of a psychological theory of motivation called the self-determination theory (SDT).

SDT explores the role of motivation behind people’s actions and the factors that influence it. The researchers proposed a theory of how these factors shape our user interface preferences for websites and apps, then tested it through surveys and data analysis.

We'll outline their theory and its underlying concepts next. We'll also infer practical lessons of use to conversational interface implementers, machine learning engineers, natural language processing (NLP) specialists, and artificial intelligence practitioners.

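Motivation comes in two varieties: intrinsic motivation, which comes from the inherent satisfaction of doing a task, and extrinsic motivation, which comes from external rewards like praise, money, or recognition.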

UI preferences for a certain task may relate to both. For example, a comprehensive search UI may feel more thorough and satisfying to some users, while mastering a complex chatbot may earn more praise from peers in some situations.

In this article, we'll focus on intrinsic motivation and the aspects that influence it.

Building on SDT, the researchers considered six psychological aspects: three needs, two outcomes, and one additional factor. Together they influence a user's sense of satisfaction and thereby foster intrinsic motivation. These six aspects and their relevance to user interfaces are explained below.

Perceived autonomy is the level of control a person feels over a task and its outcomes. It's one of the three psychological needs that influence satisfaction.

With visual choices like menu selections and buttons, a menu-based interface gives users a lot of control over how they translate their intentions into outcomes. Such interfaces have higher perceived autonomy.

In contrast, a chatbot interface has a textbox where the user can type anything relevant to the website. The idea is to make the interaction natural and unrestricted, like talking to a person. A user is expected to provide useful high-level descriptions of their needs in human language. The chatbot technology assumes responsibility for translating those needs into appropriate choices.

However, chatbots typically don't provide any hints on what to say or what the possible outcomes are. Therefore, users may feel less autonomy when interacting with them.

Cognitive effort is the mental capacity allocated for obtaining and processing the information required for a task.

Menu-based interfaces may require relatively more cognitive effort because more visual elements increase the visual cognitive load. Understanding the range of possible menu choices, inputs, and behaviors adds to that load.

It's generally assumed that chatbots require lower levels of cognitive effort because users can talk naturally to them without being distracted by visual elements or restricted by a small number of choices. Indeed, the AI industry often sells simplicity of interaction as the biggest benefit of chatbots.

Perceived competence is one of the three psychological needs and is related to the level of confidence a person feels about achieving a goal or completing a task. If a user feels incompetent, they may abandon a task or refuse to engage with it in the future.

Both autonomy and cognitive effort are expected to influence perceived competence. If a user has more autonomy, they feel in control and confident that they can do the task. However, if the task involves high cognitive effort, they may feel incompetent.

Relatedness is the ability to relate to a system based on characteristics like personality, tone, voice, attitude, and similar traits.

It's actually one of the three psychological needs, but it's not in the scope of the researchers' study or this article. So we move on to the outcome aspects.

Performance satisfaction is the level of satisfaction that the user feels from achieving the goal as planned. Users may evaluate their performance subjectively based on the time it took, the number of mistakes made, the adequacy of the information provided, and other such factors.

With more autonomy, users are likely to take responsibility for the outcomes, whether positive or negative. But when they feel lower autonomy, they're likely to attribute both successes and errors to the system rather than themselves.

Competence and cognitive effort are also likely to influence performance satisfaction.

System satisfaction is the extent to which users are pleased with a system and its interactions. Here, too, we can guess that the other aspects like autonomy and cognitive effort impact the level of satisfaction with the system.

These aspects are interconnected in many ways. Nguyen, Sidorova, and Torres proposed these 10 hypotheses to model their relationships:

A positive effect means that as the first aspect increases, the other aspect also increases. A negative effect means that as the first aspect increases, the other aspect decreases.

These hypotheses are illustrated visually below:

Nguyen, Sidorova, and Torres recruited university students to experiment with the two interfaces. They chose online travel planning tasks because most people were likely to be familiar with them.

Users were asked to complete two tasks.

1. Business task — Acting as office administrators, the study participants were required to search for flight tickets and hotel rooms for a coworker. The departure and destination airports, and the budgets, were fixed by the experimenters.

2. Personal task — Study participants had to plan their personal vacations. They were free to decide on airports and their budget.

The participants had to do these tasks first using a menu-based travel search website and then repeat them using the same website's chatbot. The now-defunct Hipmunk travel website and chatbot were used.

The chatbot supported natural language queries like "I’d like to book a flight from New York to Los Angeles and stay there from June 17 to 21. I prefer American Airlines."
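A query like that maps onto a handful of structured fields, and the chatbot's job is to perform that translation. The sketch below does it with a few regular expressions. It's deliberately naive and purely illustrative: we don't know how Hipmunk's chatbot parsed requests, an LLM-based bot would extract these fields far more robustly, and `TripRequest` and `parse_trip_request` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

MONTHS = ("January|February|March|April|May|June|July|"
          "August|September|October|November|December")


@dataclass
class TripRequest:
    origin: Optional[str] = None
    destination: Optional[str] = None
    check_in: Optional[str] = None
    check_out: Optional[str] = None
    airline: Optional[str] = None


def parse_trip_request(text: str) -> TripRequest:
    req = TripRequest()
    # "from <City> to <City>" followed by "and", a comma, a period, or the end of the text
    route = re.search(r"from ([A-Z][\w ]+?) to ([A-Z][\w ]+?)(?: and|,|\.|$)", text)
    if route:
        req.origin, req.destination = route.groups()
    # "from June 17 to 21" or "from June 17 to June 21"
    dates = re.search(rf"from ((?:{MONTHS}) \d{{1,2}}) to ((?:(?:{MONTHS}) )?\d{{1,2}})\b", text)
    if dates:
        req.check_in, end = dates.groups()
        # Reuse the start month when the end date omits it
        req.check_out = f"{req.check_in.split()[0]} {end}" if end.isdigit() else end
    airline = re.search(r"I prefer ([A-Z]\w*(?: [A-Z]\w*)*)", text)
    if airline:
        req.airline = airline.group(1)
    return req


print(parse_trip_request(
    "I’d like to book a flight from New York to Los Angeles and stay there "
    "from June 17 to 21. I prefer American Airlines."
))
```

Even this toy hints at why chatbots can shift effort onto users: phrase the dates as "the 17th through the 21st" and the parser leaves them empty, so the bot has to ask again.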

After completing the tasks, users filled out questionnaires on the perceived autonomy, cognitive effort, competence, performance satisfaction, and system satisfaction of each interface.

The researchers tested their model using partial least squares path modeling, a variance-based structural equation modeling approach. Hypothesis testing was used to examine whether the relationships were statistically significant.
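Partial least squares path modeling estimates all paths jointly from latent constructs built out of questionnaire items, which is beyond a short example. As a simplified stand-in, the sketch below estimates a single standardized path (with one predictor, this equals Pearson's correlation) and uses a percentile bootstrap, the resampling approach PLS-SEM tools commonly rely on, to judge whether the effect is significant. The variable names and synthetic data are hypothetical, not the study's data. A negative coefficient is a "negative effect" in the sense defined earlier: satisfaction falls as effort rises.

```python
import math
import random


def standardize(values):
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))  # sample standard deviation
    return [(v - mean) / sd for v in values]


def path_coefficient(x, y):
    """Standardized slope of y on x; with a single predictor this equals Pearson's r."""
    zx, zy = standardize(x), standardize(y)
    return sum(a * b for a, b in zip(zx, zy)) / (len(x) - 1)


def bootstrap_ci(x, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the path coefficient."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents with replacement
        estimates.append(path_coefficient([x[i] for i in idx], [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int(n_boot * alpha / 2)]
    upper = estimates[int(n_boot * (1 - alpha / 2)) - 1]
    return lower, upper


# Synthetic survey scores: higher cognitive effort, lower system satisfaction
rng = random.Random(42)
effort = [rng.gauss(0, 1) for _ in range(200)]
satisfaction = [-0.6 * e + rng.gauss(0, 0.8) for e in effort]

beta = path_coefficient(effort, satisfaction)
lower, upper = bootstrap_ci(effort, satisfaction)
significant = lower > 0 or upper < 0  # the interval excludes zero
print(f"beta={beta:.2f}, 95% CI=({lower:.2f}, {upper:.2f}), significant={significant}")
```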

The results threw up a couple of surprises. The results of the hypotheses are shown below:

A quick summary before diving in:

1. Chatbots need more cognitive effort than menu-based interfaces.

2. Chatbots result in lower perceived autonomy.

3. Chatbots lead to lower user satisfaction.

4. Cognitive effort did not have a negative effect on performance satisfaction.

5. Cognitive effort has a strong negative effect on system satisfaction when using chatbots.

6. Cognitive effort did not show a significant negative effect on system satisfaction with menu-based interfaces.

7. All the other hypotheses were supported by the data.

Let’s talk a bit about these results.

We’ll explain the above observations in more detail here and also cover some of the limitations that may play a part in the results.

Why did the study show that chatbots require more cognitive effort, not less? The researchers suggested some possibilities.

One possibility is that the experimental setup failed to account for differences in the participants’ familiarity with the two interfaces. About 75% of the participants had never used chatbots for travel planning, but only 12% to 16% were new to travel and hotel booking websites. Although the participants got rudimentary training on both interfaces, their past experience with booking websites likely reduced their cognitive effort on the menu-based interface considerably.

But another possibility is that the cognitive effort for chatbots is actually higher, even if we control for familiarity. Providing proper commands and necessary details without any helpful visual elements may indeed be more difficult for our minds. We’ll suggest some techniques to address this possibility in the next section.

What about the surprising observation that higher cognitive effort did not lead to lower performance satisfaction? One possibility is that when we expend more cognitive effort, we feel a need to justify it by telling ourselves we're satisfied with our performance. Believing otherwise would create cognitive dissonance.

Higher cognitive effort had a strong negative effect on system satisfaction when using chatbots, but the effect was not significant with menu-based interfaces.

One reason could be that since most participants were already familiar with menu interfaces for booking travel and reported lower cognitive effort, there wasn't sufficient variability in effort to detect a statistically significant effect on system satisfaction.

Another possibility the researchers theorize is that users attribute their high cognitive effort to the system only when using chatbots but to something else, such as task difficulty, when using menu-based interfaces. Further studies are needed to test this theory.

The menu-based interface showed high system satisfaction for the business task with fixed criteria — that is, predetermined airports and budgets — but scored low for the personal task with open-ended criteria — where participants were able to choose their airports and budget.

This suggests that providing improved chatbot experiences for tasks with open-ended criteria may lead to higher overall satisfaction for your users.

Demographic factors like age, gender, and education level showed no correlation with performance or system satisfaction for any interface or task. However, the researchers pointed out that their sample was quite skewed because almost 50% of the participants were 18 to 21 years old.

The research studies just one industry use case — travel — and one pair of menu-based and chatbot interfaces. These explanations and theories may not apply to other industries like online shopping or financial services.

Given all these observations, how can you improve your chatbot and conversational AI implementations, either proactively or reactively? We draw some important lessons below.

Most software and AI engineers tend to assume that users find chatbots simpler because the interaction is conversational. But that assumption may not hold for either your novice or your power users. It greatly depends on their prior experiences with chatbot interfaces, and possibly even their domain knowledge.

So assume the opposite, and look for ways to deliberately reduce the cognitive load with chatbots. For example:

Offering some training to new users on using your chatbot may help some of them reduce their cognitive effort.

The example below shows a chatbot that reduces the cognitive load by asking simple questions to elicit the criteria the user has in mind and even offers choices that the user can pick from.
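The elicitation pattern can be sketched as a simple slot-filling loop: the bot asks for one missing criterion at a time and attaches clickable choices, so users never face an empty textbox with no hints. The slot names, questions, and choices here are hypothetical, and an LLM-based bot would typically also extract slots from free-text answers rather than relying only on button clicks.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Slot:
    name: str
    question: str
    choices: list[str]


# Hypothetical shopping criteria, asked one at a time in this order
SLOTS = [
    Slot("category", "What are you shopping for today?", ["Shoes", "Jackets", "Bags"]),
    Slot("budget", "What's your budget?", ["Under $50", "$50 to $100", "Over $100"]),
    Slot("color", "Any color preference?", ["Black", "Brown", "No preference"]),
]


def next_prompt(filled: dict[str, str]) -> dict | None:
    """Return the next simple question with clickable choices, or None when all criteria are known."""
    for slot in SLOTS:
        if slot.name not in filled:
            return {"text": slot.question, "choices": slot.choices}
    return None  # every criterion is filled, so the bot can run the product search


def record_answer(filled: dict[str, str], answer: str) -> dict[str, str]:
    """Store a clicked choice (or typed answer) under the first unfilled slot."""
    for slot in SLOTS:
        if slot.name not in filled:
            return {**filled, slot.name: answer}
    return filled


state = {}
print(next_prompt(state))
state = record_answer(state, "Shoes")
print(next_prompt(state))
```

Because each question carries its own choices, the user can answer with a single tap, which also supplies the hints whose absence lowers perceived autonomy.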

In the second example below, we demonstrate another technique that may help your users reduce their cognitive load: implement your chatbot using a prompting strategy like ReAct, which interleaves explicit reasoning steps with actions, so that it's no longer a black box but something whose decisions and thinking are transparent to your users.

By revealing how your chatbot is thinking at every step, you're reducing your user's cognitive load, enabling them to provide better descriptions or backtrack to an earlier point, and even increasing the degree of control they perceive over your chatbot.
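Here's a minimal sketch of that idea: a ReAct-style loop in which the model emits a Thought and an Action, the code runs the action and feeds back an Observation, and every thought and observation is collected so the chat UI can show it. The `scripted_llm` function is a hard-coded stand-in for a real model call, and `search_products` and its catalog are hypothetical; a production prompt would also include instructions and few-shot examples of the Thought/Action/Observation format.

```python
from __future__ import annotations

import re
from typing import Callable


def search_products(query: str) -> str:
    # Hypothetical catalog lookup; a real bot would call your store's product search
    catalog = {"running shoes": "Aero 2 ($89), Stride ($65), Pace X ($120)"}
    return catalog.get(query.lower(), "no matching products")


TOOLS: dict[str, Callable[[str], str]] = {"search": search_products}
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def react_loop(llm: Callable[[str], str], question: str, max_steps: int = 5):
    """Run Thought -> Action -> Observation cycles, returning the answer and user-visible steps."""
    transcript = f"Question: {question}\n"
    visible_steps = []  # shown in the chat UI so the bot's reasoning isn't a black box
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        thought = re.search(r"Thought: (.*)", step)
        if thought:
            visible_steps.append(f"Thinking: {thought.group(1)}")
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), visible_steps
        action = ACTION_RE.search(step)
        if action:
            tool, arg = action.groups()
            observation = TOOLS[tool](arg) if tool in TOOLS else f"unknown tool '{tool}'"
            transcript += f"Observation: {observation}\n"
            visible_steps.append(f"Searched '{arg}': {observation}")
    return None, visible_steps  # gave up after max_steps


def scripted_llm(transcript: str) -> str:
    # Stand-in for a real LLM call so the sketch runs offline
    if "Observation:" not in transcript:
        return ("Thought: The shopper wants running shoes under $100, so I should search the catalog.\n"
                "Action: search[running shoes]")
    return ("Thought: Aero 2 and Stride are under $100.\n"
            "Final Answer: Aero 2 ($89) and Stride ($65) fit your budget.")


answer, steps = react_loop(scripted_llm, "Any running shoes under $100?")
for s in steps:
    print(s)
print(answer)
```

Showing the search the bot chose lets a shopper spot a misinterpretation (say, it searched for running shoes when they wanted trail shoes) and correct it right away.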

Assume that significant sections of your user base feel that your chatbots are reducing their autonomy. Provide ways to make them feel in control. The suggestions in the previous section to reduce cognitive load also help improve autonomy. In addition:

In this actual online shopping chatbot from Width.ai, notice how we incorporate visual elements and choices in the chatbot UI:
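Independent of any particular product, the underlying idea can be sketched as a message payload that pairs chatbot text with product cards, the filters the bot inferred, and buttons to change them. This is a hypothetical schema for illustration, not Width.ai's implementation or any chat platform's message format; a front end would render each field as a visual element.

```python
from __future__ import annotations

import json
from dataclasses import asdict, dataclass, field


@dataclass
class ProductCard:
    title: str
    price: float
    url: str


@dataclass
class BotMessage:
    text: str
    cards: list[ProductCard] = field(default_factory=list)
    filters: dict[str, str] = field(default_factory=dict)  # how the bot interpreted the request
    buttons: list[str] = field(default_factory=list)


def results_message(products: list[ProductCard], filters: dict[str, str]) -> BotMessage:
    """Pair product results with visible, editable filters so the user stays in control."""
    count = len(products)
    noun = "product" if count == 1 else "products"
    buttons = [f"Change {name}" for name in filters] + ["Show more", "Start over"]
    return BotMessage(
        text=f"I found {count} {noun} matching your request.",
        cards=products,
        filters=filters,
        buttons=buttons,
    )


msg = results_message(
    [ProductCard("Trail Runner", 79.0, "https://example.com/p/1")],
    {"category": "Shoes", "budget": "Under $100"},
)
print(json.dumps(asdict(msg), indent=2))  # payload a chat front end could render
```

Surfacing the inferred filters as editable buttons makes the bot's interpretation visible and reversible, which targets the perceived-autonomy gap directly.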

If your chatbots are self-deployed and self-managed, you may have access to rich data about your user interactions from log files or other metrics. This includes data like:

Use such data to optimize your chatbot dialogue flows.
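For example, a simple funnel analysis over logged dialogue steps shows where users abandon the conversation. The sketch assumes a hypothetical log format of `(session_id, step)` events and a hypothetical four-step flow; adapt both to whatever your logging actually records.

```python
# Hypothetical dialogue flow, in the order users are expected to move through it
FUNNEL = ["greeting", "criteria", "results", "add_to_cart"]


def funnel_dropoff(events):
    """events: iterable of (session_id, step) pairs from chatbot logs.

    Returns (step, next_step, sessions_at_step, drop_off_rate) for each transition.
    """
    reached = {step: set() for step in FUNNEL}
    for session_id, step in events:
        if step in reached:
            reached[step].add(session_id)
    report = []
    for step, next_step in zip(FUNNEL, FUNNEL[1:]):
        at_step = reached[step]
        continued = at_step & reached[next_step]  # sessions that made it to the next step
        rate = 1 - len(continued) / len(at_step) if at_step else 0.0
        report.append((step, next_step, len(at_step), round(rate, 2)))
    return report


logs = [
    ("s1", "greeting"), ("s1", "criteria"), ("s1", "results"), ("s1", "add_to_cart"),
    ("s2", "greeting"), ("s2", "criteria"),
    ("s3", "greeting"),
    ("s4", "greeting"), ("s4", "criteria"), ("s4", "results"),
]
for row in funnel_dropoff(logs):
    print(row)
```

A step with an unusually high drop-off (in this toy log, one in three sessions that reached the criteria step never saw results) is a good candidate for the cognitive-load and autonomy techniques described above.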

In this article, you saw how chatbots are much more than frontend UIs for GPT-4. Building high-quality contextual chatbots requires knowledge of research from fields like human-computer interaction and psychology.

At Width.ai, we pay close attention to such interdisciplinary research to implement the most effective chatbots for your business needs in terms of customer engagement and customer satisfaction. Contact us to learn more about integrating AI chatbots into your business workflows.