The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots and the power of large language models to help your e-commerce customers find the exact products they have in mind in your shop.

New large language models (LLMs) with various architectural improvements are being created and published practically every month. In this shootout, we try to find which is the best open-source LLM for summarization and chatbot use cases as of November 2023.

First, an overview of the terminology we'll be using since the term "open-source LLM" can mean different things to different people:

In this article, we use "open-source LLM" in two senses. First, we use it as an umbrella term that covers all these possibilities.

Second, we use "open-source" in a narrower sense: the LLM's actual code, like the example given above, is publicly available on GitHub or some other repository, including the scripts to train, test, or fine-tune the model with the same methodology the authors used. When required in tables and elsewhere, we use the term in this narrower sense to indicate specifically that the code is published.

We also employ two other terms to disambiguate:

Combinations of these terms enable accurate descriptions of any model. For example, an open-source, closed-access, non-commercial LLM implies that its code is published but the model weights aren't, and even if you train your own model using that code, its commercial use isn't allowed.
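The way these terms combine can be sketched as a small data structure. This is only an illustration: the class name `ModelAvailability`, its field names, and the opposite labels ("closed-source", "open-access", "commercial") are assumptions made by symmetry with the example above, not terminology defined elsewhere in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelAvailability:
    """Hypothetical record of how a model is published and licensed."""

    code_published: bool          # "open-source" in the narrow sense
    weights_published: bool       # open-access vs. closed-access
    commercial_use_allowed: bool  # commercial vs. non-commercial

    def describe(self) -> str:
        # The opposite labels used here are assumed by symmetry with
        # the example given in the text.
        return ", ".join([
            "open-source" if self.code_published else "closed-source",
            "open-access" if self.weights_published else "closed-access",
            "commercial" if self.commercial_use_allowed else "non-commercial",
        ])


# The example from the text: code is published, weights are not,
# and commercial use is not allowed.
example = ModelAvailability(
    code_published=True,
    weights_published=False,
    commercial_use_allowed=False,
)
print(example.describe())
```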

For long text summarization, a pretrained foundation LLM must be fine-tuned for instruction-following. The goal is to give the LLM a better understanding of what a quality output for a given instruction looks like. This helps the model tell apart the summaries expected from prompts such as:

Write a 5 word summary that focuses on “xyz”

Write a 5 word extractive summary that focuses on “xyz” in the tone “x”

The underlying model probably doesn't have a deep understanding of how the two prompts differ in tone and type of summary. Fine-tuning on pairs of instructions and their expected summaries strengthens this relationship.
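As a rough illustration of what such instruction-tuning data might look like, the sketch below pairs each instruction with an input document and the desired summary, then serializes the pairs as JSON Lines. The field names `instruction`, `input`, and `output` follow a common convention but are an assumption here, as are the helper names; the angle-bracket strings are placeholders, not real data.

```python
import json
from typing import Dict, List


def build_record(instruction: str, document: str, summary: str) -> Dict[str, str]:
    """Pair an instruction and its input text with the desired output."""
    return {"instruction": instruction, "input": document, "output": summary}


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize the records as one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Placeholder values; a real dataset would contain actual documents and
# reference summaries written or approved by people.
records = [
    build_record(
        "Write a 5 word summary that focuses on “xyz”",
        "<document text>",
        "<reference abstractive summary>",
    ),
    build_record(
        "Write a 5 word extractive summary that focuses on “xyz” in the tone “x”",
        "<document text>",
        "<reference extractive summary>",
    ),
]

print(to_jsonl(records))
```

Keeping the same document under different instructions is what lets the model learn how the instruction, rather than the input, changes the expected output.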

Preferably, the LLM should be further fine-tuned for human alignment — that is, the ability to match typical human preferences — using techniques like reinforcement learning from human feedback (RLHF) or direct preference optimization.

In the following sections, we explain how we tested prominent open-source LLMs on long text abstractive and extractive summarization tasks and what the results look like.

First, we had to shortlist the LLMs to test. For this, we first categorized LLMs into three size-based groups based on how they're used by different customer segments:

Next, the models under each category were shortlisted using public leaderboards that compare their performance on various tasks and benchmarks using automated testing.
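The size-based grouping can be expressed as a simple lookup on parameter count. The sketch below uses the three group labels that appear later in this article (30B+, 10-20B, and sub-10B); the function name `size_category` and the decision to return `None` for sizes that fall between the groups are assumptions.

```python
from typing import Optional


def size_category(params_billions: float) -> Optional[str]:
    """Map a parameter count (in billions) to one of the three groups."""
    if params_billions >= 30:
        return "30B+"
    if 10 <= params_billions <= 20:
        return "10-20B"
    if params_billions < 10:
        return "sub-10B"
    # Sizes between 20B and 30B are not covered by the three groups.
    return None


for name, size in [("Llama 2 70B", 70), ("Llama 2 13B", 13), ("Mistral 7B", 7)]:
    print(name, "->", size_category(size))
```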

With the models decided, we selected three domains where summarization is frequently necessary for both business and personal uses:

For each domain, we selected a long-text dataset and picked an example that is representative of documents and reports in its respective field.

Finally, we requested the shortlisted models to summarize our test documents with the same prompts and the same chunk size for a recursive chunking strategy. The prompts asked the models to ensure factual accuracy and completeness.

Our prompt for abstractive summarization was: "Write a summary that is factually accurate and covers all important information for the following text."
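A recursive chunking strategy of this kind can be sketched as follows. This is a minimal illustration under stated assumptions, not the exact harness used for these tests: the `llm` argument is a hypothetical callable that takes a prompt string and returns the model's text, chunks are measured in whitespace-separated words rather than model tokens, and `max_rounds` is an added safety limit.

```python
from typing import Callable, List

ABSTRACTIVE_PROMPT = (
    "Write a summary that is factually accurate and covers all important "
    "information for the following text."
)


def split_into_chunks(text: str, chunk_size: int) -> List[str]:
    """Split text into chunks of at most chunk_size words (assumed unit)."""
    words = text.split()
    return [
        " ".join(words[i:i + chunk_size])
        for i in range(0, len(words), chunk_size)
    ]


def recursive_summarize(
    text: str,
    llm: Callable[[str], str],  # hypothetical: prompt in, completion out
    chunk_size: int,
    prompt: str = ABSTRACTIVE_PROMPT,
    max_rounds: int = 10,
) -> str:
    """Summarize chunks, then summarize the joined summaries, until the
    text fits in a single chunk; finish with one summary of what is left."""
    for _ in range(max_rounds):
        chunks = split_into_chunks(text, chunk_size)
        if len(chunks) <= 1:
            break
        # Same prompt for every chunk, as in the tests described above.
        text = " ".join(llm(f"{prompt}\n\n{chunk}") for chunk in chunks)
    # If max_rounds is exhausted, the remaining text may still exceed
    # chunk_size; it is passed to the model as-is.
    return llm(f"{prompt}\n\n{text}")
```

Any client that wraps a hosted or local model in a `prompt -> text` function can be passed as `llm`, which keeps the chunking logic independent of where each model is deployed.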

For extractive summarization, our prompt was: "Select between 2 and 6 important sentences verbatim from every section of this text that convey all the main ideas of the section."
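Because this prompt asks for verbatim sentences and a fixed count per section, a model's output can be checked mechanically. The sketch below is one possible check, not part of the original test setup; the function names are hypothetical, and it assumes the model's output has already been split into a list of sentences.

```python
import re
from typing import List


def normalize(text: str) -> str:
    """Collapse runs of whitespace so line wrapping doesn't break matching."""
    return re.sub(r"\s+", " ", text).strip()


def non_verbatim_sentences(section: str, selected: List[str]) -> List[str]:
    """Return the selected sentences that do not appear verbatim in the section."""
    haystack = normalize(section)
    return [
        s for s in selected
        if not normalize(s) or normalize(s) not in haystack
    ]


def follows_extractive_prompt(
    section: str,
    selected: List[str],
    min_count: int = 2,
    max_count: int = 6,
) -> bool:
    """True if 2 to 6 sentences were selected and all of them are verbatim."""
    count_ok = min_count <= len(selected) <= max_count
    return count_ok and not non_verbatim_sentences(section, selected)
```

A check like this only catches paraphrased or invented sentences and wrong counts; whether the selected sentences actually convey the main ideas still needs a human or model-based judgment.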

Unfortunately, the deployment of models isn't a solved problem yet. There's no single service where every LLM is readily available or easily deployable. Hugging Face comes close, but other LLM service providers like Replicate or SageMaker can be more convenient or performant for some models. We used the most convenient option for each model.

We explain the testing in more detail in the following sections.

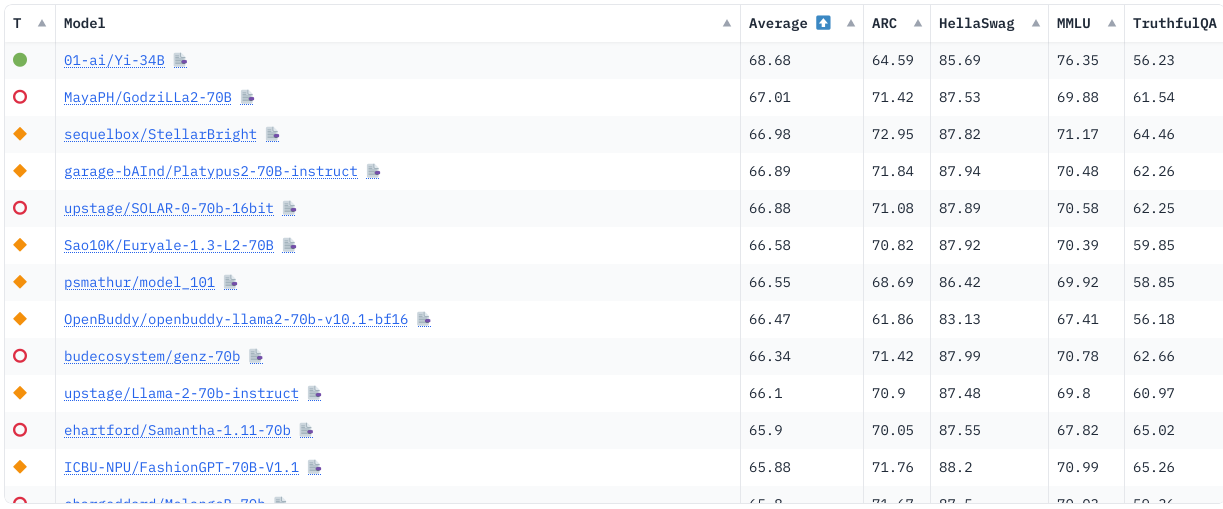

We used the scores on two popular leaderboards as qualifying criteria to shortlist the open-source LLMs to test.

The Hugging Face open LLM leaderboard measures LLMs on a variety of tasks including:

Many of the names on this leaderboard may seem unfamiliar. But when you dig into their details and descriptions, you find that they're fine-tuned versions of popular foundation models like Llama 2.

Another helpful leaderboard for the best open-source LLMs comes from the GPT4All project, which focuses on optimizing open-source LLMs for everyday use on consumer-grade hardware and mobile devices.

These sections list the datasets and sample documents we selected for our tests.

We selected the following medical report from the Kaggle medical transcriptions dataset because it was the longest at just above 4,000 tokens:

We selected the longest legal document in the Contract Understanding Atticus Dataset (CUAD). At 60,000+ tokens, this document is a challenge for good-quality summarization with ample coverage:

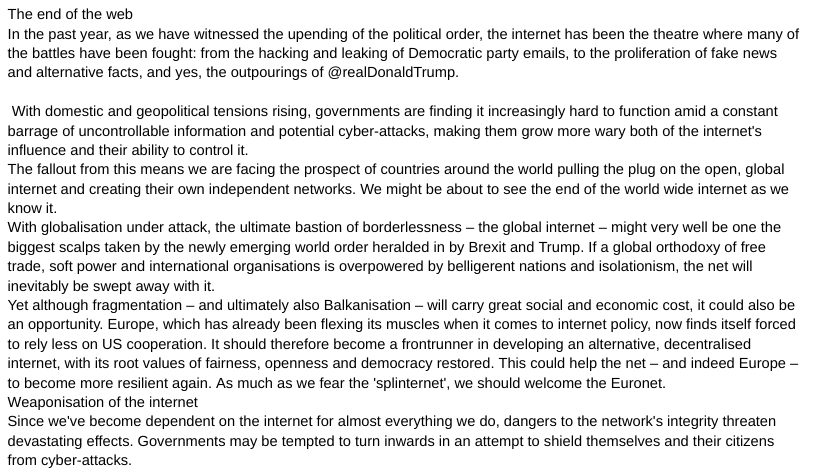

We selected the following article, titled "The end of the web," from the "question answering with long input texts, yes!" (QuALITY) dataset because such a vague yet ominous title compels many readers to seek a summary of what the article is all about.

We describe how the 30B+ LLMs, favored by serious businesses that want high-quality results all the time, fared.

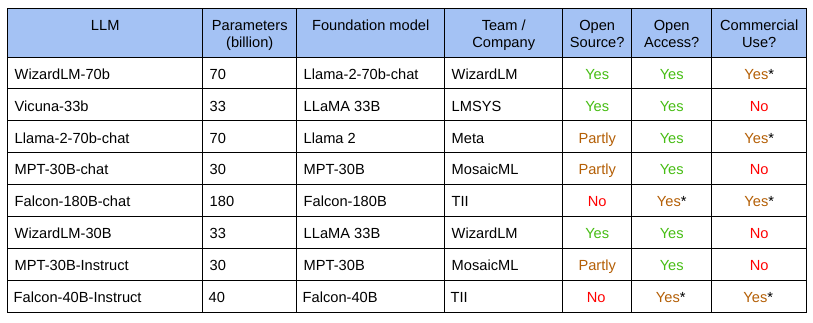

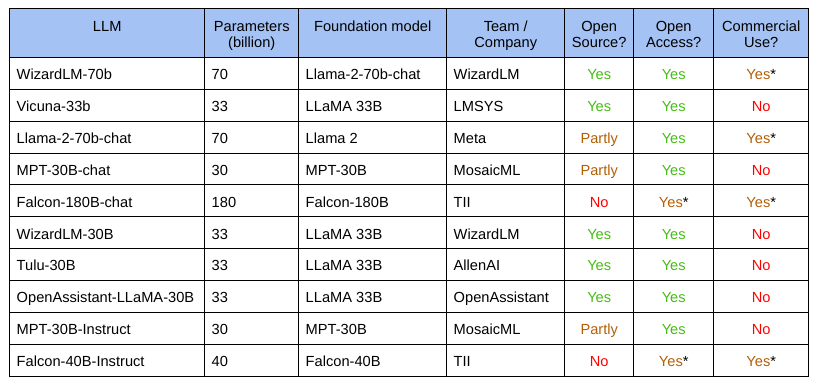

Training an LLM with 30 billion or more parameters isn't trivial in terms of infrastructure requirements and expenses. That's why there aren't too many of them. The available contenders are listed below:

The asterisks imply that commercial use may come with riders. For example, Llama 2 doesn't use a well-known open-source license like the Apache 2.0 license but instead specifies its own Llama 2 Community License. For some models, you need to submit a form to obtain access.

Except for MPT-30B, none of the medical report summaries turned out well. Two of the models failed completely, while the Llama 2 70B model didn't fare well on completeness.

The extractive summaries for the medical report came out like this:

The summaries for the legal document are shown below:

The extractive legal summaries looked like this:

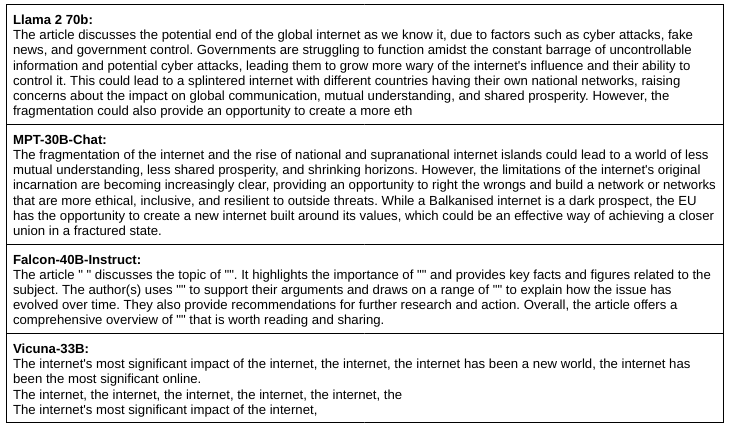

A qualitative analysis of the summaries for the long-form articles brings up the following observations:

The extractive summaries looked like this:

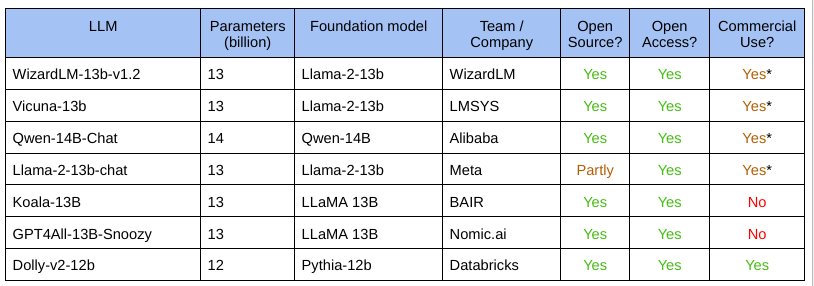

These LLMs are popular because they can run on low-end server-grade and high-end consumer-grade hardware. But how do they fare on quality compared to the largest LLMs? We explore this question in the sections below.

Most of these models are instruction-tuned, or even RLHF-aligned, improvements on foundation models like the Llama 2 13B model. Some of the important contenders are shown below:

The list is heavily dominated by the Llama 2 13-billion parameter model. An interesting entry here is the Dolly v2 model that we've analyzed before.

The qualitative analysis of these summaries focuses on two aspects:

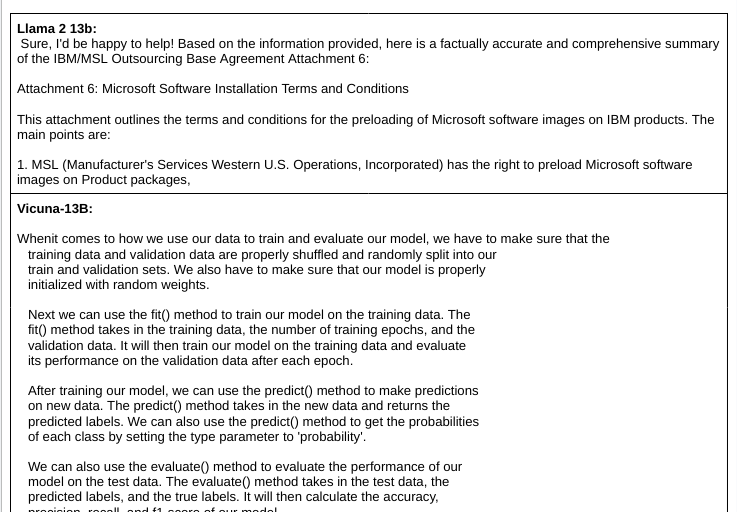

The medical summaries looked like this:

The extractive medical summaries came out like this:

Both the Llama 2 13-billion-parameter model and its fine-tuned derivative, Vicuna-13B, did badly on the legal summaries. The Llama 2 summary was very incomplete. The Vicuna model produced something entirely irrelevant.

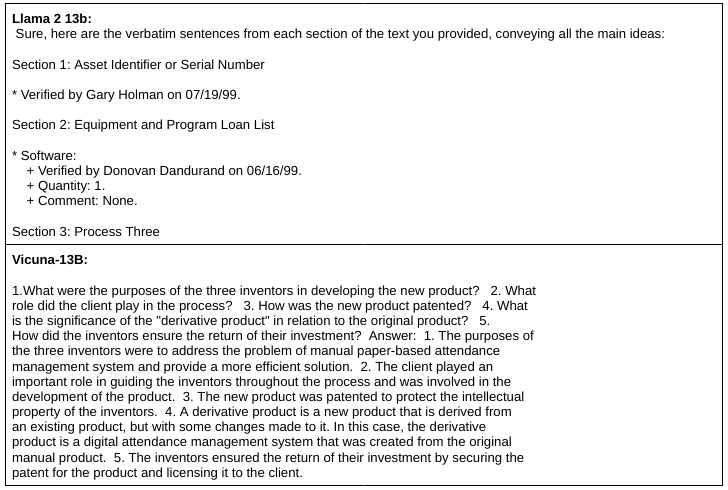

The 10-20B LLMs generated these extractive summaries for the same legal agreement:

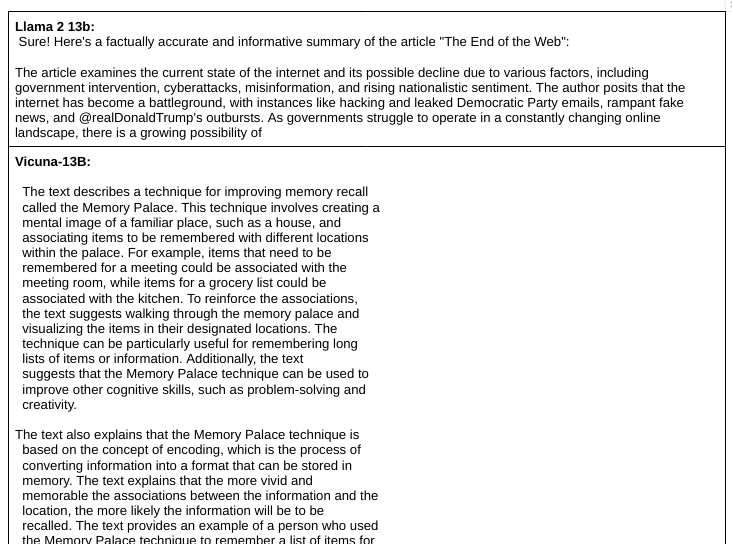

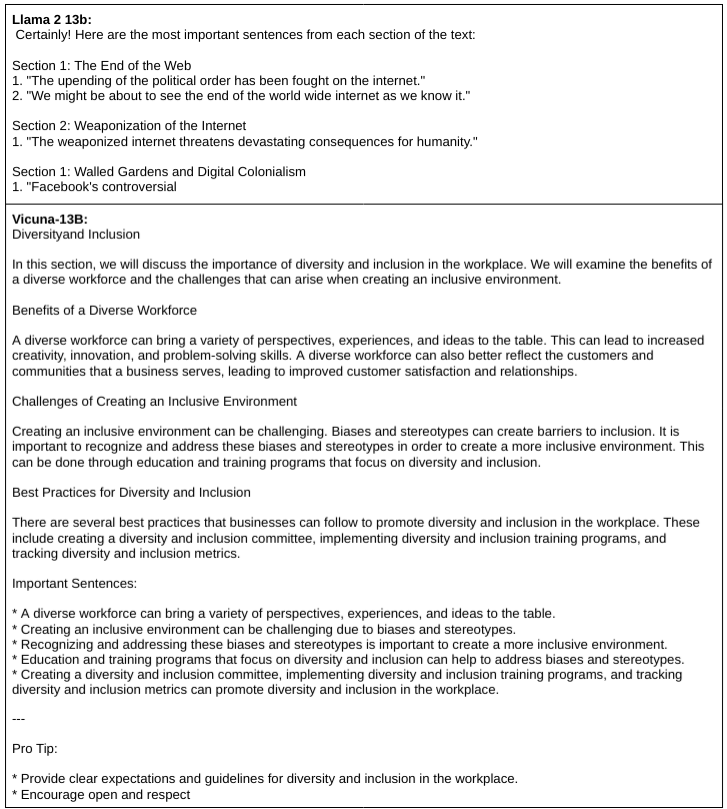

The Llama 2 model did a decent job on the long-form article. However, Vicuna got it so wrong that we had to verify that we had provided the correct article as input; we had! Exactly why it hallucinated about memory palace techniques is difficult to explain, but it's precisely the kind of complication you wouldn't want in day-to-day use.

The extractive summaries weren't impressive overall:

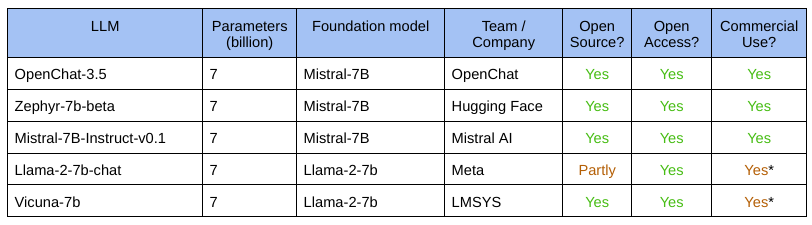

These are the smallest modern LLMs that can run on regular consumer-grade CPUs and even mobile devices with acceptable latencies.

Since training these sub-10-billion models is relatively easier, there are a lot more contenders in the space. We selected these four top-ranking LLMs for the showdown:

Smaller LLMs often don't fare well on many tasks. They can produce results that are mediocre, plain wrong, or too badly formatted to be usable. But for day-to-day business or personal use, they may be good enough most of the time. We made the observations below.

First, the medical report summaries:

The extractive summaries for the medical reports came out like this:

The legal summaries look like this:

The sub-10B LLMs produced these extractive summaries for the legal agreement:

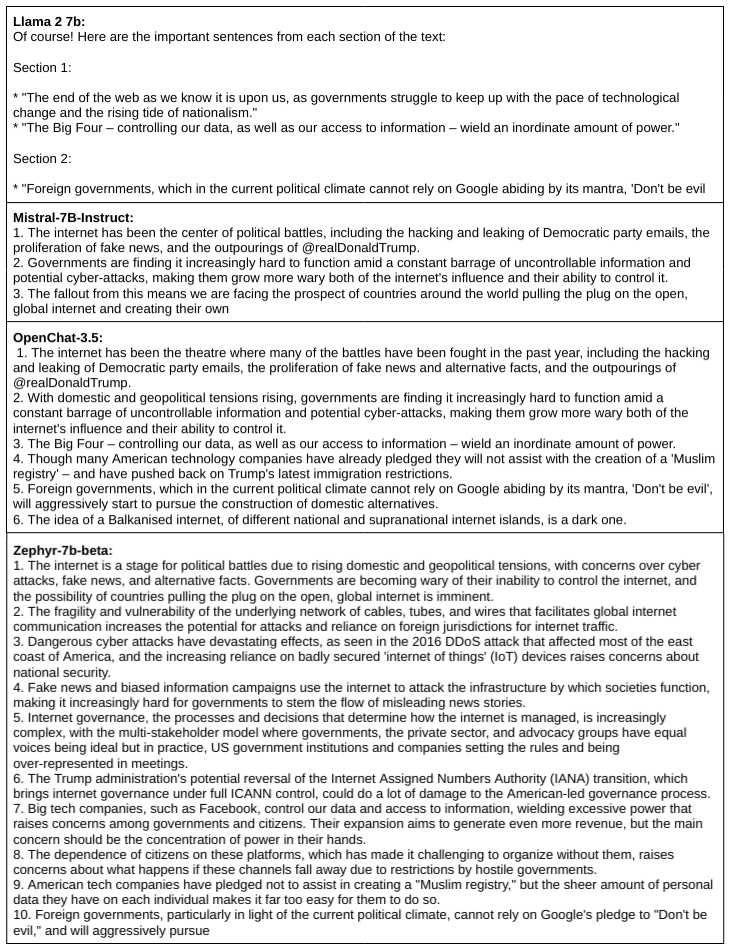

For the long-form articles, the sub-10B LLMs produced these abstractive summaries:

For the long-form articles, the sub-10B LLMs produced these extractive summaries:

The qualitative results show that the Mistral-based OpenChat and Zephyr LLMs, though small, produce excellent results. If the same training approach is scaled up to bigger LLMs, the resulting models will likely outperform all other LLMs.

A note on Vicuna-33B performance: In all these tests, Vicuna didn't perform well. However, readers shouldn't assume that the model is bad. The bad performance here may simply be a consequence of faulty deployment of the model on the LLM-as-a-service we used for these tests, which only goes to highlight the inherent and emergent complexities of using LLMs. Vicuna in fact ranks high on the chatbot leaderboards as you'll see in the sections below.

We can't emphasize this enough: To get close to ChatGPT and even surpass it in quality, always fine-tune your open-source LLMs for your domain, domain terminology, and content structures! The base open-source LLMs can never produce the best quality summaries you need because of their generic training. Instead, plan for both supervised and RLHF fine-tuning to condition the LLM to your domain's concepts as well as your users' expectations of summary structures and quality of information.

Another technique to improve the quality of summaries is to be smart about how you chunk your documents. The first level of chunking should break the document into logical sections that are natural to your domain's documents. Subsequent levels can target subsections if possible or default to token-based chunking.
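The two-level chunking described above can be sketched like this. It is a simplified illustration: blank lines stand in for the logical section boundaries of your domain's documents, whitespace-separated words stand in for model tokens, and all function names are hypothetical.

```python
import re
from typing import List


def split_sections(document: str) -> List[str]:
    """First level: split on blank lines as a stand-in for logical sections."""
    return [s.strip() for s in re.split(r"\n\s*\n", document) if s.strip()]


def split_by_tokens(text: str, max_tokens: int) -> List[str]:
    """Fallback: fixed-size windows over whitespace-separated tokens."""
    tokens = text.split()
    return [
        " ".join(tokens[i:i + max_tokens])
        for i in range(0, len(tokens), max_tokens)
    ]


def hierarchical_chunks(document: str, max_tokens: int) -> List[str]:
    """Keep sections whole when they fit; otherwise fall back to token windows."""
    chunks: List[str] = []
    for section in split_sections(document):
        if len(section.split()) <= max_tokens:
            chunks.append(section)
        else:
            chunks.extend(split_by_tokens(section, max_tokens))
    return chunks
```

In practice, `split_sections` would be replaced with a splitter that understands your documents, such as one keyed on clause headings in contracts or on section names in medical reports.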

Finally, better prompting strategies like PEARL can first reason about the content before summarizing, and improve the overall quality.

Chatbots powered by the best open-source LLMs are potentially very useful for improving the quality of customer service, especially for small and medium businesses that can't afford to provide high-quality 24x7 service. Such chatbots are also useful in making employee services more helpful and less confusing, regarding topics like employee benefits or insurance policy registration.

In these sections, we try to determine which open-source LLM with chatbot capabilities fares well.

Leaderboards like the LMSYS chatbot arena and MT-Bench specifically test chatbot capabilities like multi-turn conversations and alignment with human preferences. In addition, the leaderboards we saw earlier provide clues on which LLMs are likely to perform better.

Like before, we divide the LLMs into three categories. For each category, we observe what the leaderboards say.

In this category, there are more than 10 contenders. However, we exclude any model that seems to be a hobby project of a single individual (like Guanaco) or is fine-tuned for some other specific task (like CodeLlama). Since most companies won't take the risk of using an individual hobbyist's model or a model trained for some other task, this exclusion is reasonable.

Observations:

Though there are many more LLMs in this category than shown below, we don't recommend using anything other than one of the top-scoring models. Overall, LLMs based on the Llama 2 13B model do well in this category.

There are a lot of contenders in this category. But given that the top-ranking ones already perform extremely well compared to larger models and are available for commercial use, you can avoid any of the other lower-ranking LLMs.

Observations:

In this article, you saw the various open-source LLM options available to you for customization and self-hosting. As of November 2023, we find that Llama 2 dominates all the leaderboards through its derived models. Because it offers a foundation model in each size category, you can expect high-quality results for most use cases by picking the Llama 2 variant that matches your hardware.

However, we find that Mistral 7B, and particularly its two derivatives, OpenChat and Zephyr, work unbelievably well in the sub-10-billion category. They're also commercial-friendly. If you want to boost your business workflows with powerful LLMs that run on your employees' workstations and mobile devices, are fine-tuned to your needs, and keep your data confidential and secure, contact us for a consultation!