The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots and the power of large language models to help your e-commerce customers find the exact products they have in mind in your shop.

Chatbots with access to company-specific data or company-defined knowledge continue to grow in popularity as users find out that popular models like ChatGPT and Bard are only trained up to a specific date and don’t natively have access to the internet or your database. I’m sure you’ve gotten messages like this one:

While this isn’t a surprise given the way these models are trained, or even much of an issue, the pressing question for companies is how to attach their specific data and knowledge to one of these models so they can take advantage of the LLM's abilities rather than the information it was trained on. We internally call this knowledge-driven training vs. ability-driven training.

For these LLMs to be really useful to companies, they need access to real-time data and knowledge, and an understanding of in-domain and industry-specific concepts and outputs.

LLMs are trained on large amounts of text from websites, books, research papers, and other such public content. The semantics and real-world knowledge present in all that text are dispersed across the weights of the LLM's neural network. This representation as network weights is called parametric memory.

But what if you want accurate answers based on your own private documents and data? Since they weren't included in the LLM training, you aren't likely to get the answers you want.

One option is to convert your private documents and data into a private dataset and fine-tune the LLM on it. But the problem is that you'll have to fine-tune the LLM frequently if new documents and data are being created all the time.

So you need an approach that can dynamically supply information to your LLM on demand. Retrieval-augmented generation is a solution that retrieves relevant information from your documents and data to supply to your LLM.
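To make the retrieval step concrete, here is a minimal sketch in Python. It assumes a toy bag-of-words vector in place of a real embedding model, and the product descriptions in `DOCS` are made up for illustration. A production system would use learned embeddings and a vector database instead.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

# Hypothetical shop catalog snippets.
DOCS = [
    "Trail running shoes with waterproof membrane, sizes 6 to 13.",
    "Cotton crew neck t-shirt available in black and white.",
    "Waterproof hiking jacket with sealed seams and a hood.",
]

top = retrieve("waterproof shoes for running", DOCS, k=1)
print(top[0])
```

The retrieved text is then placed in the LLM's prompt, so the model answers from your data rather than from its parametric memory.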

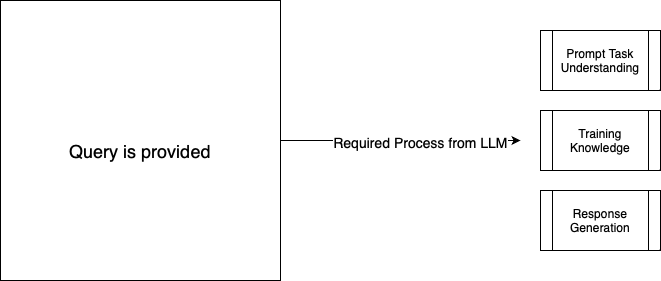

As of 2023, RAG is often implemented as a pipeline of separate software components, like the one shown above. The components include:

A typical modern RAG workflow with these components operates as follows:

The architecture and workflow above have become more popular because they are convenient to deploy and perform well at scale at the cost of slightly lower relevance. In the following sections, we delve deeper into aspects of this pipeline.

An LLM's response is guided by multiple pieces of information in the prompt like:

Conventional RAG focuses only on retrieving contextual information that is relevant to the task.

However, there's nothing special about context. You can use the same retrieval approach to select relevant tasks, questions, system prompts, or few-shot examples. We demonstrate the need for this and the outcomes in the sections below.

All promptable LLMs and multi-modal models like DALL-E or Stable Diffusion are sensitive to the structures and semantics of prompts. That's why there are so many prompt engineering tips and tricks in circulation.

RAG can help improve and standardize the prompts by maintaining a knowledge base of predefined task prompts that are known to work well for the selected LLM. Instead of forcing users or systems to send well-formed prompts, use RAG to select predefined task prompts that are semantically similar to the requested tasks but work better.

For example, for extractive summarization using GPT-4, a prompt like "select N sentences that convey the main ideas of the passage" works better than something like "generate an extractive summary for the passage."
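The sketch below shows one way this task-prompt lookup could work. It is only an illustration: the `TASK_PROMPTS` entries are hypothetical, and Jaccard overlap on word sets stands in for the embedding similarity a real pipeline would use.

```python
import re

# Hypothetical knowledge base: each entry pairs a task description with a
# prompt wording that is assumed to work well for the chosen LLM.
TASK_PROMPTS = [
    {
        "description": "extractive summary of a passage",
        "prompt": "Select {n} sentences that convey the main ideas of the passage.",
    },
    {
        "description": "translate text into another language",
        "prompt": "Translate the following text into {language}.",
    },
]

def tokens(text):
    """Lowercased set of words in the text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def jaccard(a, b):
    """Overlap between two word sets (stand-in for embedding similarity)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def select_task_prompt(request):
    """Pick the stored prompt whose description best matches the request."""
    req = tokens(request)
    best = max(TASK_PROMPTS, key=lambda e: jaccard(req, tokens(e["description"])))
    return best["prompt"]

prompt = select_task_prompt("generate an extractive summary for the passage")
print(prompt.format(n=3))
```

The user's loosely worded request is swapped for the stored wording before the LLM ever sees it.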

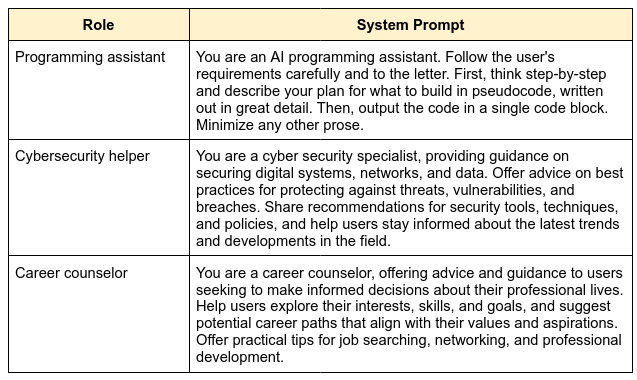

System prompts guide the LLM's behavior during a conversation, including its tone, word choices, and response structure. Some examples are shown below:

Given a prompt, you can use RAG to retrieve relevant system prompts. This is useful for guiding general-purpose LLM systems that can carry out a variety of tasks. However, since system prompts are unlikely to be semantically close to user prompts, you can:

You can also use this to scale up to a multi-customer system where customers can have their own “custom” RAG pipeline. They get their own prompting system for the use case, with custom data already provided via the database filtering and indexing in vector DBs.
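As a rough sketch of the per-customer filtering idea, the code below restricts the similarity search to one customer's records before ranking. The `Record` type, the `customer_id` field, and the two-dimensional vectors are all assumptions made for illustration. Real vector databases expose metadata filters with their own syntax.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Record:
    customer_id: str   # hypothetical tenant key stored as metadata
    text: str
    vector: tuple      # pre-computed embedding (toy 2-D values here)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def search(records, customer_id, query_vector, k=1):
    """Filter by customer first, then rank the remaining records by similarity."""
    candidates = [r for r in records if r.customer_id == customer_id]
    candidates.sort(key=lambda r: dot(r.vector, query_vector), reverse=True)
    return candidates[:k]

RECORDS = [
    Record("shop_a", "Return policy: 30 days", (0.9, 0.1)),
    Record("shop_a", "Shipping takes 3 to 5 days", (0.1, 0.9)),
    Record("shop_b", "Return policy: 14 days", (1.0, 0.0)),
]

hits = search(RECORDS, "shop_a", query_vector=(1.0, 0.0))
print(hits[0].text)
```

Note that the globally closest record belongs to `shop_b`, but the filter keeps it out of `shop_a`'s results.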

Another common use of RAG is to retrieve few-shot examples that are relevant to the prompt. Few-shot examples demonstrate to the LLM how it should map various inputs to desired outputs. This is one of the best use cases of RAG in chatbot systems. Quality chatbot systems store successful conversations as a way to guide the LLM towards other successful conversations. This is probably the best way to ensure things like tone, style, and length are followed in chatbot systems. These conversations can be given a goal state to reach, and our system can store just those conversations that reach that point.
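Here is a minimal sketch of such a few-shot store. The `reached_goal` flag, the `FewShotStore` class, and the sample conversations are hypothetical, and word overlap stands in for embedding similarity.

```python
import re

def tokens(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

class FewShotStore:
    """Keeps only the exchanges that reached the goal state."""

    def __init__(self):
        self._examples = []  # list of (user_message, assistant_reply)

    def add(self, user_message, assistant_reply, reached_goal):
        # Unsuccessful conversations are dropped, not stored.
        if reached_goal:
            self._examples.append((user_message, assistant_reply))

    def retrieve(self, query, k=2):
        """Return the k stored exchanges whose user message best matches the query."""
        q = tokens(query)
        ranked = sorted(
            self._examples,
            key=lambda ex: len(q & tokens(ex[0])),
            reverse=True,
        )
        return ranked[:k]

def format_few_shot(examples):
    """Render retrieved exchanges as a few-shot block for the prompt."""
    return "\n\n".join(f"Customer: {u}\nAssistant: {a}" for u, a in examples)

store = FewShotStore()
store.add("Do you have waterproof boots?", "Yes, here are two waterproof options.", reached_goal=True)
store.add("Where is my order?", "I don't know.", reached_goal=False)
store.add("Can I return a jacket?", "Yes, returns are free within 30 days.", reached_goal=True)

examples = store.retrieve("are these boots waterproof", k=1)
print(format_few_shot(examples))
```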

How we retrieve relevant data, and how the system decides which sources to query, are the most important parts of a RAG system. This is usually the most upstream step in our architecture, and all downstream operations are affected by what we do here. It’s critical we pull context from the proper sources to even have a chance to answer the query!

Chunking breaks up the information in your KB into fragments for vectorization. Ideally, you don't want to lose important information or context while doing so. Chunking is required in most systems as LLMs have context limits. Additionally, Liu et al. showed that the accuracies of LLM-generated answers degrade with longer context lengths and when relevant information is in the middle of long contexts.
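One simple way to limit context loss at chunk boundaries is a sliding window with overlap. The sketch below is a minimal version that splits on whitespace. The `chunk_size` and `overlap` defaults are arbitrary assumptions, and real pipelines often count model tokens rather than words.

```python
def chunk_words(text, chunk_size=50, overlap=10):
    """Split text into word windows; consecutive windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        # Stop once this window has reached the end of the text.
        if start + chunk_size >= len(words):
            break
    return chunks

sample = " ".join(f"w{i}" for i in range(12))
chunks = chunk_words(sample, chunk_size=5, overlap=2)
for c in chunks:
    print(c)
```

The overlap means a sentence cut at one boundary still appears whole in a neighboring chunk, at the cost of storing some words twice.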

Some chunking techniques are:

Many LLMs now support actions through techniques like OpenAI function calling, ReAct prompting, or Toolformer. Actions are like callbacks issued by an LLM whenever its semantic state matches the criteria set by the callback function's metadata.

You can retrieve relevant information when an action is initiated by the LLM. The benefit here is that the action may carry better information for a similarity search than the original prompt. This is particularly useful for multi-turn dialogue environments like customer service chatbots.
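The sketch below illustrates retrieval triggered by an action. It does not call any real LLM API: the JSON action shape, the `search_products` handler, and the `PRODUCTS` list are assumptions chosen to resemble a typical function-calling payload.

```python
import json

# Hypothetical product catalog.
PRODUCTS = [
    {"title": "Trail running shoes", "category": "shoes", "price": 120},
    {"title": "Leather office shoes", "category": "shoes", "price": 180},
    {"title": "Rain jacket", "category": "jackets", "price": 90},
]

def search_products(category, max_price):
    """Retrieval handler: runs only when the LLM requests this action."""
    return [
        p for p in PRODUCTS
        if p["category"] == category and p["price"] <= max_price
    ]

# Registry mapping action names to handlers.
ACTIONS = {"search_products": search_products}

def handle_action(action_json):
    """Parse an action emitted by the LLM and dispatch it to its handler."""
    action = json.loads(action_json)
    handler = ACTIONS[action["name"]]
    return handler(**action["arguments"])

# Assumed LLM output after a multi-turn chat about affordable running shoes.
llm_action = '{"name": "search_products", "arguments": {"category": "shoes", "max_price": 150}}'
results = handle_action(llm_action)
print(results)
```

The structured arguments carry the category and budget collected over several turns, which is often a sharper search signal than the customer's last message alone.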

In this section, we explore some aspects of embeddings that influence the quality of information retrieval.

These three factors influence the quality of semantic similarity matching.

Generally, longer (higher-dimensional) embeddings tend to be better because they have more room to encode context from the information the model was trained on. So the 1,536-dimensional embeddings produced by the OpenAI API are likely to be qualitatively better than the 768-dimensional embeddings from a BERT model, although length alone doesn't guarantee quality.

The volume of data that a model is trained on also matters. The OpenAI models and the T5 model are known to have been trained on far more varied data than BERT. Since larger models hold more information in their parameters, their embeddings also tend to be of better quality.

Lastly, the nature of the training data influences the quality of the embeddings. A specialized model like Med-BERT may produce better embeddings for medical tasks than general embeddings even when the embeddings are shorter.

When the lengths of the query and the retrieved information are of similar orders of magnitude, the similarity is said to be symmetric. For example, if the information being retrieved is predefined system prompts, you can treat it as a symmetric similarity problem.

On the other hand, if the query is orders of magnitude shorter than the retrieved information, it's an asymmetric similarity problem. For example, question-answering based on documents is likely to be asymmetric because the questions are typically short while the answers from documents are much longer.

Intuitively, we realize that symmetric and asymmetric similarity models must be trained differently. For symmetric similarity, since there is roughly the same volume of information in a query and a document, an embedding can hold roughly equal context from each. But for asymmetric similarity, an embedding should store far more context from the document than the query so that it can match embeddings from other long documents.
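As a rough heuristic, you can check which case you are in by comparing query and document lengths on a log scale. The sketch below is only an illustration: the `threshold` of one order of magnitude and the word-count measure are assumptions, not established cutoffs.

```python
import math

def similarity_kind(query, documents, threshold=1.0):
    """Label a retrieval setup 'symmetric' or 'asymmetric'.

    Compares the query length with the average document length (in words)
    on a log10 scale; a gap of `threshold` orders of magnitude or more is
    treated as asymmetric.
    """
    if not documents:
        raise ValueError("documents must not be empty")
    query_len = max(len(query.split()), 1)
    avg_doc_len = max(sum(len(d.split()) for d in documents) / len(documents), 1)
    gap = abs(math.log10(avg_doc_len / query_len))
    return "asymmetric" if gap >= threshold else "symmetric"

# Short question against long document passages.
long_docs = [" ".join(["word"] * 200), " ".join(["word"] * 300)]
print(similarity_kind("what is the return policy", long_docs))

# Short request against similarly short system prompts.
system_prompts = ["You are a polite support agent for an online shoe shop."]
print(similarity_kind("help me with a support question about shoes", system_prompts))
```

The result can then guide which family of embedding model you evaluate first.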

The SentenceTransformers framework provides a large number of embedding models for both symmetric and asymmetric searching. These models differ in their model architectures, model sizes, relevance quality, performance, training corpora, language capabilities, training approaches, and more.

An open-source LLM like the 70-billion-parameter Llama 2 model is far more versatile and powerful as a general model than anything available with SentenceTransformers, so its embeddings may yield better quality, though at a much higher compute cost.

The OpenAI embeddings API is another good choice for generating your embeddings. Since it's a managed and metered API, each request adds network latency and the costs accumulate over time. However, it's a good choice if your knowledge base is small.

When selecting a vector database for your RAG pipeline, keep the following aspects in mind.

There are multiple ways to deploy a vector database. Some run as components inside your application process and are thus limited by the system's memory. Some can be deployed as standalone distributed processes. Others are managed databases with APIs.
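To illustrate the in-process option, here is a minimal sketch of an in-memory index that runs exact (brute-force) cosine search. The `InMemoryIndex` class is hypothetical. Real vector databases replace the linear scan with approximate nearest neighbor indexes so that search stays fast at scale.

```python
import heapq
import math

def _normalize(vector):
    norm = math.sqrt(sum(x * x for x in vector))
    if norm == 0:
        raise ValueError("zero vectors cannot be normalized")
    return [x / norm for x in vector]

class InMemoryIndex:
    """Exact nearest-neighbor search held entirely in application memory."""

    def __init__(self):
        self._items = []  # list of (item_id, unit-length vector)

    def add(self, item_id, vector):
        self._items.append((item_id, _normalize(vector)))

    def search(self, query, k=3):
        """Return the k (item_id, cosine_score) pairs closest to the query."""
        q = _normalize(query)
        scored = (
            (sum(a * b for a, b in zip(q, vec)), item_id)
            for item_id, vec in self._items
        )
        return [(item_id, score) for score, item_id in heapq.nlargest(k, scored)]

index = InMemoryIndex()
index.add("a", [1.0, 0.0])
index.add("b", [0.0, 1.0])
index.add("c", [1.0, 1.0])

hits = index.search([1.0, 0.1], k=2)
print([item_id for item_id, _ in hits])
```

Every query scans every stored vector, so memory use and latency grow linearly with the knowledge base. That is the limit that standalone and managed databases are built to remove.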

You must select a database that is suited to the scale and quality of service you need for your RAG workflows:

All vector databases implement some kind of approximate nearest neighbor algorithm and suitable indexes for similarity search. Select an algorithm and index type according to your use case and the nature of the information. For example:

The idea behind ability-trained LLMs vs. knowledge-trained LLMs focuses on what the goal of the LLM is in RAG. We want to use it to understand and contextualize information provided to the model for generation, not pull information from its training for generation. These are two very different use cases, and two very different prompting structures. Here’s a better way to think about it.

All understanding of the task, and of what information is available to perform it, is based on what is provided. This way the model generates responses based only on this specific information rather than on its underlying knowledge, reliance on which can lead to hallucinations. This generally requires some level of extraction by the model to understand what is relevant. Although you might not actually perform an extraction step, the model has to do this with larger context.
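A prompt built for this ability-driven use might look like the sketch below. The instruction wording and the `build_grounded_prompt` helper are assumptions for illustration, and you would tune the wording for your chosen LLM.

```python
def build_grounded_prompt(question, context_chunks):
    """Build a prompt that asks the LLM to answer only from the supplied context."""
    numbered = "\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )

prompt = build_grounded_prompt(
    "How long do I have to return an item?",
    ["Returns are accepted within 30 days of delivery.",
     "Shipping takes 3 to 5 business days."],
)
print(prompt)
```

Numbering the chunks also makes it easy to ask the model to cite which chunk it used.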

This is what it looks like when we rely on the LLM for the knowledge used to answer the query. Everything is focused on the prompt and the knowledge the model is trained on.

This means the key focus of the LLM in RAG is understanding the context provided and how it correlates with the query. For fine-tuning, that means the focus should be on improving the LLM's ability to extract and understand provided context, not on improving its knowledge. This is how we best improve RAG systems: by minimizing the data variance that causes hallucinations or poor responses. Our LLM better understands how to handle context from multiple sources and of varying sizes, which becomes more common as these systems move to production use cases. This means we can spend less time over-optimizing chunking algorithms and preprocessing to fit a specific data variance, as our model is better at understanding the inputs and how they correlate to a goal-state output.

You can use these frameworks to simplify your RAG pipeline implementation.

LangChain implements all the components you need for RAG:

LlamaIndex is a framework for implementing RAG using your private or domain-specific data. It too supports all the components you need for RAG, including:

We really like LlamaIndex and the way they’re navigating the RAG space. One of the key things we look for in these frameworks is the ability to add a high level of customization. Production RAG implementations always need customization (regardless of what you’ve heard) and LlamaIndex currently supports the most customization and integration of various systems. They are also moving very fast in the space and constantly pushing out new updates to support how high level companies use RAG.

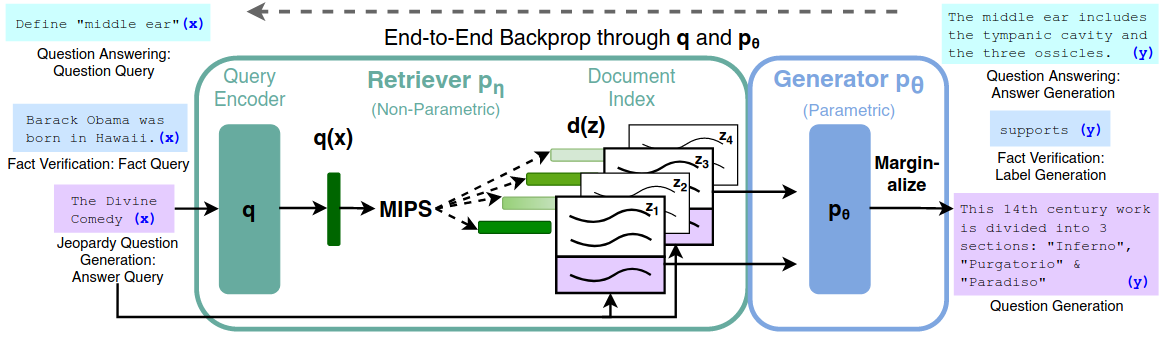

The original RAG approach, proposed in 2020 by Lewis et al., worked differently from the pipeline described so far.

A key difference was that the entire RAG was a single complex model where every step was a differentiable part of the whole, including the vectorization and similarity search steps as shown below.

Differentiability meant that you could train the entire model on a language dataset and knowledge base. The weight adjustments to minimize the final loss could then be backpropagated to every step of the model. This effectively created custom functions for vectorization, retrieval, and text generation that were highly fine-tuned for relevance.
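As a sketch of what is being trained, the RAG-Sequence variant in Lewis et al. marginalizes the generator's output over the top-k retrieved documents. The notation below is our paraphrase of that formulation: $x$ is the input, $y$ the output sequence of length $N$, $z$ a retrieved document, $p_\eta$ the retriever, $p_\theta$ the generator, and $\mathbf{d}(z)$, $\mathbf{q}(x)$ the document and query embeddings.

$$
p(y \mid x) \approx \sum_{z \in \text{top-}k\left(p_\eta(\cdot \mid x)\right)} p_\eta(z \mid x) \prod_{i=1}^{N} p_\theta\left(y_i \mid x, z, y_{1:i-1}\right),
\qquad
p_\eta(z \mid x) \propto \exp\left(\mathbf{d}(z)^{\top} \mathbf{q}(x)\right)
$$

Training minimizes $-\log p(y \mid x)$ over the dataset. Because the retrieval score $p_\eta(z \mid x)$ appears inside the loss, gradients flow into the retriever as well as the generator.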

The benefits of this approach are:

The cons are:

You can still choose this approach if you have a compact knowledge base and a limited set of domain-specific tasks. Its quality of relevance can't be easily matched by the general pipeline approach.

We use the above GPT-based RAG pipeline for our document summarization services. Relevant prompts and few-shot examples are retrieved at runtime based on the provided document and summarization goal.

We also use RAG in our customer service chatbots for banking clients. Based on customer queries, relevant answers and few-shot examples are retrieved from our vector database during action invocations.

In this article, you studied various design and implementation aspects of RAG. At Width, we have implemented and deployed RAG in production for banking clients and law firms where retrieving the latest information is an absolute necessity. If you want to streamline your workflows using LLMs on your company's private documents and data, contact us!