The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots and the power of large language models to help your e-commerce customers find the exact products they have in mind in your shop.

You have probably heard of OpenAI's GPT-3 and ChatGPT by now. They're at the heart of all the news about artificial intelligence (AI) becoming sentient and taking over everyone's job. They're quite amazing, from generating poems on the fly to spitting out software code. But, being cloud-based services, they have disadvantages that make them impractical for some businesses and industries.

Imagine the possibilities for your business if you could, instead, use your own private AI that compares well to GPT-3 on some tasks and is completely under your control. In this article, you'll learn about this versatile AI model and how to use it for language tasks like document summarization.

Summarization produces a shorter version of a document, called a summary, that ideally shows the following characteristics:

In natural language processing (NLP), we use two types of summarization:

In the real world, often both are used together. You may want abstractive summarization for some sections of the document and extractive summarization for others.

In the next section, you'll learn about a deep neural network that can do abstractive summarization.

BART stands for Bidirectional and Auto-Regressive Transformers, a reference to its neural network architecture. BART proposes an architecture and pre-training strategy that make it useful as a sequence-to-sequence model (seq2seq model) for any NLP task, like summarization, machine translation, categorizing input text sentences, or question answering under real-world conditions. In this article, we'll focus on its summarization capabilities.

BART is just a standard encoder-decoder transformer model. Its power comes from three ideas:

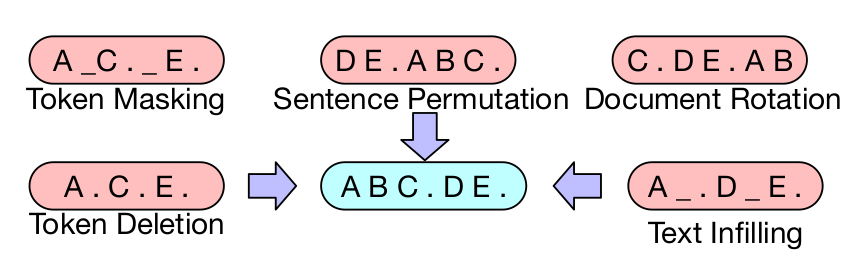

During pre-training, BART learns a language model that contains all the complexities and nuances of a real-world natural language. By deliberately introducing all kinds of noise, deletions, and modifications in its input data to make it difficult to learn, BART gains the ability to generate linguistically correct sequences even when input text is noisy, erroneous, or missing.
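To make the noising idea concrete, here is a minimal sketch of four corruptions described in the BART paper: token masking, token deletion, text infilling, and sentence permutation. The function names, the `<mask>` string, and the fixed span length are our own simplifications for illustration (the paper samples span lengths randomly); this is not the actual pre-training code.

```python
import random

MASK = "<mask>"  # placeholder symbol; the real tokenizer defines its own mask token


def token_mask(tokens, prob, rng):
    """Replace each token with MASK with probability `prob`."""
    return [MASK if rng.random() < prob else tok for tok in tokens]


def token_delete(tokens, prob, rng):
    """Drop each token with probability `prob`; positions of the gaps are not marked."""
    return [tok for tok in tokens if rng.random() >= prob]


def text_infill(tokens, start, length):
    """Replace the span tokens[start:start+length] with a single MASK.

    Simplification: the span is chosen by the caller instead of being sampled.
    """
    return tokens[:start] + [MASK] + tokens[start + length:]


def sentence_permute(sentences, rng):
    """Return the sentences in a shuffled order, leaving the input untouched."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = "the quick brown fox jumps over the lazy dog".split()
    print(token_mask(tokens, 0.3, rng))
    print(token_delete(tokens, 0.3, rng))
    print(text_infill(tokens, start=2, length=3))
    print(sentence_permute(["First.", "Second.", "Third."], rng))
```

During pre-training, the model sees the corrupted sequence as input and is trained to reconstruct the original, uncorrupted text.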

This denoising language model is versatile and can be adapted to any downstream text generation or understanding task, such as summarization, question answering, machine translation, or text classification.

To learn to summarize well, a pre-trained BART model is fine-tuned on a summarization dataset. Such a dataset consists of pairs of input documents and their manually written summaries, often created by subject matter experts who have a clear idea of the ideal “goal state” summary for the use case. Summary quality is often considered “relative” because what makes a good summary depends mostly on the user and the information they expect to see in it.

Fine-tuning refines the pre-trained language model's network weights to learn summarization-specific concepts like paraphrasing, saliency, generalization, hypernymy, and more. A fine-tuned BART model has learned the underlying relationships between various patterns in the input text and the goal output text.

How does the BART summarization model compare to the other summarization models out there? Research groups still compare these models using the older Recall-Oriented Understudy for Gisting Evaluation (ROUGE) metrics. But ROUGE counts the words and n-grams shared between the generated and reference summaries: the more there are, the higher the score. Since abstractive models paraphrase the text, they may score poorly even when their summaries are good, and high scores do not guarantee good summaries under real-world conditions.
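The n-gram overlap behind ROUGE is easy to sketch. The following is a simplified ROUGE-N on whitespace tokens, without the stemming and other preprocessing that standard ROUGE tooling applies; the function names and example sentences are ours, chosen only to show how a faithful paraphrase can score far below a verbatim copy.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(candidate, reference, n=1):
    """Simplified ROUGE-N: clipped n-gram overlap between two strings."""
    cand = ngrams(candidate.lower().split(), n)
    ref = ngrams(reference.lower().split(), n)
    overlap = sum((cand & ref).values())  # Counter intersection clips repeated n-grams
    recall = overlap / max(sum(ref.values()), 1)
    precision = overlap / max(sum(cand.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    reference = "the cat sat on the mat"
    print(rouge_n("the cat sat on the mat", reference))    # verbatim copy
    print(rouge_n("a feline rested on a rug", reference))  # paraphrase, same meaning
```

The paraphrase shares only the word "on" with the reference, so its ROUGE-1 recall is 1/6 even though a human reader would accept it as equivalent.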

So, we compared these models qualitatively by reviewing their summaries, as readers in the real world are likely to. Human review of this kind remains a common way to evaluate natural language generation. We evaluated them in four industries where long-form texts are common and summaries are useful:

We also evaluated them on longer documents like research papers and books, a real-world phenomenon seen in all four industries.

We evaluated 12 models: four state-of-the-art (SOTA) abstractive models that can be run locally, four SOTA extractive models that can be run locally, and the four famous GPT-3 models running on OpenAI's cloud. Here are the 12:

All eight local models were run on Kaggle's CPUs without any GPUs. For the four GPT-3 models, the prompt was "Summarize this in 4 sentences:" followed by the document text.

In the sections below, we analyze the summaries generated by these models in each industry.

We started with a relatively simple use case of summarizing essays. Both students and teachers may use such a feature to improve their understanding and writing. It's useful outside the education sector too — to summarize news articles, for example.

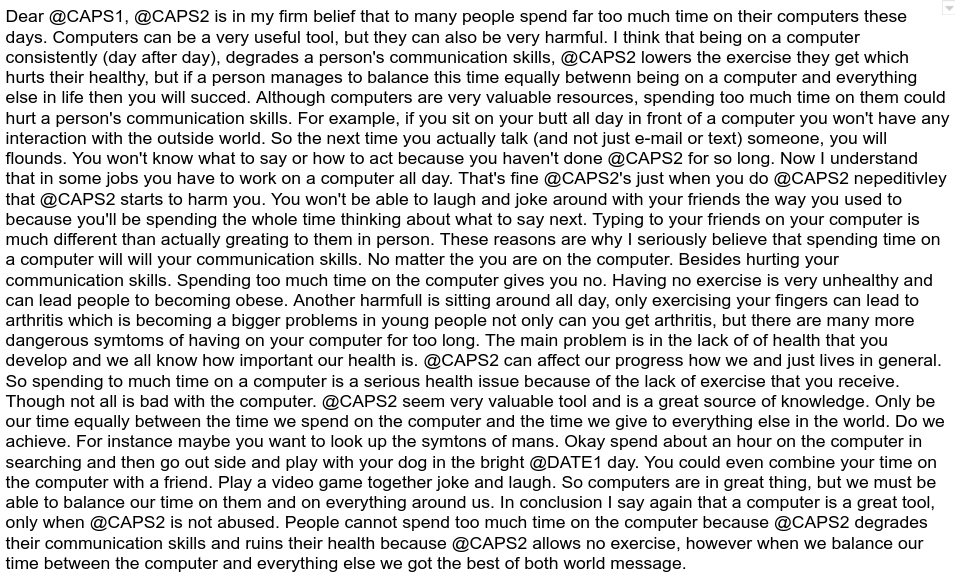

We evaluated the models on this student essay from Kaggle's automated essay scoring dataset:

As you can see, the essay has some spelling mistakes, grammar errors, and structural weaknesses. We can consider it a rather noisy input, the kind that's quite common in the real world. The student has used the first-person point of view.

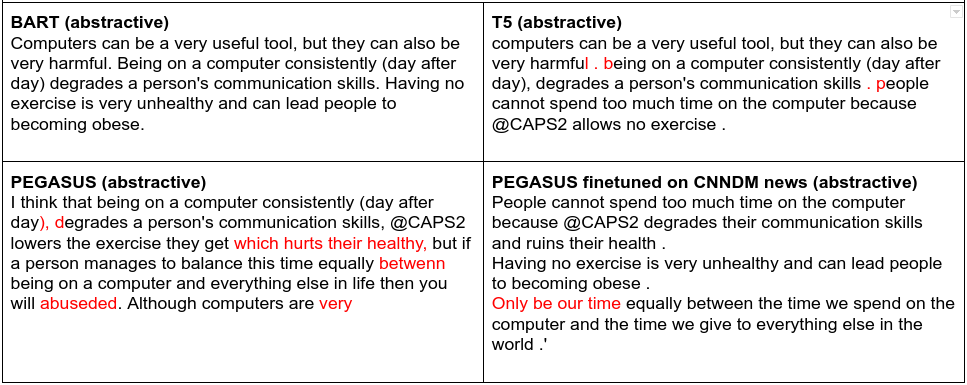

How do the models fare?

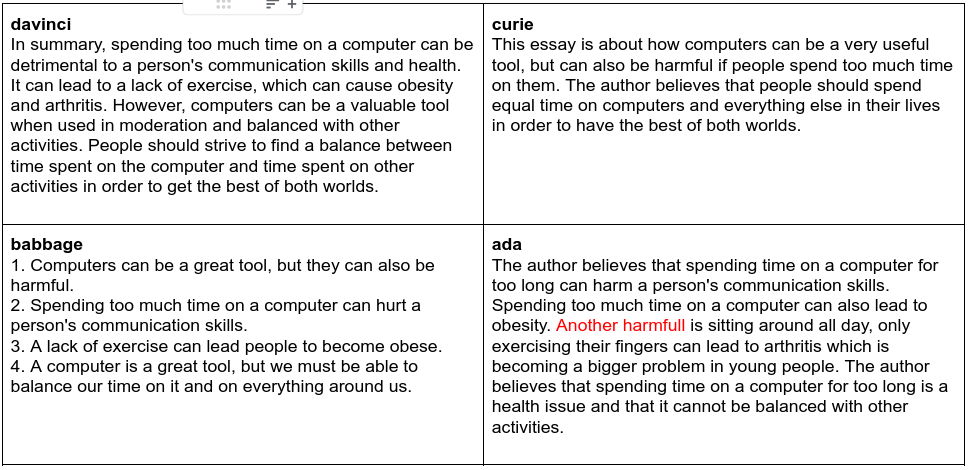

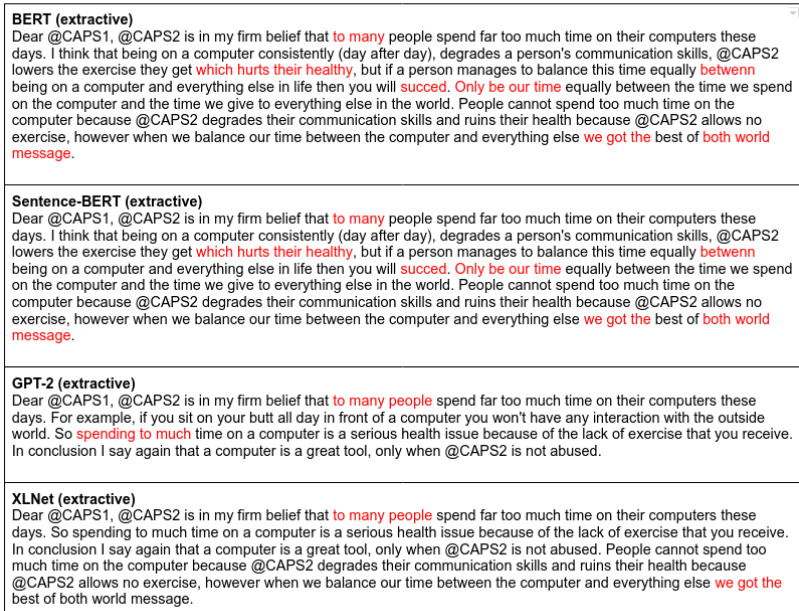

The four GPT-3 abstractive models fare well, with all of them changing the perspective to the third person and getting rid of the spurious text:

The four extractive models, though SOTA, retain too many errors and spurious text to be useful in practice. Use them only if your documents are of good quality.

From simple general essays, we move to the highly specialized domain of medical reports. Their summaries are useful to doctors, nurses, researchers, and insurers.

We use a medical report from the Kaggle medical transcriptions dataset as the input document.

The report contains multiple sections that may be important to different medical specialists. An ideal summary must retain all the sections and produce a summary per section. However, due to limits imposed by the models on the input lengths, we had to crop out the last 10% or so of the input document. These limits are not inherent and can be increased by retraining the models.

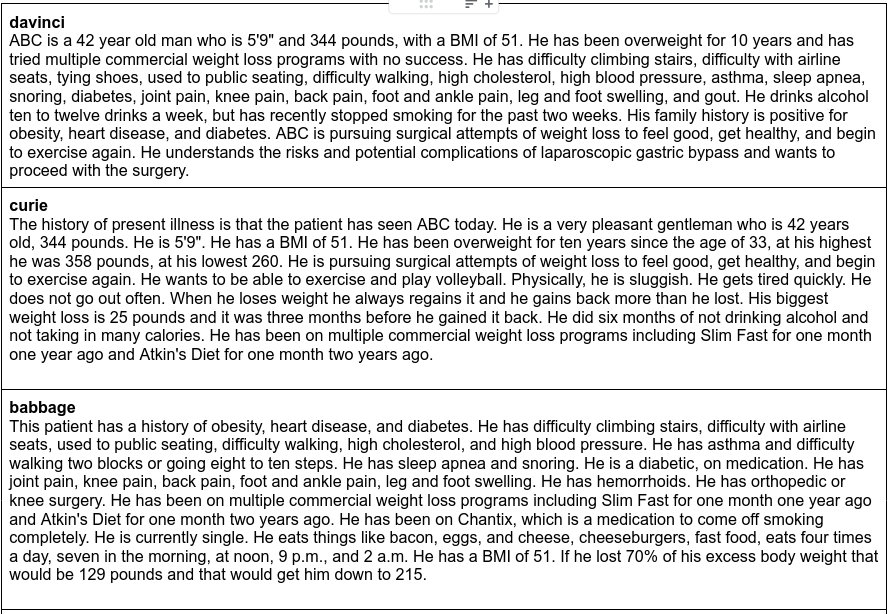

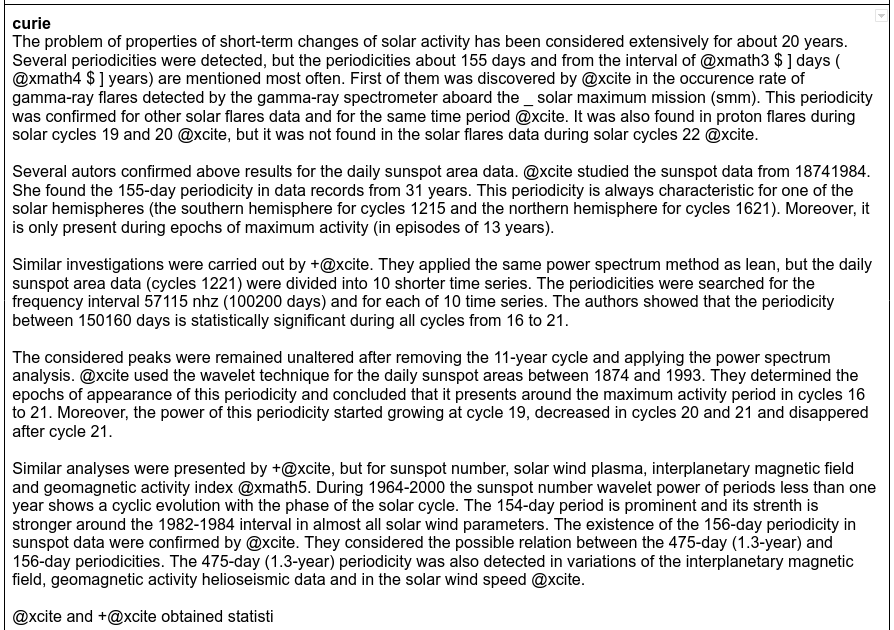

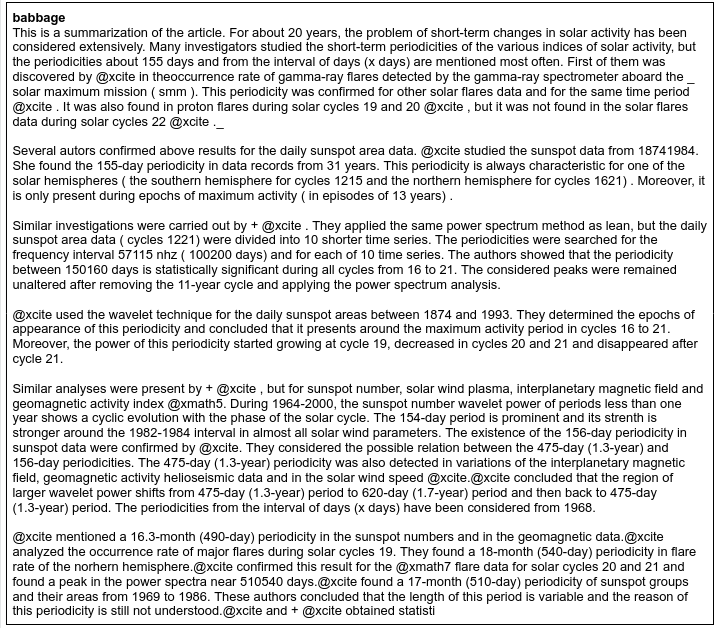

The first three GPT-3 models (Davinci, Curie, and Babbage) retain and summarize many of the relevant details, along with some irrelevant ones, though they discard the sections. Considering the size difference between these models, it's interesting how similar their outputs are.

In contrast, the Ada model reproduces all the sections and also summarizes each one to some extent, but it does feel like it went off-track and retained too much.

The four extractive models discard too many details. But this is not inherent and can be overcome by increasing parameters like the number of sentences to retain.
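To show what a "number of sentences to retain" parameter looks like, here is a minimal frequency-based extractive summarizer. It is a toy illustration under our own assumptions (regex sentence splitting, word-frequency scoring, no stop-word handling) and is not one of the SOTA extractive models we evaluated.

```python
import re
from collections import Counter

WORD_RE = re.compile(r"[a-z']+")


def extractive_summary(text, num_sentences=3):
    """Keep the `num_sentences` highest-scoring sentences, in original order."""
    # Naive sentence split on whitespace that follows ., ! or ?
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(WORD_RE.findall(text.lower()))

    def score(sentence):
        # Average document-level frequency of the words in the sentence
        words = WORD_RE.findall(sentence.lower())
        return sum(freq[w] for w in words) / max(len(words), 1)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:num_sentences])  # restore document order
    return " ".join(sentences[i] for i in keep)


if __name__ == "__main__":
    report = (
        "The patient reports chest pain. The pain started two days ago. "
        "The patient has no fever. Follow-up is scheduled next week."
    )
    print(extractive_summary(report, num_sentences=1))
    print(extractive_summary(report, num_sentences=3))
```

Raising `num_sentences` keeps more of the source, trading brevity for coverage, which is the adjustment described above.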

We now move to another specialized domain: legal documents like contracts, agreements, and clauses.

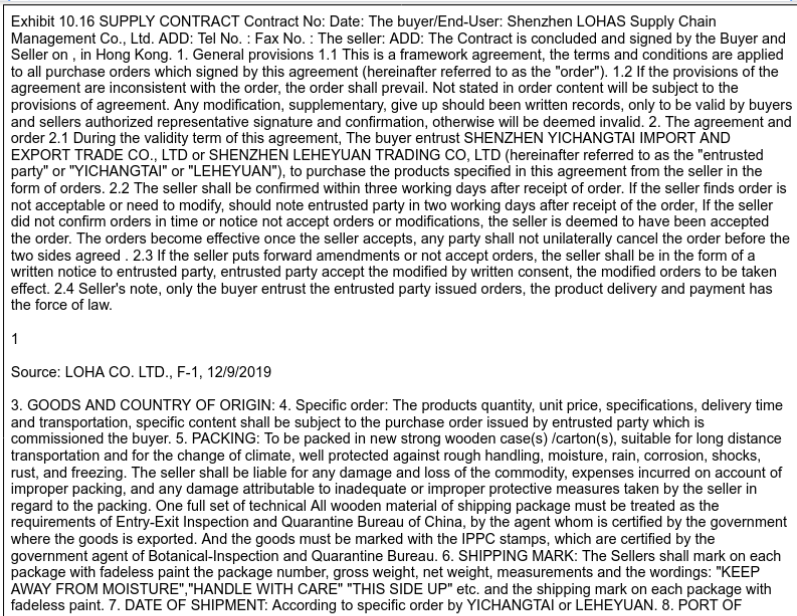

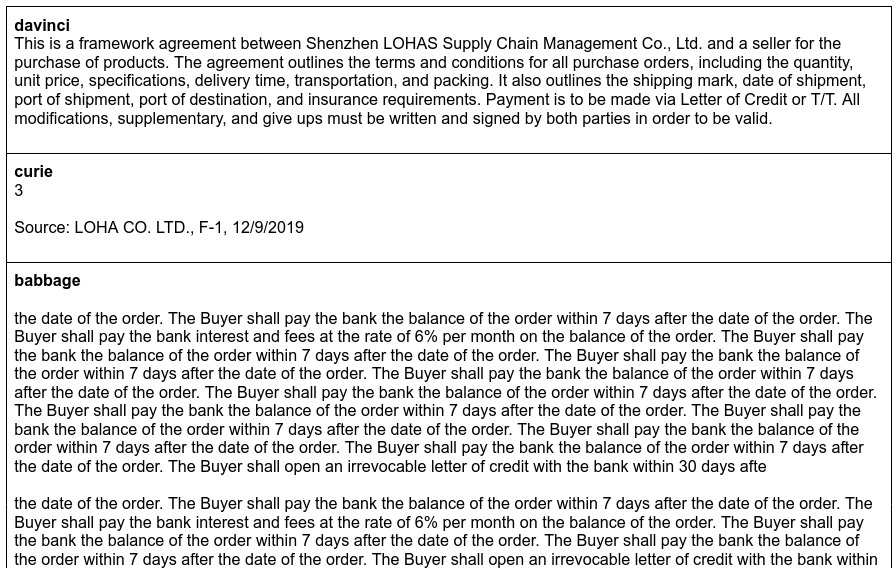

For our tests, we use a rather lengthy legal contract from the Contract Understanding Atticus Dataset (CUAD).

It has about 23 clauses covering all aspects of a business relationship. Again, due to model input limits (which can be raised), we had to crop the document to about 40% of its length.

The Davinci GPT-3 model produces a high-quality, abstract-like summary with agreement details. Babbage generates a rather extractive summary, while Curie fails on this document, possibly due to its odd format.

All four extractive models do well at telling us the signing parties, but the usefulness of the other details they retain is relative, as with most summarization tasks on information-dense documents.

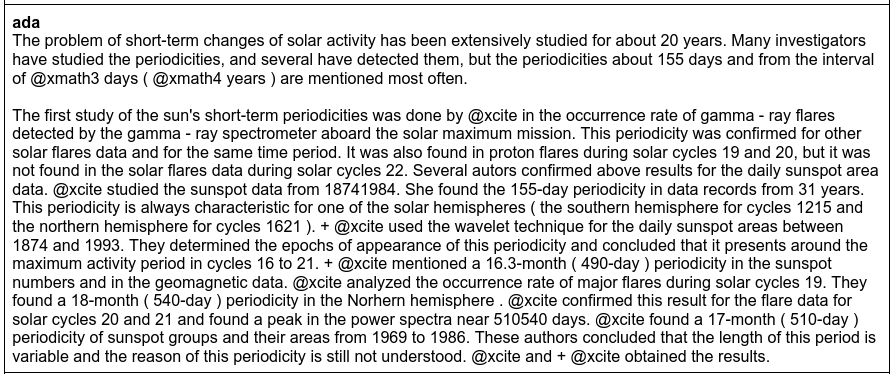

The final comparison is on the challenge of summarizing scientific research papers. We use an astronomy research paper from the arXiv summarization dataset as our test sample.

It's a lengthy document full of jargon, numbers, and noisy text like LaTeX symbols. As in the previous two domains, we had to crop it, retaining just about 12% of the full document, though that portion includes the introduction and overview sections of a typical research paper.

All four GPT-3 models do a fairly good job of summarizing the paper's chosen topic. Davinci takes the task quite literally and summarizes everything, but leaves out the most important one-line summary. Curie, Babbage, and Ada include a one-line summary of the paper, and the first two even retain considerable detail.

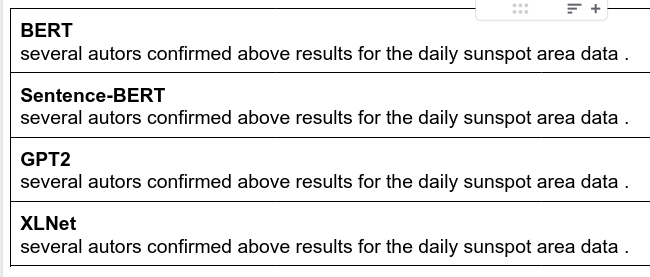

All four extractive models fare very poorly. It looks like they are tripping over some spurious text.

Three of the four analyses above used long documents that went beyond the limits imposed by the models. For example, the text supplied to BART must not go beyond 1024 subword tokens and longer documents have to be cut. Important context and details that the summarizers can use are lost forever.

In the real world, most documents like contracts and research papers breach these rather small limits. So, what can you do to retain all the information without cutting out anything? There are a couple of approaches you can take:
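One common workaround, shown here as a hedged sketch rather than as the specific method we recommend, is to split the document into overlapping chunks that each fit the model's limit, summarize each chunk, and then combine the partial summaries. The names `chunk_tokens` and `summarize_long` and the 64-token overlap are illustrative assumptions, and the `tokens` list stands in for the subword tokens a real tokenizer would produce.

```python
def chunk_tokens(tokens, max_tokens=1024, overlap=64):
    """Split a token list into chunks of at most `max_tokens`.

    Consecutive chunks share `overlap` tokens so that context at the
    boundaries is not lost entirely.
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be >= 0 and smaller than max_tokens")
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):
            break  # the last chunk already reaches the end of the document
    return chunks


def summarize_long(tokens, summarize_chunk, max_tokens=1024, overlap=64):
    """Apply a caller-supplied `summarize_chunk` function to every chunk."""
    return [summarize_chunk(chunk) for chunk in chunk_tokens(tokens, max_tokens, overlap)]


if __name__ == "__main__":
    document = [f"tok{i}" for i in range(2500)]
    pieces = chunk_tokens(document)
    print([len(p) for p in pieces])
    # Stand-in summarizer: keep the first five tokens of each chunk
    print(summarize_long(document, lambda chunk: " ".join(chunk[:5])))
```

The partial summaries can be joined as they are or passed through the summarizer once more to produce a single shorter summary.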

Based on the comparisons above, we can observe many benefits (and potential benefits) of using BART for your summarization needs.

BART's not the only model that learns to generate text when text is noisy or missing. BERT deliberately masks tokens, and PEGASUS masks entire sentences. But what sets BART apart is that it explicitly uses not just one but multiple noisy transformations. So, BART learns to generate grammatically correct sentences even when supplied with noisy or missing text. That's why it's the only model in our experiments that manages to get rid of most of the spurious text in every domain.

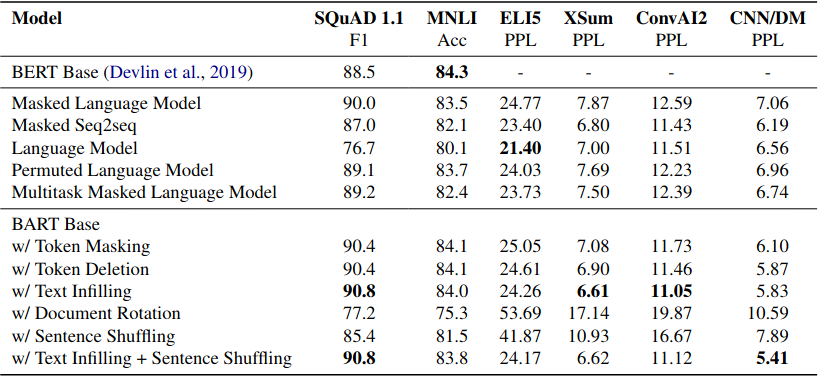

The BART paper demonstrates this by scoring better than other comparable models on most benchmarks:

As we saw above, BART produces good summaries across a range of input types. The other models are more finicky, faring very well sometimes and very poorly at other times. Such inconsistency generally requires more post-processing of the generated text using complex rules. BART doesn't suffer from those problems as much.

Related to the previous point, grammatical errors made by the other models may be particularly difficult to eliminate during post-processing. BART manages to generate grammatically correct text almost every time, most probably because it explicitly learns to handle noisy, erroneous, or spurious text.

As we saw, BART's summaries are often comparable to GPT-3's Curie and Babbage models. So, businesses can get close to GPT-3's quality and versatility but with lower costs and more flexibility regarding fine-tuning and deployments, since BART can run locally on private servers and GPUs.

Some industries are just not comfortable uploading their sensitive internal documents to a cloud provider like OpenAI or AWS. BART enables them to summarize their documents securely, with good quality, on their private servers.

GPT-3 is an amazing service but also has some limitations:

A model like BART that you can fine-tune and run on your servers doesn't suffer these problems as badly.

The Hugging Face Transformers library provides excellent pre-trained models and scripts to help you fine-tune BART on your custom data.
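Before fine-tuning, it often helps to run a pre-trained checkpoint as a baseline. The sketch below uses the Transformers `pipeline` API with `facebook/bart-large-cnn`, a publicly available BART checkpoint fine-tuned for news summarization; it is an assumption for illustration and not necessarily the checkpoint used in our comparisons. The helper names and length settings are also ours.

```python
def load_bart_summarizer(model_name="facebook/bart-large-cnn"):
    """Build a summarization pipeline; downloads the weights on first use."""
    from transformers import pipeline  # imported lazily; requires `pip install transformers torch`
    return pipeline("summarization", model=model_name)


def summarize(text, summarizer, max_length=130, min_length=30):
    """Summarize `text` with any callable that follows the pipeline's output format.

    `truncation=True` asks the tokenizer to cut inputs that exceed the model's
    input limit instead of raising an error.
    """
    result = summarizer(
        text,
        max_length=max_length,  # upper bound on generated summary tokens
        min_length=min_length,  # lower bound on generated summary tokens
        do_sample=False,        # deterministic decoding
        truncation=True,
    )
    return result[0]["summary_text"].strip()


if __name__ == "__main__":
    summarizer = load_bart_summarizer()
    document = (
        "BART is a sequence-to-sequence transformer that is pre-trained by "
        "corrupting text and learning to reconstruct it. After fine-tuning on "
        "pairs of documents and summaries, it can produce abstractive summaries "
        "of new documents, and it can run locally on private servers."
    )
    print(summarize(document, summarizer))
```

Passing the summarizer in as an argument keeps `summarize` easy to test and lets you swap in your own fine-tuned checkpoint later without changing the calling code.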

BART and PEGASUS are the most common backbones on which more advanced models implement their improvements. Advanced models like MoCa, BRIO, and SimCLS, which head the SOTA leaderboards on various datasets, use BART as their backbone.

Among the abstractive non-cloud models, BART often takes less time compared to T5 or PEGASUS. The summarization is near real-time.

BART inference runs fine on CPUs without requiring any GPUs. For training, of course, you'd want a GPU.

If we compare model file sizes (as a proxy for the number of parameters), we find that BART-large sits in a sweet spot that isn't too heavy on the hardware but also not so light as to be useless:
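The reasoning behind using file size as a proxy is simple arithmetic: a checkpoint stored in 32-bit floats needs about 4 bytes per parameter. The sketch below applies that rule of thumb to a hypothetical 1.6 GB checkpoint; the figure is illustrative only, and real files also carry a small amount of metadata or may be stored at a different precision.

```python
def estimate_parameters(file_size_bytes, bytes_per_param=4):
    """Estimate the parameter count from a checkpoint's size (float32 by default)."""
    return file_size_bytes // bytes_per_param


def estimate_file_size_gb(num_params, bytes_per_param=4):
    """Estimate the checkpoint size in gigabytes (10^9 bytes) from a parameter count."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    hypothetical_size = 1_600_000_000  # a hypothetical 1.6 GB float32 checkpoint
    print(estimate_parameters(hypothetical_size))                     # as float32
    print(estimate_parameters(hypothetical_size, bytes_per_param=2))  # as float16
    print(estimate_file_size_gb(400_000_000))
```

The same file size implies twice as many parameters if the weights are stored in 16-bit precision, so check the storage format before comparing models this way.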

A versatile language model like BART is essentially a private, self-managed, GPT-3-level model for your company's needs. You can use it for intelligent document understanding, machine translation, summarization, document classification, and much more while keeping all your sensitive business information secure and private. Contact us for expertise in building up your AI and machine learning capabilities!