The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots built on large language models to help your e-commerce customers find exactly the products they have in mind in your shop.

Generic, “chatbot-sounding” chatbots can hurt your customer retention, especially when it's easy to tell you’re talking to a bot with limited knowledge and ability. The number one concern customers bring to us about building chatbots is that the bot won’t be able to take on the persona of their current customer service team.

However, modern chatbot frameworks powered by large language models (LLMs) and carefully engineered prompts make it possible to deliver high-quality customer service with chatbots that interact like customer service agents, because they can be trained on the same knowledge and guidelines your agents use.

In this article, we explore building a chatbot powered by open-source LLMs that provides highly relevant and even personalized customer service. The chatbot has an exact game plan built into it for how to talk to customers and how to handle deflections, pain points, and vague responses from customers. It also has access to real-time customer data and accounts, so it can fully engage customers and work out solutions to their customer service problems.

Typical customer service chatbots have weaknesses both in the experience they provide to customers and in the value they generate for businesses.

Some weaknesses in the customer chatbot experience include:

These weaknesses force many customers to quickly switch to human service agents, or simply exit the service.

But if chatbots can provide far more helpful and personalized customer service, both customer satisfaction and business value can go up. Chatbots powered by large language models (LLMs) hold some promise in their ability to achieve this.

We explore such an LLM-powered chatbot system architecture and its step-by-step implementation in the sections below.

The overall system architecture of an LLM-powered customer service chatbot is shown below:

We'll explore each component in later sections. At a high level, the chatbot application programming interface (API) service consists of a chatbot pipeline that generates replies to customer queries. To do so, the pipeline draws on knowledge bases and external data sources that extend what the LLM knows.

You can provide this chatbot service to customers through multiple channels including text, voice, and text-to-speech as shown below:

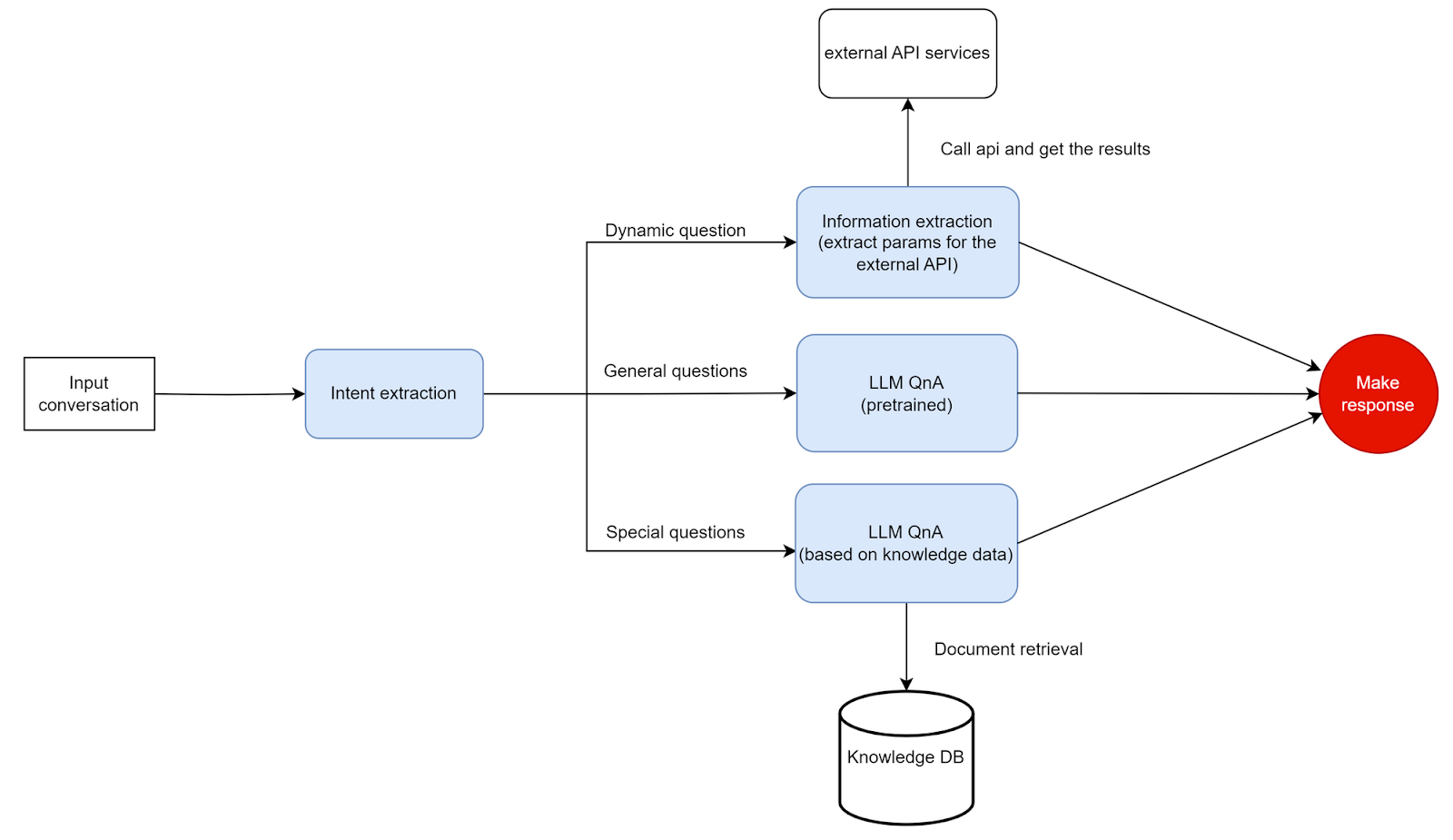

The LLM-powered chatbot pipeline is the heart of this system. Its question-answering (QnA) architecture is shown below:

The three LLM QnA components correspond to the three sources of information that are helpful to customers:

Intent recognition routes each customer query to the most suitable chatbot pipeline. In the next section, we give some details about the LLM we're using for this implementation.

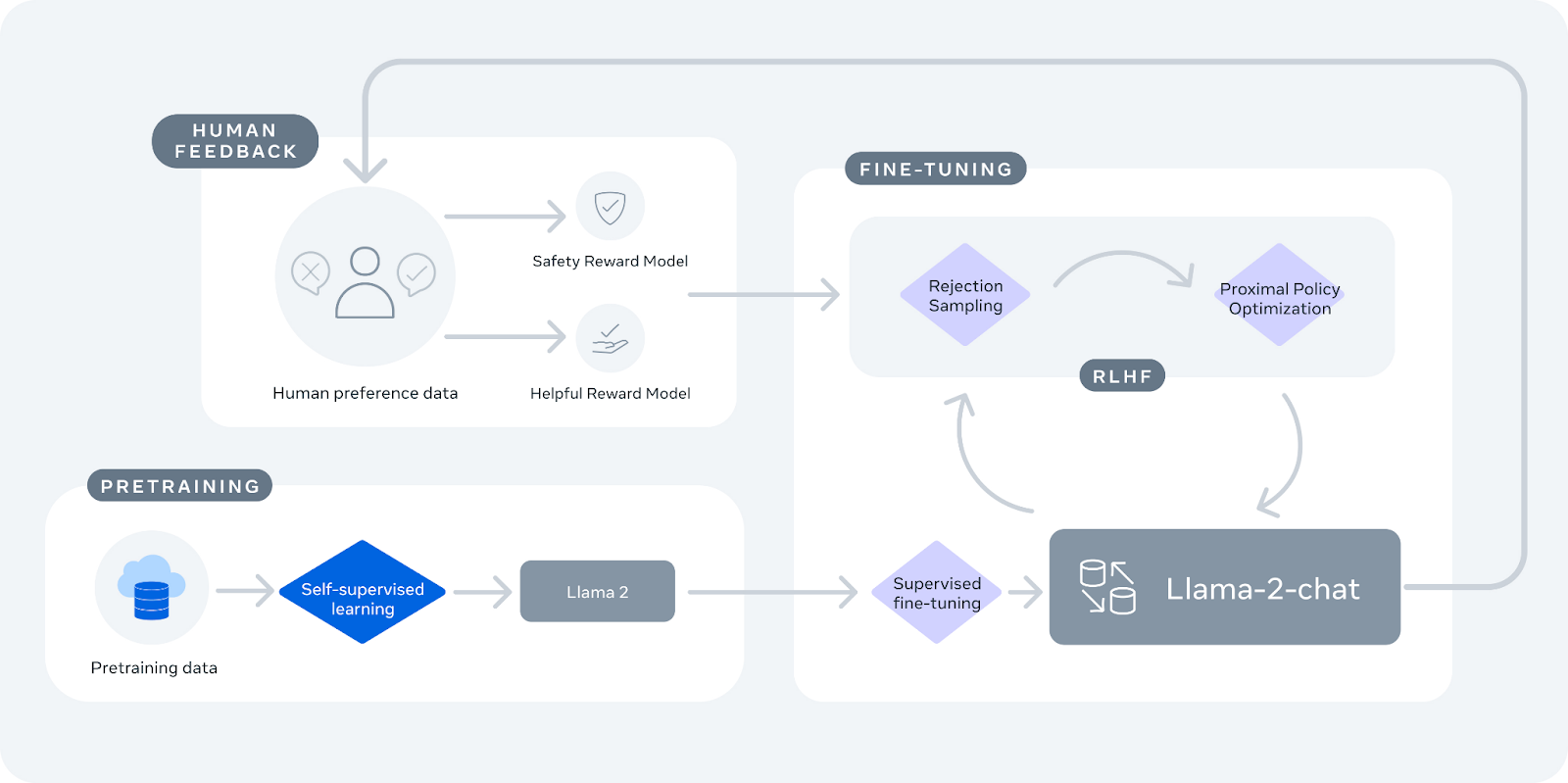

As of August 2023, Meta's Llama 2 has emerged as one of the most versatile and popular open-source LLMs, with chat quality that compares well with ChatGPT's in Meta's published evaluations.

It's also one of the few open-source LLMs fine-tuned with both the common supervised fine-tuning method and the less common, more effort-intensive reinforcement learning from human feedback (RLHF) method. As a result, the quality and relevance of its chat responses are good right out of the box.

Plus, unlike many other open-source LLMs, Llama 2's license allows commercial use, which makes it attractive to businesses.

An example chat with a pretrained Llama 2 model is shown below:

We'll start by further fine-tuning this LLM on your base business knowledge and on the ability to extract information from provided context.

FAQs, like the banking examples below, can clarify many common doubts in the minds of your customers.

But a list of FAQs on your website can make for a poor customer experience for many reasons:

LLMs are a great solution to these problems. Since they can semantically understand complex information needs and combine multiple answers on the fly, you can alleviate all three problems by training an LLM-powered chatbot on your FAQs.

Specifically, you can bake the FAQ knowledge into the LLM's weights using fine-tuning. Many approaches and third-party services can help you fine-tune an LLM.

In the steps below, we demonstrate fine-tuning your LLM chatbot using a service called MosaicML.

Transfer your FAQ training data to AWS S3, Azure Blob Storage, or another cloud object store that MosaicML can read from.

MosaicML expects the training data to be served through its Streaming library. Provide the bucket URL as the remote location of the StreamingDataset object.
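Before uploading, the FAQ pairs need to be in a machine-readable training format. The sketch below writes them as JSON Lines; the prompt and response field names are an assumption (match them to whatever your fine-tuning configuration expects), and the sample entries are made up. The Streaming library's MDSWriter can then convert such records into the shard format that StreamingDataset reads.

```python
import json
from pathlib import Path

def faqs_to_jsonl(faqs, out_path):
    """Write (question, answer) pairs as JSON Lines; return the record count.

    The "prompt"/"response" keys are an assumed schema, not a MosaicML requirement.
    """
    count = 0
    with Path(out_path).open("w", encoding="utf-8") as f:
        for question, answer in faqs:
            record = {"prompt": question.strip(), "response": answer.strip()}
            f.write(json.dumps(record, ensure_ascii=False) + "\n")
            count += 1
    return count

# Hypothetical banking FAQ entries used only for illustration.
SAMPLE_FAQS = [
    ("How do I reset my online banking password?",
     "Choose 'Forgot password' on the sign-in page and follow the emailed link."),
    ("Is there a fee for international transfers?",
     "Fees depend on the destination country and the amount transferred."),
]
```

Calling faqs_to_jsonl(SAMPLE_FAQS, "faq_train.jsonl") writes two records and returns 2.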

Since MosaicML orchestrates training using Docker, create a container image that hosts the Llama 2 model and runs it with the Hugging Face Transformers library.

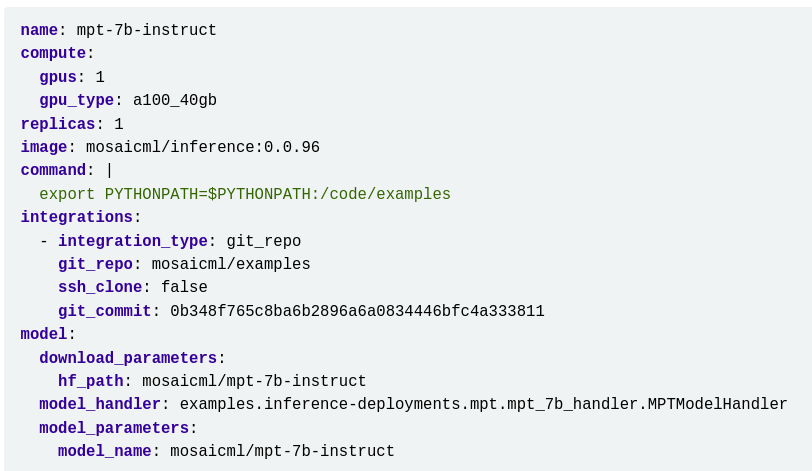

Create a configuration file similar to the one shown below, changing the details to match your environment and Docker images.

Infrastructure provisioning takes just two or three lines in that file: how many GPUs you need and of what type. MosaicML handles the rest behind the scenes.

Use the MosaicML command-line utility to start the fine-tuning run:

Use the same utility to monitor your runs:

Once the run ends, MosaicML uploads the trained model to the Hugging Face Hub or another repository.

How much difference does fine-tuning make? Below is the reply to a banking-related query from a stock Llama 2 7B (seven-billion-parameter) chat model:

We fine-tune the model on a bank's small FAQ (about 250 questions) using this strategy:

After this fine-tuning, the model's reply to the same question is shown below:

The chatbot reply is now more specific and contains relevant details from the FAQ. Plus, the chain-of-thought instruction in the system prompt tells the LLM to combine information from multiple FAQs when answering complex questions.
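As a hedged illustration of how a system prompt with a chain-of-thought instruction can be baked into every training example, the sketch below formats an FAQ pair in the [INST] / <<SYS>> layout that Meta's reference code uses for Llama 2 chat models. The system prompt wording is hypothetical, and the beginning- and end-of-sequence tokens are left for the tokenizer to add.

```python
# Llama 2 chat delimiters, as used in Meta's reference chat formatting.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"

# Hypothetical system prompt with a chain-of-thought instruction.
SYSTEM_PROMPT = (
    "You are a customer service assistant for a retail bank. "
    "Answer only from the bank's FAQ. If a question spans several FAQ "
    "entries, reason step by step and combine them into one answer."
)

def format_training_example(question, answer, system=SYSTEM_PROMPT):
    """Return a prompt/response pair in Llama 2 chat format (no BOS/EOS tokens)."""
    prompt = f"{B_INST} {B_SYS}{system}{E_SYS}{question.strip()} {E_INST}"
    return {"prompt": prompt, "response": " " + answer.strip()}
```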

FAQs are just one type of business knowledge. Your business probably has many other types of knowledge documents that straddle the line between static and dynamic information.

For example, loan or insurance products are accompanied by policy and offer documents that contain too many details for the average FAQ page, such as this sample insurance document:

Information from such knowledge documents is often very relevant for answering customer queries meaningfully. In the following sections, we explain how to incorporate their information into LLM chatbots using a technique called retrieval-augmented generation (RAG).

The key principle behind RAG is to find fragments from your knowledge documents that are semantically related to a customer's query. These fragments contain the relevant information that the LLM can use as context to meaningfully answer the customer's question.
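To make the principle concrete, here is a minimal sketch of the last step: packing retrieved fragments into the LLM's prompt. It assumes the fragments arrive already ranked by relevance as (source ID, text) pairs; the instruction wording and the character budget are illustrative choices, and a production system would budget in tokens instead.

```python
def build_rag_prompt(question, fragments, max_chars=2000):
    """Assemble ranked (source_id, text) fragments into a context-grounded prompt.

    Fragments are added in order until the character budget would be exceeded,
    so the most relevant ones always make it into the context.
    """
    context_parts, used = [], 0
    for source_id, text in fragments:
        snippet = f"[{source_id}] {text.strip()}"
        if used + len(snippet) > max_chars:
            break
        context_parts.append(snippet)
        used += len(snippet)
    context = "\n\n".join(context_parts)
    return (
        "Answer the customer's question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question.strip()}\nAnswer:"
    )
```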

To implement RAG, you need these three components:

Let's understand each of these components a bit more.

The vector DB stores and looks up the potentially millions of embedding vectors generated from your knowledge documents. A vector may represent an entire document, or a chapter, page, paragraph, or even a single sentence.
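Choosing that granularity is a chunking decision. A minimal sketch, assuming plain-text documents whose paragraphs are separated by blank lines: split into paragraphs, cap each fragment at a word budget, and keep the document ID on every fragment so its vector can be traced back later. The doc_id#n ID format is a hypothetical convention.

```python
def split_into_fragments(doc_id, text, max_words=120):
    """Split a document into paragraph-level fragments for embedding.

    Paragraphs longer than max_words are split further into word windows.
    Each fragment records its source document so its vector can be found
    and replaced when the document changes.
    """
    fragments = []
    paragraphs = [p.strip() for p in text.split("\n\n") if p.strip()]
    for para in paragraphs:
        words = para.split()
        for start in range(0, len(words), max_words):
            fragments.append({
                "id": f"{doc_id}#{len(fragments)}",  # hypothetical ID convention
                "doc_id": doc_id,
                "text": " ".join(words[start:start + max_words]),
            })
    return fragments
```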

The vector database can be a self-hosted database like Qdrant or Weaviate or a managed third-party service with an API, like Pinecone.

The embedding service has two functions:

We use the SentenceTransformers framework for calculating and matching embeddings, specifically its asymmetric semantic search models. That's because user queries are typically much shorter than document fragments, requiring asymmetric rather than symmetric matching.
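The matching step itself is nearest-neighbor search by cosine similarity. In production, the vectors would come from a SentenceTransformers model's encode method (the library's documentation recommends its MS MARCO models for asymmetric search, and sentence_transformers.util.semantic_search performs this ranking). The pure-Python sketch below shows the same logic with toy vectors so it runs without dependencies.

```python
import heapq
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def top_k(query_vec, index, k=3):
    """Return the k (score, fragment_id) pairs most similar to the query.

    index maps fragment_id -> embedding vector. A vector DB such as Qdrant,
    Weaviate, or Pinecone does this with approximate search at scale.
    """
    scored = ((cosine(query_vec, vec), frag_id) for frag_id, vec in index.items())
    return heapq.nlargest(k, scored)
```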

Finally, you need a component that allows your business operations teams to modify (add, update, or delete) your knowledge documents. When a document changes, this component modifies its related embeddings in the vector DB using the embedding service.
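A minimal sketch of that update logic, using an in-memory dictionary as a stand-in for the vector DB (the FragmentIndex class is hypothetical). Replacing all of a document's fragments on every change is simpler than diffing them, because fragment boundaries shift when the text is edited.

```python
class FragmentIndex:
    """In-memory stand-in for a vector DB collection (hypothetical).

    embed is any callable mapping text -> vector, for example a
    SentenceTransformers model's encode method in production.
    """

    def __init__(self, embed):
        self.embed = embed
        self.vectors = {}  # fragment_id -> vector
        self.by_doc = {}   # doc_id -> set of fragment_ids

    def upsert_document(self, doc_id, fragments):
        """Replace every stored vector for doc_id with freshly embedded fragments."""
        self.delete_document(doc_id)
        ids = set()
        for frag_id, text in fragments:
            self.vectors[frag_id] = self.embed(text)
            ids.add(frag_id)
        self.by_doc[doc_id] = ids

    def delete_document(self, doc_id):
        """Remove all vectors that belong to doc_id (no-op if unknown)."""
        for frag_id in self.by_doc.pop(doc_id, set()):
            self.vectors.pop(frag_id, None)
```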

Documents are treated as non-dynamic data because they change infrequently and don't contain information about availability or other frequently changing values.

We bring in dynamic data, such as inventory levels, constantly changing prices, and results from external API services: key information we want the chatbot to answer questions about and use in conversation, but that changes too frequently to store in a vector database the way we store documents. If we vector-indexed inventory data, we would have to update and reindex the database every time that data changed. It makes more sense to store relatively stable data like product specifications and product tags in the vector DB and to call an API for inventory and pricing information.
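The split between indexed and live data can be sketched as follows. The fetch_live callable stands in for your inventory and pricing API and is hypothetical; the point is that price and stock are read at question time and never written into the vector DB.

```python
def product_answer_context(product_id, static_specs, fetch_live):
    """Combine indexed product specs with live inventory and pricing.

    static_specs: dict of product_id -> specs retrieved from the vector DB.
    fetch_live: hypothetical callable that queries your inventory/pricing API
    and returns {"price": ..., "in_stock": ...}.
    Returns None when the product is unknown.
    """
    specs = static_specs.get(product_id)
    if specs is None:
        return None
    live = fetch_live(product_id)  # fresh on every call, never cached in the index
    return {**specs, "price": live["price"], "in_stock": live["in_stock"]}
```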

This approach also lets us personalize the chatbot by pulling in customer-specific data from the customer's account. That data can guide our responses and help the chatbot ask questions that move the conversation toward its goal. Being able to skip questions and steps whose answers we already know can improve retention.

A good chatbot should aim to identify each customer's exact information needs and guide them toward an answer one step at a time by asking for specific details. This is like chain-of-thought prompting but with more awareness of your industry and your specific business.
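One simple way to implement this step-by-step elicitation, combined with the account-based personalization described above, is slot filling. In this minimal sketch, the slot names and questions are hypothetical; the chatbot fills any slot it can already answer from the signed-in customer's profile and asks only for the rest.

```python
# Hypothetical slots and questions for a mortgage chatbot, asked in this order.
REQUIRED_SLOTS = {
    "loan_amount": "How much would you like to borrow?",
    "term_years": "Over how many years would you like to repay the loan?",
    "property_zip": "What is the ZIP code of the property?",
}

def next_question(collected, account_profile):
    """Return the next question to ask, or None once every slot is filled.

    `collected` is updated in place: slots found in the customer's account
    profile are copied over instead of being asked about.
    """
    for slot, question in REQUIRED_SLOTS.items():
        if slot in collected:
            continue
        if slot in account_profile:
            collected[slot] = account_profile[slot]  # already known: skip the question
            continue
        return question
    return None
```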

For example, a mortgage business' chatbot must elicit key details like the loan amount and term that a customer is looking for. With those details, it must fetch dynamic information like the customer's credit score and suitable interest rate, calculate the monthly payment, and convey all these details as shown below.
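The monthly payment in that example comes from the standard fixed-rate amortization formula, M = P * r / (1 - (1 + r)^(-n)), where P is the loan amount, r the monthly interest rate, and n the number of monthly payments. A minimal sketch, assuming the annual rate has already been fetched from your dynamic data:

```python
def monthly_payment(principal, annual_rate, years):
    """Fixed-rate amortized monthly payment: P * r / (1 - (1 + r) ** -n)."""
    n = years * 12            # number of monthly payments
    r = annual_rate / 12      # monthly interest rate
    if r == 0:
        return principal / n  # interest-free loan: equal principal installments
    return principal * r / (1 - (1 + r) ** -n)
```

For example, monthly_payment(300_000, 0.06, 30) comes to about 1798.65.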

Or take the example of an e-commerce chatbot. It must guide a customer into revealing the kind of product and product characteristics they have in mind to find a close match. The choices at each step are dynamic because they're based on the customer's previous choices as shown below.
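A minimal sketch of that narrowing loop, assuming products are flat attribute dictionaries. Asking next about the attribute with the most distinct values among the remaining candidates is our illustrative heuristic, not a prescribed method.

```python
def filter_products(products, choices):
    """Keep products that match every attribute value the customer has chosen."""
    return [p for p in products
            if all(p.get(attr) == value for attr, value in choices.items())]

def next_attribute(products, choices, skip=("sku", "name")):
    """Pick the unanswered attribute that best splits the remaining products.

    Returns None when no attribute distinguishes the remaining candidates,
    i.e. the customer has effectively found their product.
    """
    remaining = filter_products(products, choices)
    attrs = {a for p in remaining for a in p if a not in choices and a not in skip}
    best, best_count = None, 1
    for attr in sorted(attrs):  # sorted for deterministic tie-breaking
        distinct = len({p.get(attr) for p in remaining})
        if distinct > best_count:
            best, best_count = attr, distinct
    return best
```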

For such elicitation of a customer's exact need, your chatbot must issue the relevant commands and fields expected by the API services that manage your dynamic information. Some popular approaches to implement this are:

We have demonstrated ReAct prompting’s ability in chatbots elsewhere. In this article, we demonstrate the Toolformer approach.

Toolformer is a technique that equips LLMs to invoke external tools by issuing API-like action commands and including the results in the generated text. We like Toolformer for chatbots because placing the dynamic text inline with the model-generated text makes it very easy to blend the two into one response. Many other approaches require you to pull the dynamic knowledge into the prompt and then generate everything in one go. With Toolformer, the model can focus on the conversational text and emit simple tags only when it needs dynamic data. These examples illustrate the idea, with the tool actions and results highlighted:

In the example above:

A critical detail to understand about the Toolformer approach is that the LLM itself probabilistically decides when to insert a tool action, which action to insert, and what inputs to give it. The application that receives the LLM's output (a chatbot in this article) is responsible for detecting the tool actions in the text and accordingly executing them.
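A minimal sketch of that detection-and-execution step, assuming the model writes actions as [ToolName(arguments)], following the bracket syntax of the Toolformer paper; the tool names here are hypothetical. This sketch post-processes a finished reply and substitutes each result inline, whereas the Toolformer paper pauses decoding at the call, runs the tool, and resumes. Arguments are passed to the tool as a raw string and are never evaluated as code.

```python
import re

# Matches actions like [Price(sku-42)] or [LoanPayment(300000, 0.06, 30)].
TOOL_PATTERN = re.compile(r"\[(\w+)\(([^)\]]*)\)\]")

def execute_tool_actions(text, tools):
    """Replace each recognized tool action in `text` with the tool's result.

    tools maps tool name -> callable taking the raw argument string.
    Unknown tools are left in place so they can be logged or handled later.
    """
    def run(match):
        name, args = match.group(1), match.group(2)
        tool = tools.get(name)
        if tool is None:
            return match.group(0)
        return str(tool(args))
    return TOOL_PATTERN.sub(run, text)
```

With a hypothetical price lookup registered as "Price", the reply "That item costs [Price(sku-42)] today." becomes "That item costs $19.99 today." when the lookup returns "$19.99".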

Equipping an LLM with Toolformer involves both in-context learning and fine-tuning as shown below and explained in the next sections.

The first stage of Toolformer training involves in-context learning (i.e., generating prompts containing tool action examples). For each tool required by your chatbot, a suitable prompt is provided along with a few in-context input-output pairs as examples.

A typical tool action prompt with two in-context examples is shown below:

Each input is a factual statement, and each output is the same statement annotated with the tool actions (and their inputs) that are likely to produce the facts it contains.
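A minimal sketch of how such a prompt can be assembled for a hypothetical LoanPayment tool, mirroring the Input/Output layout of the Toolformer paper's annotation prompts. The tool name, its argument order, and the instruction wording are assumptions; the example payment figures follow from the standard fixed-rate amortization formula.

```python
# Hypothetical tool and wording, modeled on the Toolformer annotation prompts.
INSTRUCTIONS = (
    "Your task is to add calls to a LoanPayment API to a piece of text. "
    "The calls should help you work out any monthly loan payment in the text. "
    'You can call the API by writing "[LoanPayment(principal, annual_rate, years)]".'
)

# Two in-context (input, output) pairs for fixed-rate loans.
EXAMPLES = [
    ("A $300,000 loan at 6% over 30 years costs about $1,798.65 a month.",
     "A $300,000 loan at 6% over 30 years costs about "
     "[LoanPayment(300000, 0.06, 30)] $1,798.65 a month."),
    ("Borrowing $150,000 at 6% for 30 years means paying $899.33 monthly.",
     "Borrowing $150,000 at 6% for 30 years means paying "
     "[LoanPayment(150000, 0.06, 30)] $899.33 monthly."),
]

def build_annotation_prompt(text, examples=EXAMPLES):
    """Return the few-shot prompt asking the LLM to annotate `text` with tool calls."""
    lines = [INSTRUCTIONS, ""]
    for source, annotated in examples:
        lines += [f"Input: {source}", f"Output: {annotated}", ""]
    lines += [f"Input: {text}", "Output:"]
    return "\n".join(lines)
```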

For a mortgage business, the examples might resemble these:

The in-context learning prompts above are combined with several samples from a dataset like CCNet. Samples are selected based on some heuristic relevance to an action and then randomly annotated with tool actions at different positions.

For every generated sample above, the training script executes the tool actions it finds in the samples. Running a tool may involve some custom logic (like a loan calculation) or calling an external API (like getting a product price from an inventory API).

The result is a dataset in which tool actions and their results have been inserted at different random positions.

Next, for each sample, the training script compares the model's loss on the tokens that follow a tool action with and without the action's result, to identify whether executing the tool reduced the loss on those subsequent tokens. Any sample whose tool action didn't reduce the loss is eliminated.
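The Toolformer paper makes this criterion precise: an action is kept only if the loss with its result is lower, by at least a threshold tau, than the better of two baselines (no action at all, or the action without its result). A minimal sketch of that filter, assuming you already have per-token negative log-likelihoods for the tokens following the action under each variant; the decaying weights are similar to the paper's, and tau = 1.0 is an arbitrary default.

```python
def weighted_loss(token_nll):
    """Weighted average of per-token losses after a candidate tool action.

    Tokens closer to the action count more: weights are 1.0, 0.8, 0.6, ...,
    and tokens five or more positions later get weight 0.
    """
    if not token_nll:
        raise ValueError("need at least one token loss")
    weights = [max(0.0, 1.0 - 0.2 * t) for t in range(len(token_nll))]
    return sum(w * nll for w, nll in zip(weights, token_nll)) / sum(weights)

def keep_tool_action(nll_with_result, nll_without_action, nll_action_no_result, tau=1.0):
    """Keep an action only if its result lowers the weighted loss by at least tau."""
    loss_plus = weighted_loss(nll_with_result)
    loss_minus = min(weighted_loss(nll_without_action),
                     weighted_loss(nll_action_no_result))
    return loss_minus - loss_plus >= tau
```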

So you're left with a dataset where tool actions produced expected and semantically correct results.

Finally, this filtered dataset is used to fine-tune the LLM and equip it with Toolformer capabilities. The fine-tuning follows the same MosaicML workflow as the FAQ fine-tuning above.

The first component to process each customer query is the intent extraction component. Before the query is processed by the LLM-based question-answering pipeline, this component attempts to classify the nature of the query as:

After determining the nature of the query, it's dispatched to the appropriate pipeline. For the actual classification, this component just uses another LLM instance that's fine-tuned for query classification tasks.
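The dispatch logic can be sketched as follows. The three intent labels are an assumption that mirrors the three information sources discussed in this article (FAQs, knowledge documents, and dynamic data), and the keyword rules are only a stand-in for the fine-tuned classification LLM so that the sketch runs on its own.

```python
def classify_intent(query):
    """Stand-in for the fine-tuned classification LLM (hypothetical keyword rules)."""
    q = query.lower()
    if any(w in q for w in ("my account", "my order", "balance", "price", "in stock")):
        return "dynamic"
    if any(w in q for w in ("policy", "terms", "coverage", "document")):
        return "documents"
    return "faq"

def route(query, pipelines, classify=classify_intent):
    """Dispatch the query to the pipeline registered for its intent.

    Falls back to the FAQ pipeline if no pipeline is registered for the label.
    """
    intent = classify(query)
    handler = pipelines.get(intent, pipelines["faq"])
    return intent, handler(query)
```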

This section provides details on the databases that support your chatbot's operations.

If you're a mid-sized business, you probably want not just one but multiple customer chatbots to cover each of your major products or business lines. For example, if you're a bank, you may want a chatbot for retail banking and another for your mortgage business. They can share the same front-end user interface but route the queries to the relevant business line's chatbot in the backend.

Similarly, if you're a software-as-a-service (SaaS) provider offering an online employee payroll and benefits management service, you'd want a separate chatbot for each client company, because each client's FAQs, knowledge documents, and dynamic information will all be different.

For such multiple chatbot arrangements, you need a multi-tenant relational database where each chatbot's knowledge and interactions are separated by grouping them under a client or business line. Such a database schema is shown below:

This database groups each chatbot's target users, documents, and interactions using a group ID. When a client's website calls the chatbot API, it routes the interaction to the chatbot instance created for that client.
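A minimal sketch of such a schema in SQLite. The table and column names are hypothetical, not taken from the schema figure: every row carries a group_id, and every query filters on it, so one tenant's chatbot never sees another tenant's documents.

```python
import sqlite3

# Hypothetical multi-tenant schema: each table is scoped by group_id.
SCHEMA = """
CREATE TABLE chatbot_group (
    group_id INTEGER PRIMARY KEY,
    name     TEXT NOT NULL UNIQUE  -- a client company or business line
);
CREATE TABLE app_user (
    user_id  INTEGER PRIMARY KEY,
    group_id INTEGER NOT NULL REFERENCES chatbot_group(group_id),
    email    TEXT NOT NULL
);
CREATE TABLE document (
    doc_id   INTEGER PRIMARY KEY,
    group_id INTEGER NOT NULL REFERENCES chatbot_group(group_id),
    title    TEXT NOT NULL
);
CREATE TABLE interaction (
    interaction_id INTEGER PRIMARY KEY,
    group_id   INTEGER NOT NULL REFERENCES chatbot_group(group_id),
    user_id    INTEGER REFERENCES app_user(user_id),
    user_query TEXT NOT NULL,
    bot_reply  TEXT
);
"""

def documents_for_group(conn, group_name):
    """Return the titles of documents visible to one chatbot tenant only."""
    rows = conn.execute(
        "SELECT d.title FROM document d "
        "JOIN chatbot_group g ON g.group_id = d.group_id "
        "WHERE g.name = ? ORDER BY d.doc_id",
        (group_name,),
    )
    return [title for (title,) in rows]
```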

Your dynamic data consists of all your existing databases that provide dynamic information like:

These are modified through proprietary APIs in response to actions generated by the LLM's Toolformer (or ReAct prompting or equivalent) implementation.

In this section, we explain some critical deployment aspects you must take into account when going to production.

You can deploy the LLM you fine-tuned using MosaicML with these steps.

The configuration file tells MosaicML:

An example deployment configuration is shown below (for another model; change it to match Llama 2):

Use the mcli utility to deploy the model. MosaicML automatically provisions all the resources it needs for inference:

The model is deployed and published at an API endpoint.

MosaicML gives a unique name to each deployed model. You need to know it to send requests to your fine-tuned model. Run this command to list all active deployments:

It'll list your active deployments:

Try submitting prompts to your deployed model using code like this:
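As a hedged, generic sketch only: the snippet below builds and sends a JSON POST request with Python's standard library. The endpoint URL, the payload keys, and the Authorization header format are assumptions, not MosaicML's documented schema; substitute the values from your deployment listing and API documentation.

```python
import json
import urllib.request

def build_inference_request(endpoint_url, api_key, prompt, max_new_tokens=256):
    """Build (but do not send) a JSON POST request to a model endpoint.

    The payload keys and the Authorization header format are assumptions;
    match them to the schema your deployment actually exposes.
    """
    payload = {"inputs": [prompt], "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": api_key, "Content-Type": "application/json"},
        method="POST",
    )

def ask(endpoint_url, api_key, prompt, timeout=30):
    """Send the request and return the decoded JSON response body."""
    req = build_inference_request(endpoint_url, api_key, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```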

The LLMs, databases, chatbot API, and other self-hosted services run as a mesh of microservices in Docker containers.

For customer-specific dynamic information, the chatbot system must authenticate your customer. We implement this using single sign-on so that chatbot services are available seamlessly to a customer who's already signed in.

Additionally, to prevent unknown applications from accessing your chatbot APIs, authorization checks are enabled for each microservice and API.
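One common way to implement such service-to-service checks is a shared-secret HMAC signature on every request; this is our illustration, not necessarily the mechanism this system uses. In the sketch, each calling service has its own secret, and a request is accepted only if it comes from a known service, is recent, and carries a valid signature over its body. The service IDs and secrets are hypothetical.

```python
import hashlib
import hmac
import time

def sign(secret, service_id, timestamp, body):
    """HMAC-SHA256 over service ID, timestamp, and raw request body."""
    msg = f"{service_id}.{timestamp}.".encode() + body
    return hmac.new(secret, msg, hashlib.sha256).hexdigest()

def verify(secrets, service_id, timestamp, body, signature, max_skew=300, now=None):
    """Accept a request only from a known service with a fresh, valid signature."""
    secret = secrets.get(service_id)
    if secret is None:
        return False  # unknown application
    now = time.time() if now is None else now
    if abs(now - timestamp) > max_skew:
        return False  # stale or replayed request
    expected = sign(secret, service_id, timestamp, body)
    return hmac.compare_digest(expected, signature)  # constant-time comparison
```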

The overall system architecture depends on the following cloud services:

In this article, you saw how to implement an LLM-powered chatbot. A major problem with traditional customer service chatbots has been that, unlike their human counterparts, they don't understand natural language well. With the help of LLMs and their powerful natural language processing capabilities, chatbots can overcome these deficiencies and provide far better customer experiences.

Contact us to find out how we can help you equip your business with high-quality customer service chatbots.