The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots and the power of large language models to help your e-commerce customers find the exact products they have in mind in your shop.

A call to action (CTA) is used in website copy to guide the reader to the preferred next step in your funnel. A compelling CTA is a written directive that blends with the copy the reader has just read and with how that copy makes them feel. What makes CTA copy blend well and reach the "goal" feelings can be broken down into factors such as tone, target audience, personal connection, and more.

A call to action generator lets you instantly generate the text used in subtitles and buttons from a few inputs. Many of these inputs target the key ideas by having you choose the tone of voice, what you're selling (or where you want the reader to go), and what industry your target audience is in.

Most of the call to action generator tools on the market are okay. They do a decent job of taking whatever inputs you provide and giving you a few options for button text or product landing page subtitles. But does this copy actually convert well? Is it actually useful to have a tool generate "Buy Now" for an ecommerce landing page? Here's a short list of improvements we'll build into a custom call to action generator using our state-of-the-art Width.ai GPT-3 pipeline.

1. More valuable/higher ROI calls to action. I don't need to pay for a tool to generate "Start my free trial". Let's look at how we can generate the subtitle section and a better button.

2. Auto-optimize our generated CTAs based on real feedback and valuable metrics. This allows us to constantly improve the generations based on actual results. We'll create an internal feedback loop that adjusts our generated content ideas based on the impact of our copy. This also helps us avoid trying to build a huge generalized product. Niched, refined, user-focused AI products > AI models generalized for everyone.

3. Higher quality use of niches and use cases with expanded data variance. This provides a real difference in CTAs used for an ecommerce store's product page vs a real estate company's home page. Most of the copy generation tools try to do this, but the CTAs aren’t much different and don’t rely on specific information from the rest of the copy to vary the CTA. We're going to focus on working through this to make CTA generation actually useful for high quality copy.

4. Create structure around the expected use case of a generated CTA. This idea piggybacks off the above to focus on making the generated CTAs more specific to exact use cases.

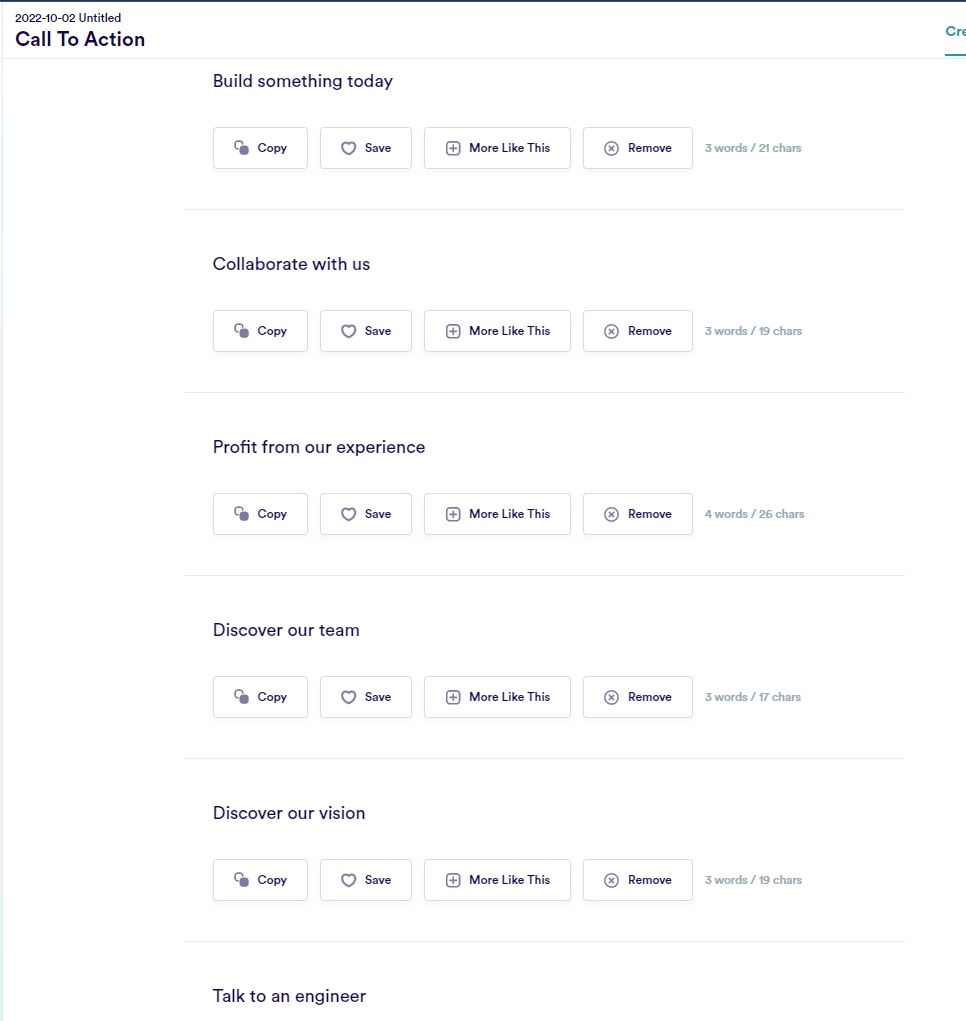

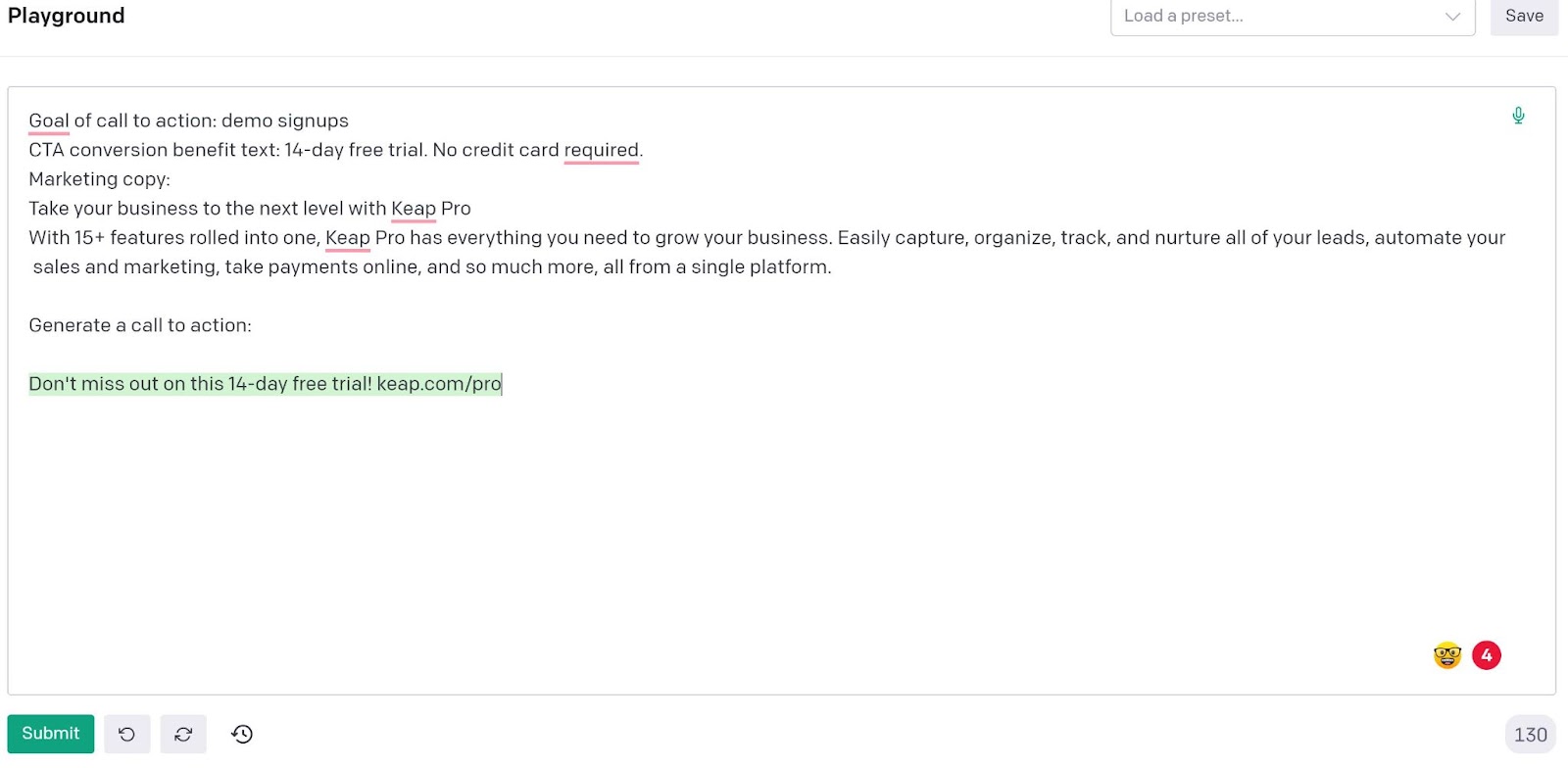

Here are some inputs used to generate a call to action with an AI marketing copy product.

The output CTAs aren’t terrible considering the amount of information I provided, but how is this useful? Most of these CTAs would be used in different funnels or in different parts of the funnel. If I were writing copy with a very specific requirement, I couldn’t provide more information to get CTAs relevant to the copy I’ve written and its goal.

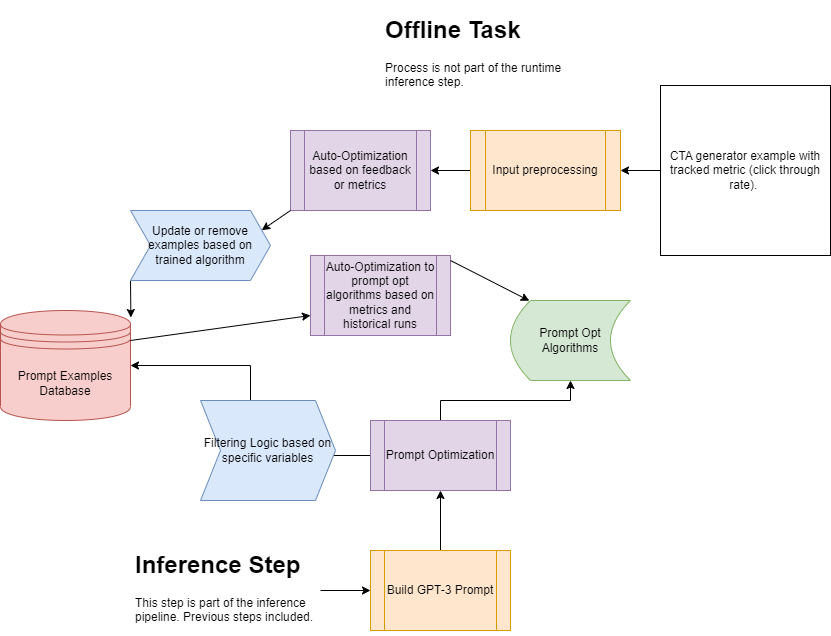

The architecture used for generating a call to action is a slightly modified version of our Width.ai GPT-3 pipeline architecture. The key difference is the first and last modules for inputs and copy storage updating, which are use case specific. The above pipeline does not contain the steps for updating the required database information to automatically optimize. Let’s take a look at each of the steps in our custom CTA generation tool.

How we set up and structure our inputs is the most important part of any production level GPT-3 product. GPT-3 is essentially a language API that reacts to the information we provide it, and not much more than that. The real equation is what we do upstream to understand what information is valuable to produce strong results, structuring that information, and how we maximize the probability of a strong result with varying levels of input data variance.

We’re going to focus on SaaS product specific copy and CTAs to make sure the examples and pipeline modules used stay consistent across sections. The key variance will be various parts of the funnel and how the equation changes over these different use cases (blog post, landing page, email, footer etc). Here are the inputs we’ve had great success with for these tools.

This field is required although not commonly used in shorter CTAs. We’ll also use this field to do a bit of auto optimization to focus on your specific copy examples and saved successful copy. When looking to generate longer CTAs for subtitles or popups it can be a useful field to have.

This is a variation of the popular and generic “describe your product” field commonly seen. We want to make the description of what you’re selling actually valuable by including a value breakdown that better helps NLP models understand what the end reader is looking for. This field can also be focused just on what the end reader is looking for, but I find it easier to write about what value we provide than trying to get fully into the mind of the reader and ensure the rest of the fields are reader focused.

“Pumice.ai is a SaaS product focused on completing manual product information management tasks 17x faster using AI. Ecommerce brands use AI models with over 90% accuracy for tasks such as automated product categorization and product attribute tagging.”

Most CTA generation use cases won’t find this field particularly useful if long-form copy is being used before and after the CTA. Information from the copy will be much more relevant and do a better job steering our generated CTAs towards something that fits better with what you’re trying to use it for.

What are we generating this CTA for? This is one of the key components of generating CTAs for specific parts of the copy. This can be multiple inputs to generate CTAs for the different spots in the same copy depending on how you want to use a custom CTA generator.

“Button CTA used to drive users to webinar”

“Button CTA going to product page”

“Short subtitle text above buttons”

This text can be as specific as you want it to be. We’ve seen a pretty strong correlation between this field and how much text is provided in the copy section. If you don’t have a lot of copy or the use case doesn’t require much (emails) then it’s better to be a bit more descriptive. I recommend playing around with this and evaluating based on how you like the output.

The text required to generate the buttons or the button subtext (objection handler) would be different, as they have different objectives. Generating the objection handler is probably the most valuable part here and contributes more to CTA conversions than the button text itself. We can also specify other fields to generate here, such as objection handlers, trust badges, and supporting text.

We’re going to use an improved version of this field that helps GPT-3 in situations where not much copy is provided. If you’re using this optional field, it should be multiple sentences explaining the required tone instead of keywords such as “Luxury” or “Relaxed”. Copywriters don’t know what those mean, and they don’t help a model understand how to generate better responses.

We can also use this field to filter between different internal copy methods to try to generate based solely on our own copy. This is useful if we’re using this tool for various companies and want to be able to focus the generations on just one company and one use case.

“CTA for Width.ai blog posts”

This is the most important field for generating high quality calls to action and for constantly improving the generations over time. In our prompt engineering, this field shows the highest correlation between input text and the generated CTA. Through both prompt optimization and example optimization, GPT-3 learns a deep relationship between the long-form text provided here and what successful outputs look like.

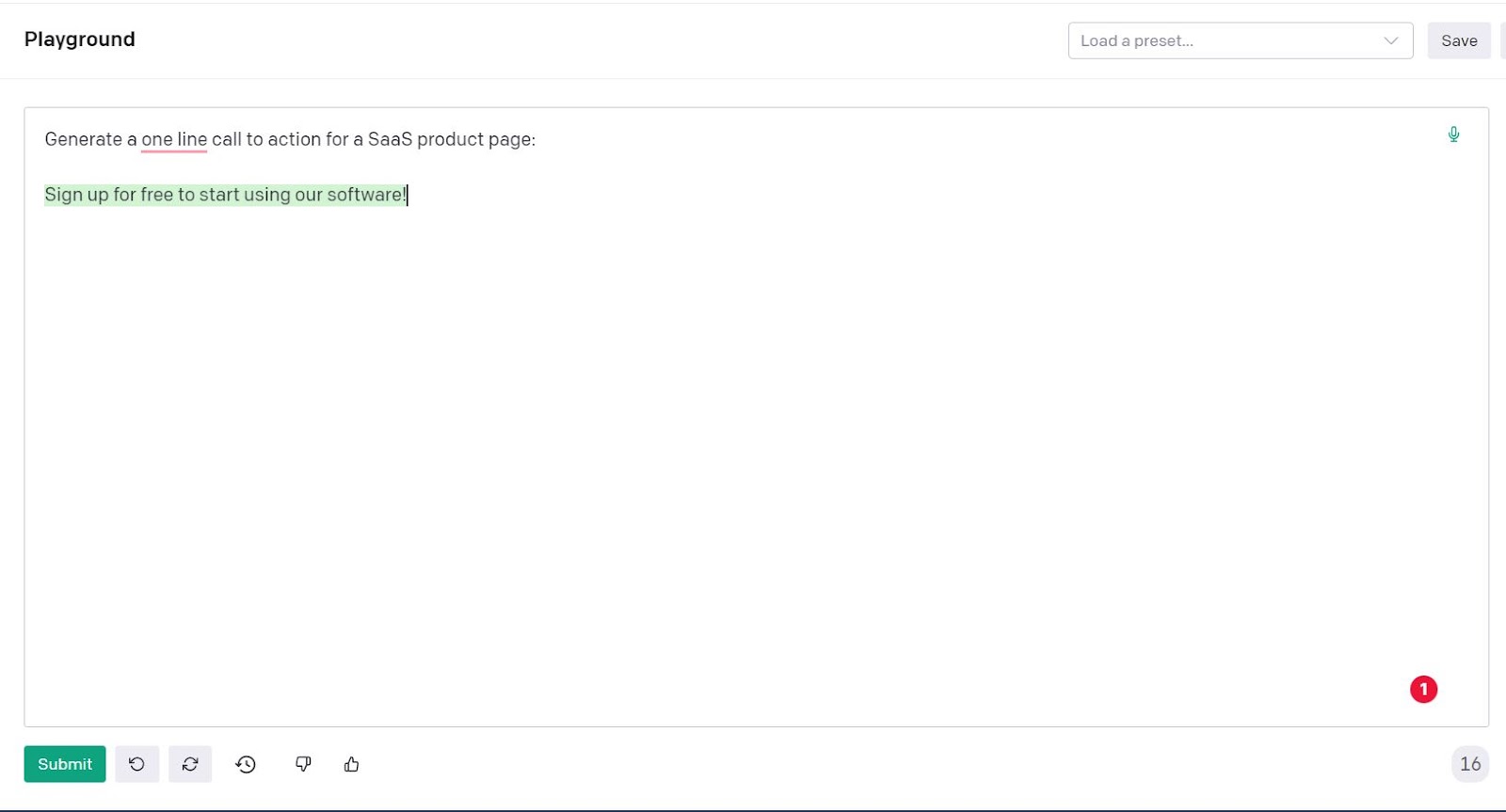

The same prompt header is used with both prompts being zero shot. You can see how much adding the marketing copy makes a difference even in this simple example. All we used was just a header and marketing copy with zero examples!
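To make the zero-shot setup concrete, here is a minimal Python sketch of a prompt built from just a header and optional marketing copy. The header wording, the `Marketing copy:` and `Call to action:` labels, and the function name are hypothetical; the article does not publish its actual prompt.

```python
from typing import Optional

# Hypothetical header; the article does not publish its actual prompt header.
PROMPT_HEADER = "Write a short, compelling call to action for the marketing copy below."


def build_zero_shot_prompt(header: str, marketing_copy: Optional[str] = None) -> str:
    """Zero-shot prompt: a header, optional marketing copy, and an open CTA slot."""
    parts = [header.strip()]
    if marketing_copy:
        parts.append("Marketing copy:\n" + marketing_copy.strip())
    # Ending on an open label lets the model complete the CTA itself.
    parts.append("Call to action:")
    return "\n\n".join(parts)


header_only = build_zero_shot_prompt(PROMPT_HEADER)
with_copy = build_zero_shot_prompt(
    PROMPT_HEADER,
    "Ecommerce brands use Pumice.ai for automated product categorization and attribute tagging.",
)
```

Both prompts contain zero examples; the only difference is whether the marketing copy is present.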

This module in the pipeline focuses on transforming raw input variables into an assigned format for GPT-3. This transformation is developed through our prompt engineering and testing to understand how to best display the input information to GPT-3, and what has to be done to reach the goal prompt information. Our prompt engineering phase of development is a rigorous step in the process but is a key part of the equation to reach a production level system with high accuracy and data variance coverage. The prompt engineering phase helps us work backwards to understand what tools to build to transform the inputs.

One of the key input variable tools we use lets users tag specific lines of text in the “copy” variable with labels such as headers, button text, titles, and more. This gives GPT-3 more context around the raw copy text and helps refine the output CTAs. This has been shown to improve the model's ability to provide valuable language variation in CTAs and avoid those generic “Buy Now” generations.

Examples of user defined copy tags before input preprocessing removes the tags and adds prompt language

This input processing module handles going from these raw tags that users provide to optimized prompt language used to tell GPT-3 how to use the text, and what matters in this sea of marketing copy. These descriptors really affect the ability of GPT-3 to use the marketing copy without just repeating parts of the input copy.
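A minimal sketch of this tag-to-prompt-language step, assuming a hypothetical tag format where users prefix a line with `[title]`, `[header]`, or `[button]`. The tag names and descriptor wording are illustrative assumptions, not the tool's real tags.

```python
import re

# Hypothetical user tags mapped to the prompt language that replaces them.
TAG_DESCRIPTORS = {
    "title": "Landing page title",
    "header": "Section header",
    "button": "Existing button text",
}
TAGGED_LINE = re.compile(r"^\[(\w+)\]\s*(.*)$")


def tags_to_prompt_language(tagged_copy: str) -> str:
    """Replace per-line user tags with descriptive labels GPT-3 can use as context."""
    out = []
    for line in tagged_copy.splitlines():
        line = line.strip()
        if not line:
            continue
        match = TAGGED_LINE.match(line)
        if match and match.group(1).lower() in TAG_DESCRIPTORS:
            out.append(f"{TAG_DESCRIPTORS[match.group(1).lower()]}: {match.group(2)}")
        else:
            # Untagged lines (or unknown tags) are labeled as plain body copy.
            out.append(f"Body copy: {line}")
    return "\n".join(out)


tagged = (
    "[title] Write better CTAs in seconds\n"
    "Our model reads your whole landing page.\n"
    "[button] Try it free"
)
print(tags_to_prompt_language(tagged))
```

Untagged lines fall back to a body-copy label, so every line reaches the model with some context about its role.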

Generic example where the button CTA is just a repeat of the landing page title. This is pretty common, and most generations will be some form of an input variable.

Generated button CTA with our custom prompt language. Model is able to easily generate its own language and understands what Quicklines is. The most important part of this generation isn’t the actual button CTA, as that can be tuned to your liking with optimization, but the ability to go off script and leverage the guiding prompt language.

This module also uses simple text input cleanup functions that improve the quality of generations in a production setting. GPT-3 (and NLP in general) works better when the inputs are consistent in structure and don’t contain noise. We’ve deployed a few functions to remove things such as HTML tags, bad characters from scraped data, and other notable patterns.
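A minimal cleanup sketch along those lines, using only the Python standard library. Which characters count as "bad" is an assumption here: control characters and the U+FFFD replacement character that broken decoding leaves behind.

```python
import html
import re
import unicodedata

HTML_TAG = re.compile(r"<[^>]+>")
WHITESPACE = re.compile(r"\s+")


def clean_input_text(raw: str) -> str:
    text = HTML_TAG.sub(" ", raw)               # strip HTML tags left over from scraping
    text = html.unescape(text)                  # &amp; -> &, &nbsp; -> non-breaking space
    text = unicodedata.normalize("NFKC", text)  # non-breaking space -> plain space, etc.
    text = text.replace("\ufffd", "")           # replacement char from bad decoding
    # Turn control/format characters (including newlines and tabs) into spaces.
    text = "".join(" " if unicodedata.category(ch).startswith("C") else ch for ch in text)
    return WHITESPACE.sub(" ", text).strip()    # collapse runs of whitespace


print(clean_input_text("<p>Fast&nbsp;&amp; accurate</p>\n\n<b>AI</b>\ufffd"))
```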

The process of designing and iterating on the prompt used to interact with GPT-3 has the highest correlation to successful and stable production use. This becomes especially relevant when using longer-form text to generate outputs and trying to take advantage of key biases in the underlying model’s learning. Using GPT-3 in the free-trial Playground is light years away from what experts are doing in a production setting.

We’ve already seen above the incredible difference in outputs between simple prompt language and expert prompt design. GPT-3 can reach a much deeper understanding of what a good completion is across a wider range of data variance, with fewer “crutches” such as fine-tuning and a huge prompt. The focus of building solid prompt language is to generate correct outputs with as little guidance from prompt examples as possible in a test environment. The reason is that the more it takes to generate a single accurate example, the harder it becomes to control the process that leads to accurate generations across data variance. At a high level the idea is similar to overfitting a model. Leaning on GPT-3's ability to actually understand the task, and using as much of its pre-training as possible before brute-forcing with larger prompts, usually results in higher quality products.

This use case makes prompt language optimization even more relevant, because the long-form nature of the required variables makes certain prompt biases more valuable to take advantage of. These biases can help or hurt a model's performance if they aren't optimized for during development. We’ll focus on optimizing the prompt variables and headers for accuracy by split-testing variations, just as you would different NLP models.

These examples from our GPT-3 summarization article show the sheer power and value of prompt language. Both are zero-shot prompts that rely heavily on the prompt language to understand the task. The first example is a bad summary that makes poor use of the prompt language. The second is a blog post summarization prompt that picks up on two separate topics in the same chunk and summarizes both. On top of that, it recognizes a proper noun and correctly correlates the information to it.

Another key focus during the prompt framework building process is the variable order for each input or prompt example. With as many user-provided variables as our CTA generator has, it’s important to understand that variable order affects how GPT-3 weighs the importance of information. This is a natural bias in prompt-based LLMs, so we include it as a step in the prompt design process.
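One way to split-test variable order is to render the same inputs in every field ordering and compare the generations. This is a hypothetical sketch: the field names, prompt labels, and the exhaustive `permutations` approach are assumptions for illustration (with more fields you would sample orderings rather than enumerate all of them).

```python
from dataclasses import asdict, dataclass
from itertools import permutations
from typing import Dict, List, Tuple


@dataclass
class CTAInputs:
    # Hypothetical field names for the inputs described earlier in this article.
    value_description: str
    use_case: str
    marketing_copy: str
    tone: str = ""  # optional field; skipped when empty


# Hypothetical labels that appear in the prompt for each field.
FIELD_LABELS: Dict[str, str] = {
    "value_description": "What we sell",
    "use_case": "CTA use case",
    "tone": "Tone of voice",
    "marketing_copy": "Marketing copy",
}


def render_prompt(inputs: CTAInputs, order: List[str], header: str) -> str:
    """Render the input fields in the given order, skipping empty optional fields."""
    values = asdict(inputs)
    body = "\n".join(
        f"{FIELD_LABELS[name]}: {values[name]}" for name in order if values[name]
    )
    return f"{header}\n\n{body}\nCall to action:"


def order_variants(inputs: CTAInputs, header: str) -> Dict[Tuple[str, ...], str]:
    """One prompt per field ordering, ready to split-test against each other."""
    return {
        order: render_prompt(inputs, list(order), header)
        for order in permutations(FIELD_LABELS)
    }


inputs = CTAInputs(
    value_description="Pumice.ai automates product categorization.",
    use_case="Button CTA going to product page",
    marketing_copy="Tag your whole catalog in minutes.",
)
variants = order_variants(inputs, "Write a CTA.")
```

Each variant would then be scored on the same chosen metric so the best-performing order can be kept.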

Great example of the generation change when changing variables.

Prompt optimization frameworks are one of the most important parts of moving to a production GPT-3 system and are used in similar auto-optimization pipelines. We’ll spend a decent part of our development process working to build these frameworks no matter the GPT-3 use case.

Prompt optimization is a trained algorithm built with the focus of being able to build a relevant prompt dynamically with prompt examples and language based on a provided input. The goal of this is to go from a static prompt for every user input to a prompt that best fits each input.

The goal of using a prompt optimization framework is to improve accuracy by optimizing GPT-3's ability to handle a wider range of data variance in the input. Prompt examples are used to create a “few-shot” environment and show GPT-3 how to complete the task correctly. In this case, they guide GPT-3 to a generated call to action that we deem correct.
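The article's prompt optimization is a trained algorithm; as a much simpler stand-in, the sketch below picks few-shot examples by bag-of-words cosine similarity between the user's copy and each stored example. The `PromptExample` type, the similarity measure, and the prompt layout are all assumptions, not the Width.ai method.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class PromptExample:
    copy: str  # marketing copy the example was generated from
    cta: str   # the CTA we consider a good completion


def _bag_of_words(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def select_examples(user_copy: str, database: List[PromptExample], k: int = 2) -> List[PromptExample]:
    """Pick the k stored examples whose copy is most similar to the user's copy."""
    query = _bag_of_words(user_copy)
    ranked = sorted(
        database,
        key=lambda ex: cosine_similarity(query, _bag_of_words(ex.copy)),
        reverse=True,
    )
    return ranked[:k]


def build_few_shot_prompt(header: str, user_copy: str, database: List[PromptExample], k: int = 2) -> str:
    shots = [
        f"Marketing copy: {ex.copy}\nCall to action: {ex.cta}"
        for ex in select_examples(user_copy, database, k)
    ]
    # The user's input goes last with an open slot for GPT-3 to complete.
    shots.append(f"Marketing copy: {user_copy}\nCall to action:")
    return header + "\n\n" + "\n\n".join(shots)


db = [
    PromptExample("Real estate listings for family homes", "Tour homes this weekend"),
    PromptExample("Ecommerce product categorization with AI", "Automate your catalog"),
    PromptExample("Webinar on email marketing", "Save my seat"),
]
prompt = build_few_shot_prompt("Write a CTA.", "AI product categorization for ecommerce brands", db, k=1)
```

The point is that the prompt changes per input: an ecommerce query pulls the ecommerce example instead of a fixed, possibly off-niche one.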

Prompt optimization will address the two main issues that static prompt building cannot fully address in a production setting. The first is that our examples provided in the prompt are not always relevant to a given user input. It’s impossible to account for every user input out of the infinite variations a user could provide in a static prompt. Here are a few common variations that cause GPT-3 to generate less than stellar CTAs.

1. Wide shifts in the marketing copy length

2. Changes to the niche or industry between examples and input

3. Different ways the users provide variable inputs. I’m sure you’ve seen the different ways someone can answer a question in a fill-in box.

4. Incorrect usage of the variables

Here’s a quick look at how the generated output differs depending on how a user fills in a variable. Now let’s look at the second main issue that prompt optimization frameworks allow us to address.

The dynamic nature of prompt optimization can also be taken advantage of: each GPT-3 prompt can be built so that the underlying model has an easier path to what we consider a strong generation. Once we’ve chosen a metric that we feel is a strong representation of a quality generation, we can use it as the final decider of when to make optimizations.

The prompt examples database is the most important factor behind the quality of our prompts relative to a given input set. It holds our entire set of what we consider good examples of going from inputs to generated CTAs, and we leverage custom filtering logic and prompt optimization algorithms to select from it. These two algorithms make the two sides (GPT-3 and the database) useful to each other and decide how a prompt is structured and what “guidance” is provided to the base GPT-3 model. That being said, if we don’t have solid examples of how to complete the task for a given user input, these two algorithms can only do so much with what they have.

We have developed a system that allows us to constantly optimize these two keys to prompt optimization based on our chosen metric. Each time a new CTA generator example with our chosen metric is uploaded to our “/optimize” endpoint two things happen:

First, we use an algorithm to evaluate the uploaded example against the ones in the database and decide whether to remove or update any existing examples. This lets us get rid of worse examples that are relevant to the new one, so future user inputs that would have used them use the new, “better” example instead. This algorithm evaluates both the key metric and other related factors to filter down to in-domain relevant examples.
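A hypothetical sketch of the database update triggered by an `/optimize` upload. It assumes examples carry a use case label and an optional metric, treats word-level Jaccard similarity above a threshold as "relevant to the new example", and only replaces overlapping examples that score worse. The real algorithm also weighs other factors the article does not specify.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ScoredExample:
    use_case: str                   # e.g. "Button CTA going to product page"
    copy: str
    cta: str
    metric: Optional[float] = None  # e.g. conversion rate; not every example has one


def _words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    wa, wb = _words(a), _words(b)
    union = wa | wb
    return len(wa & wb) / len(union) if union else 0.0


def upload_example(database: List[ScoredExample], new: ScoredExample, threshold: float = 0.5) -> List[ScoredExample]:
    """Hypothetical /optimize update: replace worse in-domain look-alikes with the new example."""
    if new.metric is None:
        raise ValueError("Uploaded examples must carry the key metric.")
    overlapping = [
        ex for ex in database
        if ex.use_case == new.use_case
        and ex.metric is not None  # unscored examples can't be compared, so they stay
        and jaccard(ex.copy, new.copy) >= threshold
    ]
    # If an overlapping example already scores at least as well, keep the database as-is.
    if any(ex.metric >= new.metric for ex in overlapping):
        return list(database)
    to_drop = {id(ex) for ex in overlapping}
    return [ex for ex in database if id(ex) not in to_drop] + [new]


db = [
    ScoredExample("landing page", "AI product tagging for ecommerce brands", "Start tagging", 0.02),
    ScoredExample("email", "Join our webinar", "Save my seat", 0.05),
]
better = ScoredExample("landing page", "AI product tagging for ecommerce stores", "Tag your catalog in minutes", 0.04)
updated = upload_example(db, better)
```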

This optimization quickly affects future generations, given how strongly each prompt example correlates with the generated CTA. Not every prompt example will have a key metric score, and none is required to.

The second task performed when a new example is uploaded is to optimize the prompt optimization algorithms used to decide how to build our GPT-3 prompt. This fine-tuning method allows us to constantly be updating the algorithm's ability to correlate the database examples to a high quality CTA. This real user feedback is vital when tuning our models' ability a bit more towards how users actually use the product, which is always less clean than how our data is structured in a development environment.

These are key tasks when we talk about constantly improving our call to action generator. Being able to auto-improve the two key components behind a high quality generation is a gamechanger for a production system built on a prompt-based LLM. Real-life metrics are the best way to understand the actual accuracy of these text generation models. The metric should reflect whatever the goal of your generation is, and it can be as simple as user acceptance of results. Say, for instance, you generate 5 different summaries for a legal document. If your goal is to improve the user's experience, your goal metric can be the number of summaries the user selects. Depending on the goal of your CTA generation tool, your goal metric might be something different.
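For the "number of selected outputs" style of metric, a minimal sketch could attribute each run's selections to the prompt examples used in that run. The run format below is a hypothetical assumption; any attribution scheme would work as long as it is applied consistently.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# Hypothetical run format: (ids of prompt examples used, outputs generated, outputs the user selected)
Run = Tuple[List[str], int, int]


def acceptance_rates(runs: Iterable[Run]) -> Dict[str, float]:
    """Share of generated outputs the user selected, attributed to each prompt example used."""
    shown: Dict[str, int] = defaultdict(int)
    picked: Dict[str, int] = defaultdict(int)
    for example_ids, n_generated, n_selected in runs:
        for ex_id in example_ids:
            shown[ex_id] += n_generated
            picked[ex_id] += n_selected
    return {ex_id: picked[ex_id] / shown[ex_id] for ex_id in shown if shown[ex_id] > 0}


# Two runs of 5 generations each; example "a" was in both prompts, "b" only in the first.
rates = acceptance_rates([(["a", "b"], 5, 2), (["a"], 5, 0)])
```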

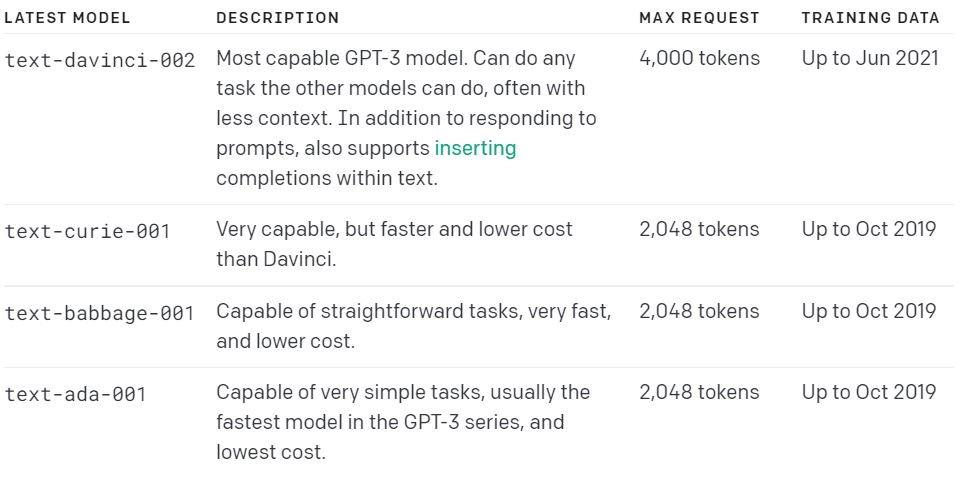

This might be the easiest step of them all! Now that we’ve built our GPT-3 prompt, we can run it through GPT-3. We’re going to use the latest davinci model, which supports up to roughly 4,000 tokens shared between the prompt and the generated completion.
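Because the prompt and the completion share the context window, it helps to check the budget before each call. This sketch uses the article's ~4,000 figure and a rough four-characters-per-token heuristic for English text; both constants are assumptions, and a real tokenizer should replace the estimate in production.

```python
import math

CONTEXT_TOKENS = 4000  # the article's figure; check the exact limit for the model you call
CHARS_PER_TOKEN = 4    # rough heuristic for English text, not a real tokenizer


def estimate_tokens(text: str) -> int:
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def max_completion_tokens(prompt: str, reserve: int = 0) -> int:
    """Tokens left for the completion after the (estimated) prompt and an optional safety margin."""
    remaining = CONTEXT_TOKENS - estimate_tokens(prompt) - reserve
    if remaining <= 0:
        raise ValueError("Prompt too long: drop prompt examples or shorten the copy.")
    return remaining
```

When the budget runs out, dropping the least relevant prompt examples is usually a better lever than truncating the user's marketing copy.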

The postprocessing module focuses on taking our raw GPT-3 text generation output and performing any cleanup or downstream tasks. We use this module for just about any GPT-3 pipeline as most use cases require cleanup or formatting of outputs.
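A small postprocessing sketch, assuming the model returns CTAs as a numbered or bulleted list: it strips list markers and wrapping double quotes, drops duplicates and overly long lines, and returns clean CTA strings. The list format and the 120-character cap are assumptions.

```python
import re
from typing import List

LIST_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*\u2022])\s*")
QUOTES = "\"\u201c\u201d"


def parse_ctas(raw_output: str, max_chars: int = 120) -> List[str]:
    """Turn a raw list-style completion into clean, de-duplicated CTA strings."""
    seen = set()
    ctas: List[str] = []
    for line in raw_output.splitlines():
        text = LIST_MARKER.sub("", line).strip().strip(QUOTES).strip()
        if not text or len(text) > max_chars:
            continue  # skip blank lines and rambling completions
        key = text.lower()
        if key in seen:
            continue  # drop case-insensitive duplicates
        seen.add(key)
        ctas.append(text)
    return ctas


raw = '1. "Start tagging products today"\n2. Start tagging products today\n\n3) See Pumice.ai in action'
print(parse_ctas(raw))
```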

Token based confidence metrics allow us to put a reasonable confidence value on the outputs of our CTA generator. This is incredibly useful in allowing you to regenerate poor results, change runtime variables, collect feedback, assist in auto-optimization, and understand exactly how well your GPT-3 pipeline is performing in production. This is a custom ML algorithm that evaluates the CTA results relative to token sequences and pattern recognition for the same use case.
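The article's confidence metric is a custom ML algorithm. As a far simpler swapped-in baseline, you can score a generation by the geometric mean of its token probabilities, computed from per-token log probabilities (the Completions API can return these through its `logprobs` parameter). The 0.5 threshold below is an arbitrary assumption.

```python
import math
from typing import List


def sequence_confidence(token_logprobs: List[float]) -> float:
    """Geometric mean of token probabilities: exp(mean natural-log probability)."""
    if not token_logprobs:
        return 0.0
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def needs_regeneration(token_logprobs: List[float], threshold: float = 0.5) -> bool:
    """Flag low-confidence CTAs for regeneration or review."""
    return sequence_confidence(token_logprobs) < threshold
```

Averaging in log space keeps long and short CTAs comparable, since a raw product of probabilities always shrinks as the sequence gets longer.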

Now that we’ve run our GPT-3 model and generated a few CTAs, we want to store a few key database variables that make our auto-optimization and general product development process easier. These variables focus on creating as much context as possible around this exact run.

- User inputs

- Prompt used

- Generated outputs

- Metric scoring used to build the prompt

- Confidence metrics and a few other key values around the generated tokens

The first token tells you a ton about your generated CTA, and can be used to further understand the correlation between model variables and a quality output.
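A hypothetical record for one run, covering the stored values listed above plus the first token's probability. Field names are assumptions; `token_logprobs` is assumed to hold natural-log probabilities for the generated tokens.

```python
import json
import math
from dataclasses import asdict, dataclass, field
from typing import Any, Dict, List


@dataclass
class GenerationRun:
    """Hypothetical record of one CTA generation run, stored for auto-optimization."""
    user_inputs: Dict[str, Any]
    prompt: str
    outputs: List[str]
    example_metric_scores: List[float]  # metric scores of the examples used to build the prompt
    token_logprobs: List[float]         # natural-log probabilities of the generated tokens
    first_token_prob: float = field(init=False, default=0.0)

    def __post_init__(self) -> None:
        # Keep the first token's probability as its own field for later correlation analysis.
        if self.token_logprobs:
            self.first_token_prob = math.exp(self.token_logprobs[0])

    def to_json(self) -> str:
        return json.dumps(asdict(self))


run = GenerationRun(
    user_inputs={"use_case": "Button CTA going to product page"},
    prompt="prompt text",
    outputs=["Start tagging"],
    example_metric_scores=[0.04],
    token_logprobs=[math.log(0.8), math.log(0.9)],
)
```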

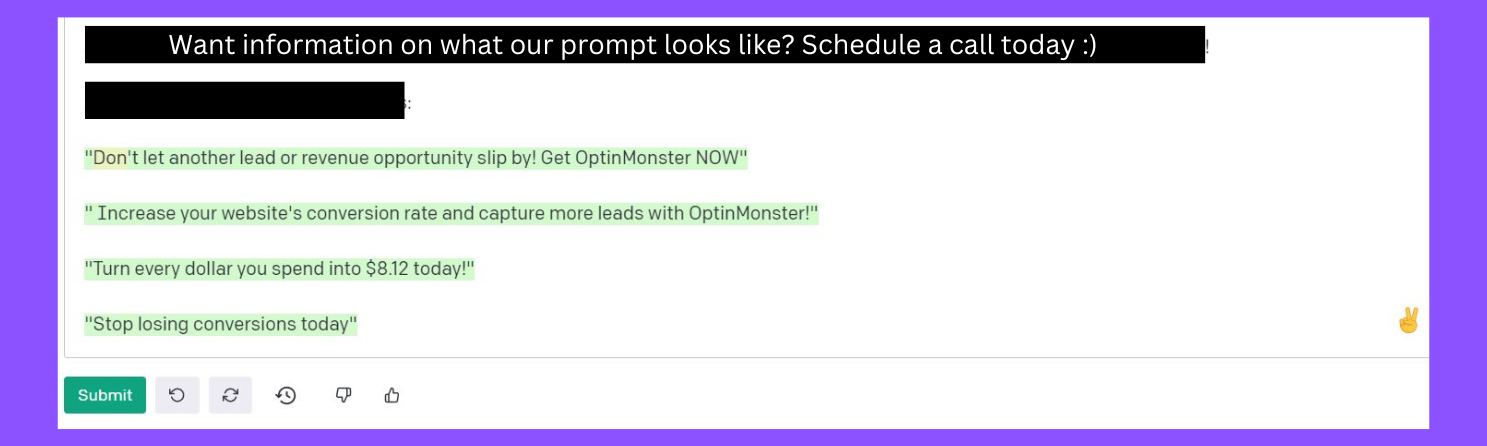

Easily one of my favorite CTA generations from the product. It focuses on the OptinMonster homepage and generates a couple of high-variance CTAs. The goal is different tones and lengths to provide options. These could be used at different points in the landing page copy based on the surrounding information and where the reader is in the copy.

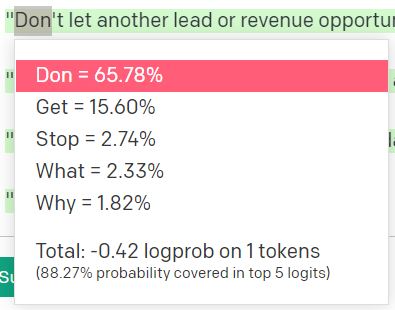

The most important parameter offered by GPT-3 and other prompt-based models is temperature. Temperature rescales the model's logits before the next token is sampled, which controls how often GPT-3 chooses a lower-probability token. The higher the temperature, the more likely the model is to choose a lower-probability token. The percentages are the probabilities GPT-3 assigns to each candidate for the next token in the sequence.
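The effect of temperature can be shown with a plain softmax: dividing the logits by the temperature before normalizing flattens the distribution when the temperature is above 1 and sharpens it below 1. This is a generic illustration, not GPT-3's internal code, and the logits are made up.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities after dividing by the temperature."""
    if temperature <= 0:
        # APIs typically treat temperature 0 as greedy decoding; that case isn't modeled here.
        raise ValueError("temperature must be positive")
    scaled = [logit / temperature for logit in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]
low = softmax_with_temperature(logits, 0.5)   # sharper: top token dominates
high = softmax_with_temperature(logits, 1.5)  # flatter: unlikely tokens gain probability
```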

This has a huge impact on the copy generation of our pipeline. Generally speaking sequences with lower probability tokens are more unique than the examples used or any fine-tuning data. This means you usually get more variability in the output compared to what you’ve seen before, which can be a good thing or bad thing.

Frequency penalty is a parameter that decreases the chance of phrases being repeated verbatim. It works by penalizing each candidate token based on how often that token already appears in the text so far. This can be a valuable parameter if you want to add a bit of variability when generating content in a list format.
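As OpenAI's API documentation describes it, the frequency penalty subtracts the penalty multiplied by the token's count so far from that token's logit. A minimal sketch of that adjustment on a toy vocabulary (the tokens and numbers are made up):

```python
from collections import Counter
from typing import Dict, List


def apply_frequency_penalty(logits: Dict[str, float], generated: List[str], frequency_penalty: float) -> Dict[str, float]:
    """Lower each candidate's logit by penalty * (times it already appears in the generated text)."""
    counts = Counter(generated)
    return {token: logit - frequency_penalty * counts[token] for token, logit in logits.items()}


adjusted = apply_frequency_penalty({"Start": 2.0, "Try": 1.5}, ["Start", "Start", "now"], 0.5)
```

After two uses of "Start", its logit drops below "Try", so the next list item is more likely to open differently.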

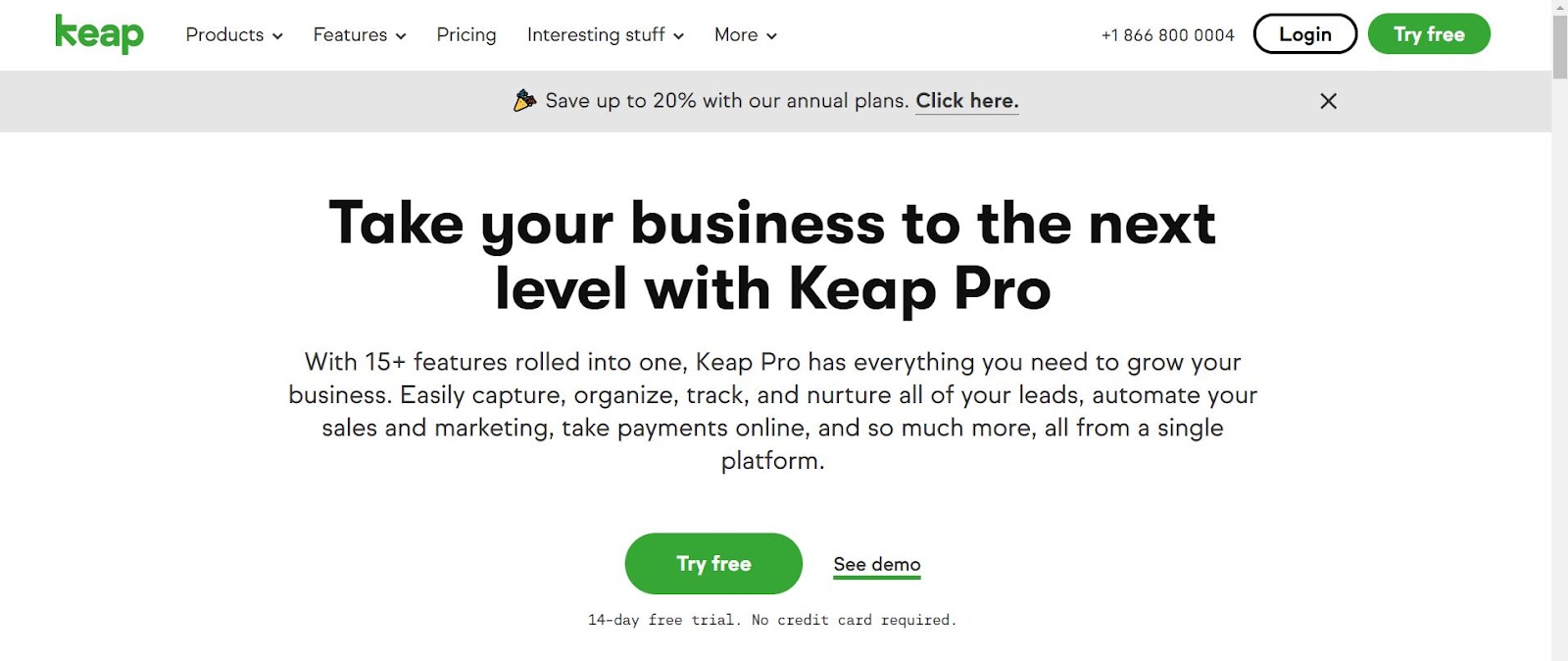

Example CTAs generated for an ecommerce product page. These are longer CTAs with the goal of being subtitles to help drive the buyer closer to the actual button (except for the one button CTA at the bottom).

Width.ai builds custom NLP software (like this CTA tool!) for businesses to leverage internally or as a part of their product. Schedule a call today and let’s talk about how we can get you going with this exact CTA generator, or any of the other popular GPT-3 based products.