The Exact Steps to Implement Custom AI Shopify Chatbots for Customers

Use AI-powered chatbots built on large language models to help your e-commerce customers find exactly the products they have in mind in your shop.

As more teams fine-tune LLMs on domain-specific data for their NLP workflows, attention has shifted toward open-source LLMs and how easily they can be fine-tuned. Fine-tuning is one of the most effective ways to steer a model toward your specific use case. Open-source LLMs keep that process cost-effective and make it clear how the model was trained and on what data, which is valuable when you steer it toward your domain.

In this article, we dive into DollyV2, a self-hostable, open-source LLM that is licensed for commercial use. We cover:

DollyV2 (or more formally, Dolly 2.0) is a set of three LLMs from Databricks that are tuned to follow human instructions, much like OpenAI's ChatGPT.

But unlike ChatGPT and other models that have restrictive licenses or are offered only as managed services, DollyV2 and its base models are released under the permissive Apache 2.0 license, which allows commercial use.

The DollyV2 models use EleutherAI's Pythia models as their backbones. EleutherAI, a non-profit AI research group, is also behind the GPT-J and GPT-Neo models.

To create the DollyV2 models, Databricks fine-tuned three of the pre-trained Pythia models on a custom human instruction dataset, databricks-dolly-15k, prepared by their employees through gamified crowdsourcing.

All three DollyV2 models are available from Databricks on the Hugging Face Hub. They differ in their parameter counts and, therefore, in their generative capabilities:

DollyV2 offers multiple benefits to any company that wants to integrate private LLMs into their NLP systems.

Unlike with ChatGPT and similar managed LLMs, you control the fine-tuning process for the pre-trained open-source models. You don't pay per token or per record, and you have full access to training metrics and evaluation results. Your data scientists will appreciate the flexibility and the visibility into what is going on.

You can deploy the DollyV2 models on your preferred cloud or on-premises infrastructure. When you need lower latency or higher throughput, you can scale up or scale out on demand by adding infrastructure. For example, an e-commerce company can scale up its DollyV2-based customer chatbot during peak shopping periods and scale it down afterward. With managed LLM services like ChatGPT, the provider controls the serving infrastructure, not you.

If you're a financial institution or a health care provider that must comply with data privacy and confidentiality requirements, models like DollyV2 are easier to secure than externally hosted managed LLMs. You can fine-tune DollyV2 without exposing confidential data to any other company. The same applies at inference time: the data never needs to leave your servers.

With managed services like ChatGPT, you have to send your data to the provider's systems and trust the provider to maintain your data security posture.

DollyV2's Apache 2.0 license permits you to use the models for commercial purposes, subject only to its attribution and notice requirements. You are free to sell products or deploy services that use these models. Not every open-source LLM offers this: some licenses require royalties or restrict commercial use.

In this section, we examine how the most capable DollyV2 model, the dolly-v2-12b, fares in common natural language processing use cases.

The illustration below depicts a chat session with the DollyV2 model:

Some positive observations:

Some negative observations:

What's not obvious in the illustration above is that DollyV2 can't hold a conversation out of the box. It's a stateless model, which means it doesn't remember previous queries or responses. Every query is treated as a new conversation.

To overcome this, you can use LangChain's conversation chain with buffer memory. The buffer stores the sequence of queries and replies in your application's memory and includes the entire previous conversation with every new query. This gives DollyV2 the earlier context it needs to resolve anaphora (references such as "it" or "that one") correctly.
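To make the idea concrete, here is a minimal, dependency-free sketch of what a buffer memory does. It is not LangChain's implementation. The `ConversationBuffer` class, its `Human:`/`AI:` turn labels, and the `generate` callable (a stand-in for a call to DollyV2) are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ConversationBuffer:
    """Hypothetical buffer memory: keeps every (query, reply) pair in process memory."""

    turns: List[Tuple[str, str]] = field(default_factory=list)

    def build_prompt(self, query: str) -> str:
        # Replay the entire previous conversation before the new query, so that a
        # stateless model sees the earlier context on every call.
        lines = []
        for past_query, past_reply in self.turns:
            lines.append(f"Human: {past_query}")
            lines.append(f"AI: {past_reply}")
        lines.append(f"Human: {query}")
        lines.append("AI:")
        return "\n".join(lines)

    def ask(self, query: str, generate: Callable[[str], str]) -> str:
        # `generate` is a placeholder for the actual model call (e.g. a DollyV2 pipeline).
        reply = generate(self.build_prompt(query)).strip()
        self.turns.append((query, reply))
        return reply


if __name__ == "__main__":
    # A fake "model" that only reports how many prompt lines it received.
    def fake_model(prompt: str) -> str:
        return f"(saw {len(prompt.splitlines())} prompt lines)"

    memory = ConversationBuffer()
    print(memory.ask("Do you sell a waterproof hiking jacket?", fake_model))
    print(memory.ask("Is it available in blue?", fake_model))
```

The sketch replays the whole history on every call, so the prompt grows with each turn. In long sessions you would eventually need to truncate or summarize older turns to stay within the model's context window.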

When reviewing LLMs, I like to use medical reports as a test case. A single report often mixes multiple page formats and contains a lot of noise relative to the important information. This gives me a good gauge of how well the model handles rough inputs.

For the medical report below, we asked it to generate a simple summary:

It generated the following summary:

Observations:

Other prompts also generated summaries that contained grammatical errors or hallucinations (marked in red in the illustrations below):

Overall, DollyV2's chatbot capabilities are adequate. Its summarization capabilities, however, are not ready for production: the summaries tend to contain syntactic flaws, grammatical errors, or hallucinations. We suggest additional fine-tuning on summarization datasets.

The DollyV2 and Pythia models are all based on the GPT-NeoX model architecture and modeled by the Transformers framework's GPTNeoXForCausalLM.

The three DollyV2 models differ in their internals as shown in this table:

Each hidden layer consists of a multi-head attention unit and a multi-layer perceptron (MLP). The models use different hidden sizes, so their attention projections and MLP widths differ, and each model has a different number of parameters per hidden layer. The dolly-v2-12b model has almost four times as many parameters per layer as the dolly-v2-3b model.
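The per-layer arithmetic can be sketched as follows. The sketch assumes the standard GPT-NeoX layer layout used by Pythia:

- a fused query/key/value projection,
- an attention output projection,
- an MLP whose inner width is four times the hidden size,
- two LayerNorms,

all with bias terms. Under those assumptions, a layer with hidden size h has 12h^2 + 13h parameters, regardless of the number of attention heads. The hidden sizes used below are 2,560 for the Pythia-2.8B backbone of dolly-v2-3b and 5,120 for the Pythia-12B backbone of dolly-v2-12b. Verify them against each model's `config.json` before relying on them.

```python
def gpt_neox_layer_params(hidden: int, mlp_ratio: int = 4) -> int:
    """Parameter count of one GPT-NeoX-style layer under the assumed layout above."""
    inner = mlp_ratio * hidden                  # assumed MLP inner width (4h for Pythia)
    qkv = hidden * 3 * hidden + 3 * hidden      # fused Q, K, V projection + bias
    attn_out = hidden * hidden + hidden         # attention output projection + bias
    mlp_up = hidden * inner + inner             # h -> 4h projection + bias
    mlp_down = inner * hidden + hidden          # 4h -> h projection + bias
    layer_norms = 2 * (2 * hidden)              # two LayerNorms, each with weight and bias
    return qkv + attn_out + mlp_up + mlp_down + layer_norms


if __name__ == "__main__":
    small = gpt_neox_layer_params(2560)  # dolly-v2-3b (Pythia-2.8B backbone)
    large = gpt_neox_layer_params(5120)  # dolly-v2-12b (Pythia-12B backbone)
    print(f"dolly-v2-3b:  {small:,} parameters per layer")   # 78,676,480
    print(f"dolly-v2-12b: {large:,} parameters per layer")   # 314,639,360
    print(f"ratio: {large / small:.3f}")                      # 3.999
```

The dominant terms scale with h^2, so doubling the hidden size roughly quadruples the per-layer count. This is why dolly-v2-12b's layers come out almost four times larger.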

The layers of the dolly-v2-12b model are depicted below:

Two datasets are in play when training or using DollyV2. One is the dataset used to train the underlying Pythia language model from scratch. The other is the dataset used to fine-tune it to follow instruction prompts. We explore both datasets and their implications on the model's capabilities below.

The Pile, which was used to pre-train Pythia, consists of diverse, mostly English text corpora totaling around 880 gigabytes. It includes:

Databricks-dolly-15k is a dataset for instruction prompting created by Databricks employees through an internal gamified crowdsourcing process.

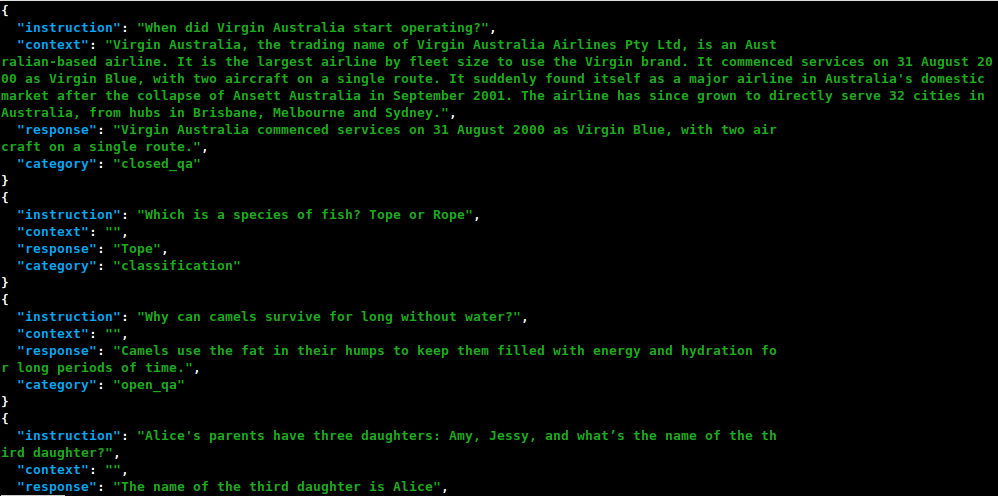

It contains roughly 15,000 entries covering task categories outlined in the InstructGPT paper, such as:

Each entry consists of:

Some examples are shown below:

Sample entries in the databricks-dolly-15k dataset (Source: databricks-dolly-15k)
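You may want to inspect the dataset or pull out one task type for further fine-tuning, such as summarization. A sketch like the one below may help. It assumes the dataset is stored as JSON Lines, with one record per line and the fields `instruction`, `context`, `response`, and `category`. The two inline records are invented placeholders, not real dataset entries, and `read_records`/`by_category` are hypothetical helper names.

```python
import json
from collections import Counter
from typing import Dict, Iterable, List

# Invented placeholder records in the assumed JSON Lines layout (not real dataset rows).
SAMPLE_LINES = [
    '{"instruction": "Summarize the return policy.", '
    '"context": "Unworn items can be returned within 30 days of delivery.", '
    '"response": "Unworn items can be returned for 30 days.", "category": "summarization"}',
    '{"instruction": "Suggest three gifts for a home cook.", "context": "", '
    '"response": "A cast-iron pan, a chef knife, and a spice rack.", "category": "brainstorming"}',
]


def read_records(lines: Iterable[str]) -> List[Dict[str, str]]:
    """Parse one JSON object per non-empty line (works on a list or an open file)."""
    return [json.loads(line) for line in lines if line.strip()]


def by_category(records: List[Dict[str, str]], category: str) -> List[Dict[str, str]]:
    """Keep only the records of one task category, e.g. for targeted fine-tuning."""
    return [r for r in records if r["category"] == category]


if __name__ == "__main__":
    records = read_records(SAMPLE_LINES)
    print(Counter(r["category"] for r in records))
    print([r["instruction"] for r in by_category(records, "summarization")])
```

With a local copy of the dataset, you would pass an open file handle to `read_records` instead of the sample list.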

The dataset is available under the Creative Commons Attribution-ShareAlike 3.0 Unported License. The license permits modification and commercial use as long as you give attribution and distribute any adapted versions under the same license.

The entries in this dataset are used to fine-tune a pre-trained LLM like Pythia toward instruction following. Fine-tuning adjusts the model's weights so that it learns to generate appropriate responses to the user's instructions.

LLMs can be fine-tuned for instruction-following using one or both of:

Databricks created the DollyV2 models from their Pythia backbones using supervised fine-tuning on the databricks-dolly-15k dataset. The instructions and contexts in the dataset serve as the inputs, and the responses serve as the expected outputs.
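In supervised fine-tuning, each record's instruction and optional context are combined with its response into a single training string. The template strings below are modeled on the prompt format in Databricks' dolly repository. Treat the exact wording, and the hypothetical `format_training_example` and `format_inference_prompt` helpers, as assumptions to check against the repository.

```python
# Template pieces modeled on the Dolly prompt format (exact strings are an assumption).
INTRO = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)
INSTRUCTION_KEY = "### Instruction:"
INPUT_KEY = "Input:"
RESPONSE_KEY = "### Response:"
END_KEY = "### End"


def format_training_example(instruction: str, response: str, context: str = "") -> str:
    """Hypothetical helper: render one record as a single supervised training string."""
    parts = [INTRO, f"{INSTRUCTION_KEY}\n{instruction}"]
    if context.strip():
        # Records with a context passage (e.g. summarization) include it as the input.
        parts.append(f"{INPUT_KEY}\n{context}")
    parts.append(f"{RESPONSE_KEY}\n{response}")  # the expected output the loss targets
    parts.append(END_KEY)
    return "\n\n".join(parts)


def format_inference_prompt(instruction: str, context: str = "") -> str:
    """Same layout, stopping after the response key so the model writes the response."""
    parts = [INTRO, f"{INSTRUCTION_KEY}\n{instruction}"]
    if context.strip():
        parts.append(f"{INPUT_KEY}\n{context}")
    parts.append(f"{RESPONSE_KEY}\n")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(format_training_example(
        instruction="Summarize the customer's message in one sentence.",
        context="I ordered the blue jacket in size M but received size L. Can I exchange it?",
        response="The customer received the wrong size and wants to exchange it.",
    ))
```

Many training setups compute the loss only on the tokens after the response key, so the model is not trained to reproduce the instruction itself. Confirm how any specific training script handles this before relying on it.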

Training uses the AdamW optimizer to minimize the token-level cross-entropy loss between the model's predicted next-token probabilities and the tokens of the expected responses. This updates the attention and multi-layer perceptron weights in the model's layers toward generating the expected responses.
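Both ingredients can be illustrated on plain Python lists:

- a token-level cross-entropy that skips masked positions, using the -100 ignore index that PyTorch's `CrossEntropyLoss` uses by default,
- a single AdamW update with decoupled weight decay.

This is a teaching sketch, not DollyV2's training code. The default hyperparameters are placeholders, and real training runs the same math on GPU tensors through a framework.

```python
import math
from typing import List, Sequence, Tuple

IGNORE_INDEX = -100  # positions with this label (e.g. prompt tokens) add nothing to the loss


def token_cross_entropy(logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean negative log-likelihood of each target token under softmax(logits).

    Assumes logits[t] already predicts targets[t] (i.e. labels were shifted by one
    position for causal language modeling).
    """
    total, count = 0.0, 0
    for row, target in zip(logits, targets):
        if target == IGNORE_INDEX:
            continue
        peak = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_sum_exp - row[target]
        count += 1
    return total / count if count else 0.0


def adamw_step(
    params: List[float], grads: Sequence[float], m: List[float], v: List[float],
    step: int, lr: float = 1e-5, betas: Tuple[float, float] = (0.9, 0.999),
    eps: float = 1e-8, weight_decay: float = 0.0,
) -> None:
    """One AdamW update (step counts from 1); modifies params, m, and v in place."""
    b1, b2 = betas
    for i, g in enumerate(grads):
        m[i] = b1 * m[i] + (1 - b1) * g          # first-moment (mean) estimate
        v[i] = b2 * v[i] + (1 - b2) * g * g      # second-moment (uncentered variance) estimate
        m_hat = m[i] / (1 - b1 ** step)          # bias corrections for early steps
        v_hat = v[i] / (1 - b2 ** step)
        # Decoupled weight decay: shrink the weight directly instead of adding it to g.
        params[i] -= lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * params[i])


if __name__ == "__main__":
    # Two token positions; the second is a masked prompt token and is ignored.
    print(token_cross_entropy([[2.0, 0.5, -1.0], [0.0, 3.0, 1.0]], [0, IGNORE_INDEX]))
    weights, m, v = [0.5, -0.2], [0.0, 0.0], [0.0, 0.0]
    adamw_step(weights, [0.1, -0.3], m, v, step=1, lr=1e-3, weight_decay=0.01)
    print(weights)
```

On the first step, the bias-corrected update moves each weight by roughly `lr` in the direction opposite its gradient. This scale-free behavior is part of why Adam-style optimizers are the usual choice for fine-tuning LLMs.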

Because these are large models, training must be distributed across multiple GPUs. To do this efficiently, the DollyV2 training process relies on the DeepSpeed library.
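As a rough illustration, a DeepSpeed configuration for ZeRO-sharded, bf16 training could look like the dictionary below, serialized to the JSON that DeepSpeed reads. The keys are standard DeepSpeed config options. The values are placeholders, not the settings Databricks used: ZeRO stage 3, the batch sizes, the learning rate, and the GPU count. The `make_deepspeed_config` helper is hypothetical.

```python
import json


def make_deepspeed_config(micro_batch_per_gpu: int, grad_accum_steps: int, num_gpus: int) -> dict:
    """Build a placeholder DeepSpeed config; values are illustrative, not Dolly's."""
    return {
        # DeepSpeed expects train_batch_size to equal
        # micro batch per GPU x gradient accumulation steps x number of GPUs.
        "train_batch_size": micro_batch_per_gpu * grad_accum_steps * num_gpus,
        "train_micro_batch_size_per_gpu": micro_batch_per_gpu,
        "gradient_accumulation_steps": grad_accum_steps,
        "gradient_clipping": 1.0,
        "bf16": {"enabled": True},
        "optimizer": {
            "type": "AdamW",
            "params": {"lr": 5e-6, "betas": [0.9, 0.999], "eps": 1e-8, "weight_decay": 0.0},
        },
        "zero_optimization": {
            # Stage 3 shards parameters, gradients, and optimizer states across GPUs.
            "stage": 3,
            "overlap_comm": True,
            "contiguous_gradients": True,
        },
    }


if __name__ == "__main__":
    config = make_deepspeed_config(micro_batch_per_gpu=4, grad_accum_steps=8, num_gpus=8)
    print(json.dumps(config, indent=2))  # save this as the JSON config file
```

You would save the JSON to a file and pass it to the `deepspeed` launcher. With the Hugging Face Trainer, you can supply it through the `deepspeed` argument of `TrainingArguments`.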

The available pre-trained DollyV2 models are not ideal for some use cases without fine-tuning:

In addition, because DollyV2 is a research-oriented model under development, it may show the following problems listed by Databricks:

Given its training data and known shortcomings, here are some use cases for which DollyV2 may work well after additional fine-tuning on related datasets:

While ChatGPT is good for many use cases, most businesses will have some unique needs and workflows that ChatGPT can't satisfy. Free, open-source LLMs like DollyV2 are critical to making highly customized models practical for businesses.

At Width, we have years of experience in customizing GPT and other language models for businesses. Contact us!