
Using large language models (LLMs) to process long-form content like legal contracts, financial reports, books, and web content can bring large productivity and efficiency gains. Unfortunately, it's not a fully solved problem. LLMs still face difficulties and drawbacks when processing lengthy documents.

In this article, we review the kinds of problems LLMs face and explore a technique that prompts large language models to plan and execute actions over long documents.

When you use LLMs to process long documents, you often run into issues that call for workarounds. Some stem from context length limits, which you can overcome to an extent with chunking strategies.
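As a rough illustration of one such chunking strategy, the sketch below splits a document into overlapping windows. It assumes whitespace-separated words are an acceptable stand-in for model tokens. The names `chunk_words`, `size`, and `overlap` are hypothetical, and a real pipeline would count tokens with the model's own tokenizer.

```python
def chunk_words(text: str, size: int = 200, overlap: int = 20) -> list[str]:
    """Split text into overlapping word windows (words only approximate tokens)."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap  # how far each window advances
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):  # this window already reached the end
            break
    return chunks
```

The overlap keeps a sentence that straddles a boundary visible in both neighboring chunks, but it does nothing for connections between distant sections, which is the kind of drawback discussed next.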

However, there are other semantic-level drawbacks even when the document satisfies token limits. For example, if a particular concept is referred to in many sections due to its connections with other concepts, we find that straightforward prompts often ignore entire connections and sections.

Additionally, Liu et al. showed that the accuracy of LLM-generated answers degrades as contexts get longer and when the relevant information sits in the middle of a long context.

Planning and executable actions for reasoning over long documents (PEARL) is a technique that addresses such drawbacks through more sophisticated reasoning than naive prompts.

PEARL is a strategy to reason over long documents using specially crafted prompts and a small number of few-shot, in-context examples.

PEARL combines task-specific decomposition with prompt generation to create specially crafted prompts that help LLMs process complex semantic connections across the document.

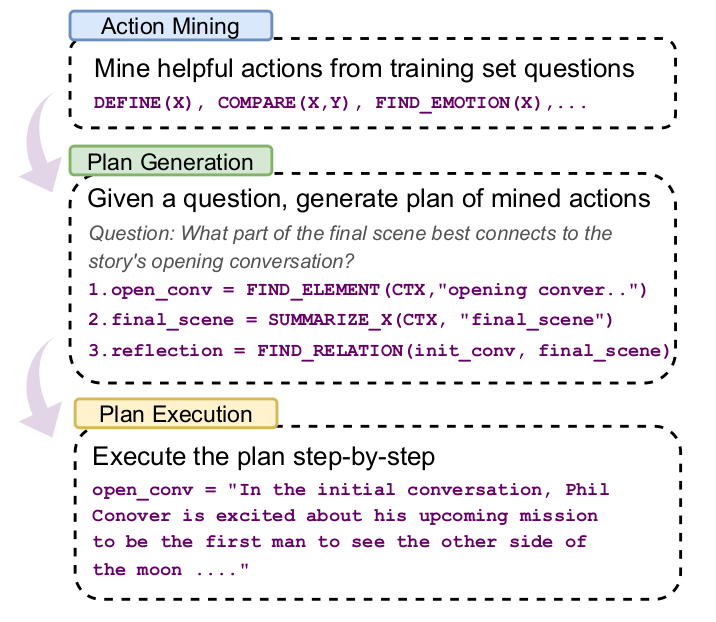

Briefly, PEARL consists of three high-level stages: action mining, plan generation, and plan execution.

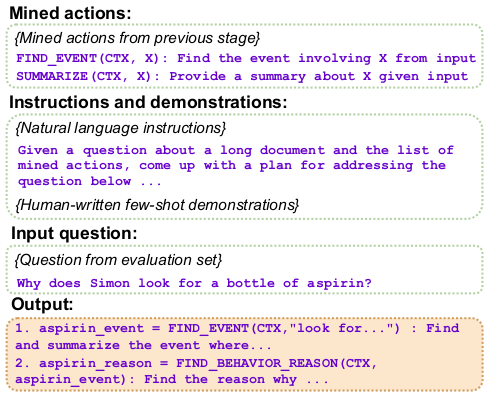

The illustration below shows one such generated plan consisting of mined actions:

We first provide some intuition into why PEARL's approach works.

Since PEARL's outputs come across as unnecessarily complicated at first glance, building some intuition may help you implement it better for your use cases.

Instead of sticking to natural language, PEARL surprises you by switching to text that resembles pseudocode or a high-level programming language:

But why go to all this trouble? Why not stick to natural language, like chain-of-thought prompting and other strategies? Why simulate a virtual machine to run the plan?

Here's a key insight that might help: Think of PEARL as a generator of task-specific text processing pipelines.

If you've worked on traditional search or text processing, you'll be familiar with expressing high-level semantic goals using low-level processing pipelines that:

PEARL essentially automates the generation of such text-processing pipelines. But it goes a step further.

Recognizing that LLMs are inherently better at semantics, PEARL completely ignores low-level approximations like regular expressions. Instead, it directly uses semantic criteria and goals in actions like these examples:

Notice how the actions are expressed in terms of high-level semantic concepts like emotions, conclusions, events, and so on.

For example, to detect emotions, most human developers would use regular expressions or string matching to search for phrases like "I/he/she/they felt angry/sad/happy." Some may use better semantic techniques like sentence-level embeddings but use conditional logic for matching. Unlike them, PEARL leverages the strong semantic capabilities of LLMs to directly search for the abstract concept of emotion.
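To make the contrast concrete, here is a minimal sketch of the hand-written, low-level approach described above. The pattern and the function name `find_emotions_regex` are hypothetical and only cover the literal phrasings listed.

```python
import re

# Hypothetical hand-written pattern: pronoun + "felt" + one of three emotion words.
EMOTION_PATTERN = re.compile(
    r"\b(?:I|he|she|they)\s+felt\s+(angry|sad|happy)\b", re.IGNORECASE
)


def find_emotions_regex(text: str) -> list[str]:
    """Return the emotion words that match the literal pattern, lowercased."""
    return [word.lower() for word in EMOTION_PATTERN.findall(text)]


# Explicit phrasing is caught ...
print(find_emotions_regex("She felt angry, and they felt sad."))
# ... but emotion that is only implied slips through.
print(find_emotions_regex("Her hands shook as she slammed the door."))
```

The second sentence clearly conveys an emotion, yet the pattern returns nothing for it. A semantic action states the goal ("find the feelings") and leaves the matching to the LLM, so it does not depend on any particular wording.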

But why use pseudocode to represent these pipelines? That's to reduce ambiguity.

When conversing with LLMs like ChatGPT, we refer to earlier contexts using vague expressions like:

When a conversation has multiple contexts (which is common with text processing pipelines and long documents), such vague references can confuse or mislead the LLMs. PEARL's formal pseudocode syntax eliminates such ambiguities.

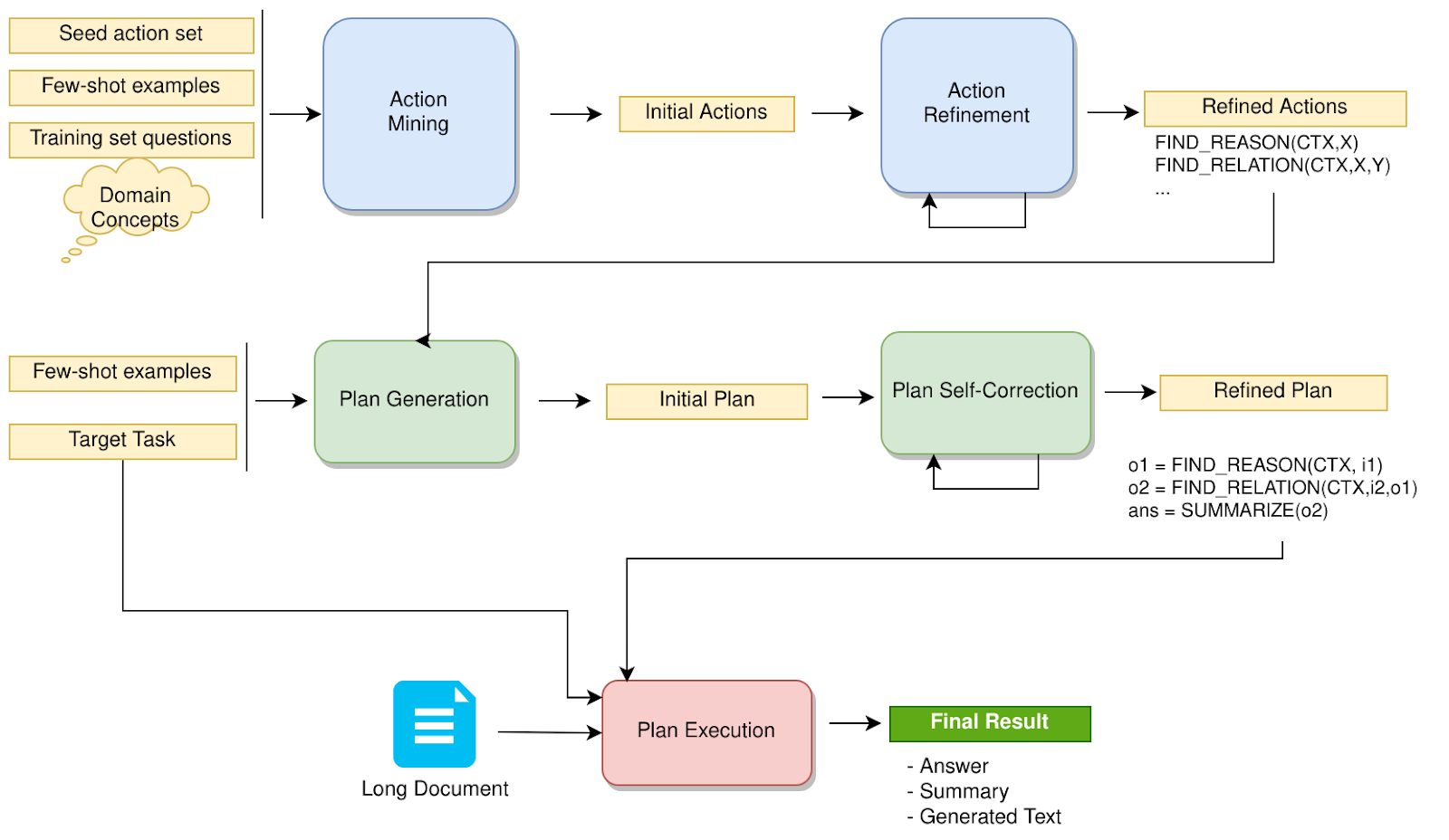

PEARL consists of three primary stages but requires some secondary stages to work well.

In the following sections, we get into the mechanics of each stage, especially the input aspects and prompt engineering. But before that, it helps to understand the dataset used by the PEARL paper.

Though PEARL is a general-purpose prompting approach, the generated actions are specific to the domain, training dataset, and task. In particular, the PEARL research paper and code are customized for a question-answering dataset called the QuALITY dataset. Since the sections below show GPT-4 prompts and results generated from this dataset, let's get to know it first.

QuALITY is a human-annotated comprehension dataset based on long-form content. It contains fiction and nonfiction content from Project Gutenberg, Slate Magazine, and other sources.

Each article is accompanied by a set of related human-written questions with multiple-choice answers and best answers. The questions tend to be complex and can't be answered trivially by skimming or searching keywords.

PEARL makes use of QuALITY's articles and questions. However, instead of selecting one of the given answers, it generates a detailed long-form answer using information and relationships from all parts of the article.

In the action mining stage, the LLM is asked to generate a comprehensive set of actions that are likely to help the task at hand. It's supplied with the following information:

This prompt is repeated for each question in the training set.

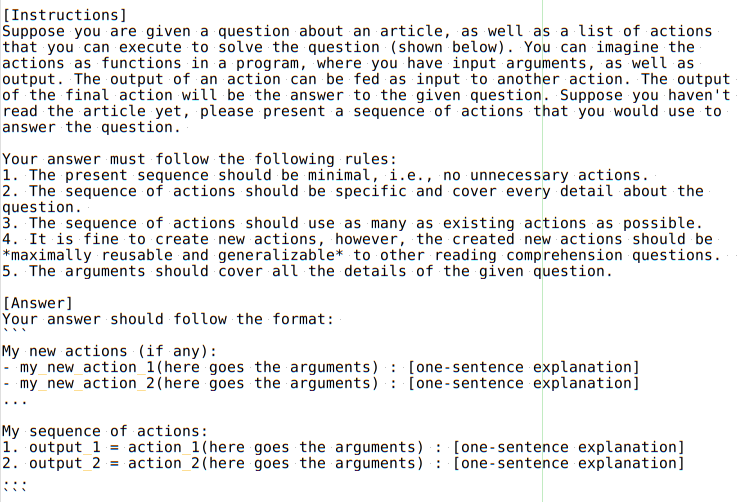

The prompt template for action mining is illustrated below.

Since action mining is the most important stage in PEARL, a detailed explanation for each component of the prompt follows.

The main purpose of the seed set is to demonstrate the pseudocode syntax for actions using function names, function call syntax, function descriptions, and arguments.

They are mostly general-purpose text processing or semantic actions, like concatenating text or finding relevant information. However, some, like FIND_MORAL, can be dataset-specific.

These instructions guide the LLM on using existing actions and, if necessary, generating new actions. Plus, they demonstrate the desired format for the results.

Using sample questions and actions, the few-shot examples clarify what the LLM must do. Crucially, these must be relevant to the domain and task at hand.

Notice how, unlike the seed set, the action arguments and output variables are sometimes actual examples relevant to the dataset rather than generic terms.

The last component of the prompt is the training set question for which we want the LLM to discover plausible new actions while formulating an answering plan.
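The four components can be assembled with a few lines of code. This is a sketch under assumptions, not the paper's actual prompt: the section labels, the action signatures, the instruction text, and the sample questions are all placeholders chosen for illustration.

```python
def build_action_mining_prompt(
    seed_actions: list[str],
    instructions: str,
    few_shot_examples: list[tuple[str, str]],
    question: str,
) -> str:
    """Join seed actions, instructions, few-shot examples, and the target question."""
    examples = "\n\n".join(
        f"Question: {q}\nActions:\n{actions}" for q, actions in few_shot_examples
    )
    sections = [
        "Seed actions:\n" + "\n".join(seed_actions),
        "Instructions:\n" + instructions,
        "Examples:\n" + examples,
        f"Question: {question}\nActions:",  # left open for the LLM to complete
    ]
    return "\n\n".join(sections)


# Placeholder content for illustration only; not the paper's prompt text.
SEED_ACTIONS = [
    "CONCAT(S1, S2) : Concatenate the inputs S1 and S2",
    "FIND_MORAL(CTX) : Find the moral of the story in CTX",
]
prompt = build_action_mining_prompt(
    SEED_ACTIONS,
    "Reuse the actions above where possible; define a new action only if needed.",
    [("How does the narrator feel about the trip?",
      'feelings = FIND_FEELINGS(CTX, "narrator")')],
    "Why does the captain change course?",
)
```

Calling the builder once per training question reproduces the "one prompt per question" loop, with only the final component changing between calls.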

The illustration below shows some results from action mining the QuALITY training dataset.

In this example, the LLM discovered that it needs a new action, FIND_FEELINGS, to answer question 22.

Action mining is run on every question in the training dataset. In QuALITY's case, we're talking about 2,100+ questions. With such a large number of questions, some generated actions may be faulty or duplicated as shown below:

PEARL gathers the results of action mining all the training questions. It then relies on the semantic strengths of the LLM to find duplicated or faulty actions and produce a final set of refined actions. This is the prompt for action refinement:

The final consolidated set of actions for the QuALITY dataset contains about 80 actions. This limit is configurable. The paper experimented with different limits and found that for this particular dataset, 80 is an optimal number to get the best results.
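Before handing the mined actions to the LLM for refinement, it can be useful to see how much exact duplication there is. The sketch below is an assumed preprocessing step, not part of the PEARL code: it supposes each mined action sits on its own line in the form `NAME(ARGS) : description`, and `tally_actions` is a hypothetical helper.

```python
import re
from collections import Counter

# Assumed line format: NAME(ARGS) : description
ACTION_LINE = re.compile(r"^\s*([A-Z][A-Z0-9_]*)\s*\(([^)]*)\)\s*:\s*(.+?)\s*$")


def tally_actions(mined_outputs: list[str]) -> Counter:
    """Count how often each action name appears across all mining outputs."""
    counts: Counter = Counter()
    for output in mined_outputs:
        for line in output.splitlines():
            match = ACTION_LINE.match(line)
            if match:  # lines that are not action definitions are skipped
                counts[match.group(1)] += 1
    return counts
```

Counting only catches actions that share a name. Actions that mean the same thing under different names, or that are faulty, still need the LLM's semantic judgment in the refinement prompt.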

Let's examine some of these 80 actions. Some are general-purpose actions:

Others are dataset-specific:

The next stage in PEARL focuses on making the LLM generate an answering plan (or task plan, in general) for a given question using the mined actions.

The prompt template for plan generation is depicted below:

We supply the full list of mined actions in the prompt.

It's followed by these detailed instructions:

Notice how the instructions now focus on the plan and urge the LLM to try to use only the provided actions. However, it remains open to the possibility of new actions as well.

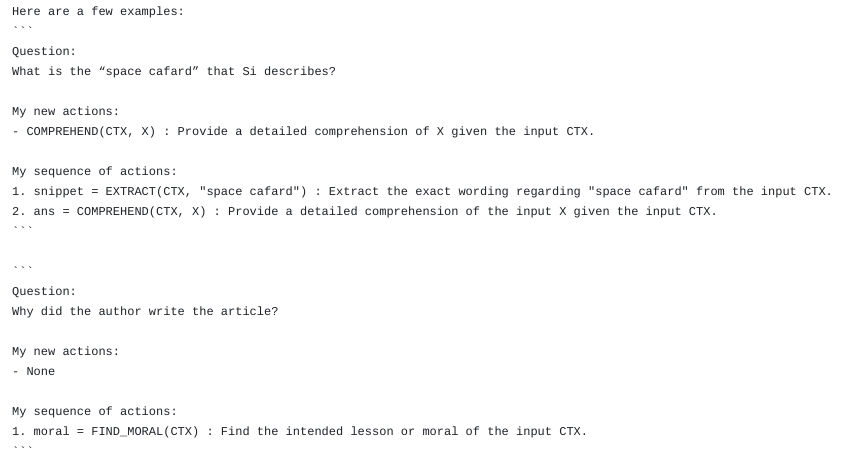

PEARL then includes some few-shot examples with questions and their answering plans:

The default prompt for QuALITY contains 11 few-shot examples. Ideally, they must be written manually and suitable for the domain and task. However, if you prefer, the PEARL code has a "refine" function to autogenerate these few-shot examples from a dataset.

Finally, we append the question for which we want the LLM to generate a plan using the mined actions.

Importantly, we do not provide the full article as input at this stage. The plan is generated using just the mined actions, the target question, and the few-shot examples.

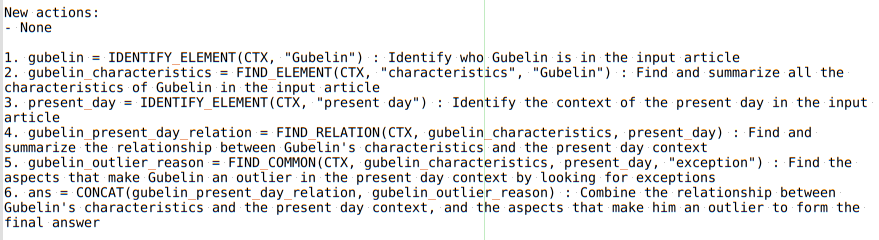

An example question from QuALITY and its generated plan are shown below:

In this example, the LLM has expressed the plan using only the mined actions. Further, it has replaced the action parameters with question-specific arguments.

With just a small number of few-shot examples and complex instructions for the LLM, a generated plan may have problems like:

So, PEARL follows this iterative self-correction strategy:

At the end, you'll have a self-corrected plan that has the correct syntax and grammar. Note that even at this stage, the long document isn't used.
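The loop can be sketched as follows, under two assumptions that are ours rather than the source's: each plan step has the form `var = ACTION(arg, ...)`, and a small parser produces error messages that are fed back to the LLM. The `fix_plan` callable stands in for that LLM call, and all names here are hypothetical.

```python
import re
from typing import Callable

STEP = re.compile(r"^(\w+)\s*=\s*([A-Z][A-Z0-9_]*)\((.*)\)$")
ARG = re.compile(r'"[^"]*"|[^,\s][^,]*')  # quoted literal or bare name


def find_errors(plan: str) -> list[str]:
    """Report malformed lines and variables that are used before assignment."""
    errors = []
    defined = {"CTX"}  # CTX stands for the document and is always available
    for number, line in enumerate(plan.strip().splitlines(), start=1):
        match = STEP.match(line.strip())
        if not match:
            errors.append(f"line {number}: cannot parse '{line.strip()}'")
            continue
        out_var, _action, raw_args = match.groups()
        for arg in (a.strip() for a in ARG.findall(raw_args)):
            if not arg.startswith('"') and arg not in defined:
                errors.append(f"line {number}: undefined variable '{arg}'")
        defined.add(out_var)
    return errors


def self_correct(
    plan: str,
    fix_plan: Callable[[str, list[str]], str],
    max_rounds: int = 3,
) -> str:
    """Ask fix_plan to repair the plan until it parses or the rounds run out."""
    for _ in range(max_rounds):
        errors = find_errors(plan)
        if not errors:
            return plan
        plan = fix_plan(plan, errors)  # in practice, an LLM call with the errors
    errors = find_errors(plan)
    if errors:
        raise ValueError(f"plan still invalid after {max_rounds} rounds: {errors}")
    return plan
```

Because the checks need only the plan text, the loop runs without the long document, which matches the note above.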

The prompt for plan self-correction is very similar to plan generation but with these self-correcting instructions:

In this example, the LLM finds syntax errors over two iterations:

In the end, it generates this correct plan:

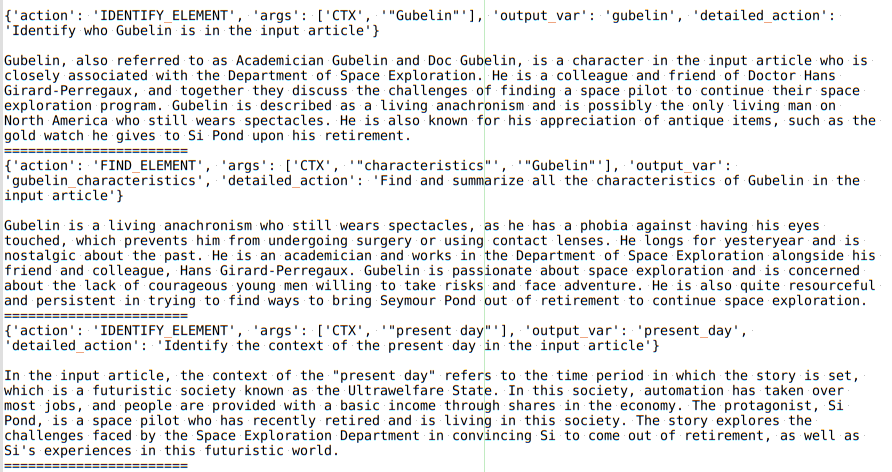

The last stage is to apply the plan's actions to the supplied document to get the result. PEARL examines the actions of the plan and replaces all CTX arguments with the supplied document.

After that, each action is run by the LLM one by one based on the action description, and its results are assigned to generated output variables.
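A minimal sketch of this execution loop is shown below. It assumes plan steps of the form `var = ACTION(arg, ...)` with quoted string literals, and it takes the LLM as a plain callable, `run_action`, that receives the action name, its description, and the resolved arguments. These conventions and names are illustrative assumptions, not PEARL's actual code.

```python
import re
from typing import Callable

STEP = re.compile(r"^(\w+)\s*=\s*([A-Z][A-Z0-9_]*)\((.*)\)$")
ARG = re.compile(r'"[^"]*"|[^,\s][^,]*')  # quoted literal or bare name


def execute_plan(
    plan: str,
    document: str,
    descriptions: dict[str, str],
    run_action: Callable[[str, str, list[str]], str],
) -> str:
    """Run each step in order and return the output of the final step."""
    env = {"CTX": document}  # CTX is replaced by the supplied document
    result = ""
    for line in plan.strip().splitlines():
        match = STEP.match(line.strip())
        if match is None:
            raise ValueError(f"cannot parse step: {line!r}")
        out_var, action, raw_args = match.groups()
        args = []
        for arg in (a.strip() for a in ARG.findall(raw_args)):
            # Quoted arguments are literals; anything else names CTX or an earlier output.
            args.append(arg.strip('"') if arg.startswith('"') else env[arg])
        result = run_action(action, descriptions[action], args)
        env[out_var] = result  # later steps can refer to this output by name
    return result
```

Keeping intermediate results in named variables is what lets later steps refer to earlier outputs precisely instead of through vague references to "the text above".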

The execution trace below shows the first three actions and their results for our example plan:

Here are the next two steps of the example six-step plan:

The final execution step produces the result for the question we asked ("What makes Gubelin an outlier in the present day?"):

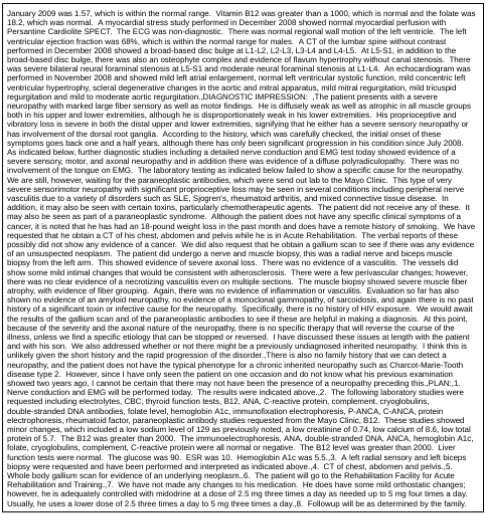

In this section, we explore how PEARL performs in the question-answering domain. This is a common use case for longer context windows. Engineers building these systems spend a lot of time tuning both the chunk parameters (size, splitting, keywords, and so on) and the way those chunks are pulled into the context window. Some systems struggle because the most relevant chunks aren't always the ones that best answer the question. If the database holds multiple medical reports for different patients, or for the same patient across different visits, the similar chunks that get retrieved might all come from the same section, when the query actually requires various parts of the document.

We know the inputs that are either domain-specific or manually supplied:

To prepare these inputs, we first look for suitable medical datasets.

We get our long documents (medical reports) from the Kaggle medical transcriptions dataset that we used earlier for summarization work:

We choose the longest reports, which run from 12,000 to 16,000 tokens.
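Selecting reports by length can be sketched like this. The default word-based count is only an approximation we assume for illustration, since real token counts depend on the model's tokenizer. `select_longest` and its parameters are hypothetical names.

```python
from typing import Callable


def select_longest(
    reports: list[str],
    min_tokens: int,
    max_tokens: int,
    top_k: int,
    count_tokens: Callable[[str], int] = lambda text: len(text.split()),
) -> list[str]:
    """Keep reports whose length falls in the range, longest first, at most top_k."""
    in_range = [r for r in reports if min_tokens <= count_tokens(r) <= max_tokens]
    return sorted(in_range, key=count_tokens, reverse=True)[:top_k]
```

Passing the tokenizer's own counting function as `count_tokens` makes the range check match the model's real limits.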

The input question will be a complex one: How is the medical condition affecting their lifestyle and what changes should the patient make in their lifestyle?

We like this question for evaluation because it's abstract enough to trigger a few key issues that you see with these Q&A systems:

- The specific medical condition is not specified. Keyword-heavy systems or systems with limited domain knowledge will struggle with this.

- The first part of the question is vague and doesn't map directly to anything in the document. This means the LLM requires in-domain knowledge of how to relate different parts of the context to the query.

- The model has two options for answering the second part of the question. Either it can find a summary in the provided context and perform answer extraction or, if no summary can be found, summarize multiple sections into an answer. This requires a deep understanding of the input text relative to the query.

- The query contains multiple questions, which is one of the places where RAG-based systems struggle. The model has to pull in context for both parts of the question.

That leaves us with questions and few-shot plans for action mining and plan generation. We use the MEDIQA-AnS (MEDIQA-Answer Summarization) dataset by Savery et al. because:

A sample entry from MEDIQA-AnS is shown below:

For the medical seed set of actions, we reuse most of PEARL's seed set since its actions are generic, except for FIND_MORAL, which isn't relevant for medical data.

For the few-shot examples, we extract questions from 30 random entries in the MEDIQA-AnS primary dataset file and apply action mining to them. The results look quite promising:

We supply these few-shot examples in the plan generation prompt:

For a test question, "What blood tests confirm celiac disease?", it generates this plan:

It's not a valid plan because FILTER isn't on the list of refined actions. Nonetheless, it's a promising start.
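This kind of validity check is easy to automate. The sketch below assumes one action call per plan line in the form `var = ACTION(...)`. The sample plan and every action name in it other than FILTER are made up for illustration and are not the plan PEARL generated.

```python
import re

ACTION_CALL = re.compile(r"=\s*([A-Z][A-Z0-9_]*)\s*\(")


def unknown_actions(plan: str, refined_actions: set[str]) -> list[str]:
    """List actions the plan calls that are missing from the refined action set."""
    used = ACTION_CALL.findall(plan)
    # dict.fromkeys drops repeats while keeping first-seen order
    return [name for name in dict.fromkeys(used) if name not in refined_actions]


# Hypothetical plan and action list, for illustration only.
sample_plan = """
tests = FIND_TESTS(CTX, "celiac disease")
blood = FILTER(tests, "blood tests")
ans = SUMMARIZE(blood)
"""
print(unknown_actions(sample_plan, {"FIND_TESTS", "SUMMARIZE"}))
```

A non-empty result can either be rejected outright or passed back to the LLM as an error message in another correction round.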

For our actual question on connections between the patient's medical condition and their lifestyle, PEARL generates this final plan after refinement:

For a qualitative evaluation of the approach, we applied this lifestyle recommendation plan to the top five longest medical reports from the transcription dataset. These reports contain multiple sections for different medical specialties. We want the recommendation plan to extract relevant information from all sections.

Here are some of the results. Below is the longest and most complex report:

The lifestyle recommendation plan for it returns this comprehensive answer:

Notice how the plan includes information from all sections of the report.

The next longest report contains complex information and connections:

The lifestyle plan for it is shown below:

Again, the answer is comprehensive with information from all sections of the report.

These qualitative results suggest that PEARL can be adapted to other domains, such as medical reports, without much effort.

In this article, you saw how PEARL can dynamically generate pipelines to solve complex tasks. In addition, it does so using just prompts and few-shot examples, without requiring any fine-tuning. This is a capability that was not previously possible with other prompting strategies or task-planning techniques. Contact us to discover how you can apply PEARL to your difficult business problems.