Automate Health Care Information Processing With EMR Data Extraction - Our Workflow

We dive deep into the challenges we face in EMR data extraction and explain the pipelines, techniques, and models we use to solve them.

Does your business routinely remove, redact, or censor information from images and videos for business or legal reasons? Perhaps you redact people's names or photos from all your scanned documents for privacy reasons? Or censor competitors' products from your marketing videos? Or maybe you run a tourism site that gets thousands of image uploads from your users, and you want to give them a way to remove unwanted people and objects from their photos.

All these workflows can benefit from a computer vision task called image inpainting. In this article, you'll learn what image inpainting is, what it's used for, and how to implement it using a technique called recurrent feature reasoning for image inpainting.

Image inpainting fills in any holes or gaps in an image with artificially generated patches that seem visually and semantically coherent with the rest of the image to an observer. The holes may arise due to deliberate editing to remove unwanted people or objects from images, or as a result of damage to old photographs and paintings over time.

Some use cases of image inpainting:

In the next section, we'll dive into one of the deep learning-based image inpainting methods, called recurrent feature reasoning.

Recurrent feature reasoning (RFR) is a progressive inpainting approach, proposed by Li et al. in 2020, to overcome the problems of prior traditional and deep learning approaches. The word "recurrent" here refers to refining the quality of inpainting over many steps and using the previous step's output for each subsequent step.

The proposed network model, RFR-Net, uses only convolutional and attention layers, not any standard recurrent layers. Also, RFR-Net is not a generative adversarial network like many other inpainting models; it has no discriminator network and doesn't use adversarial training.

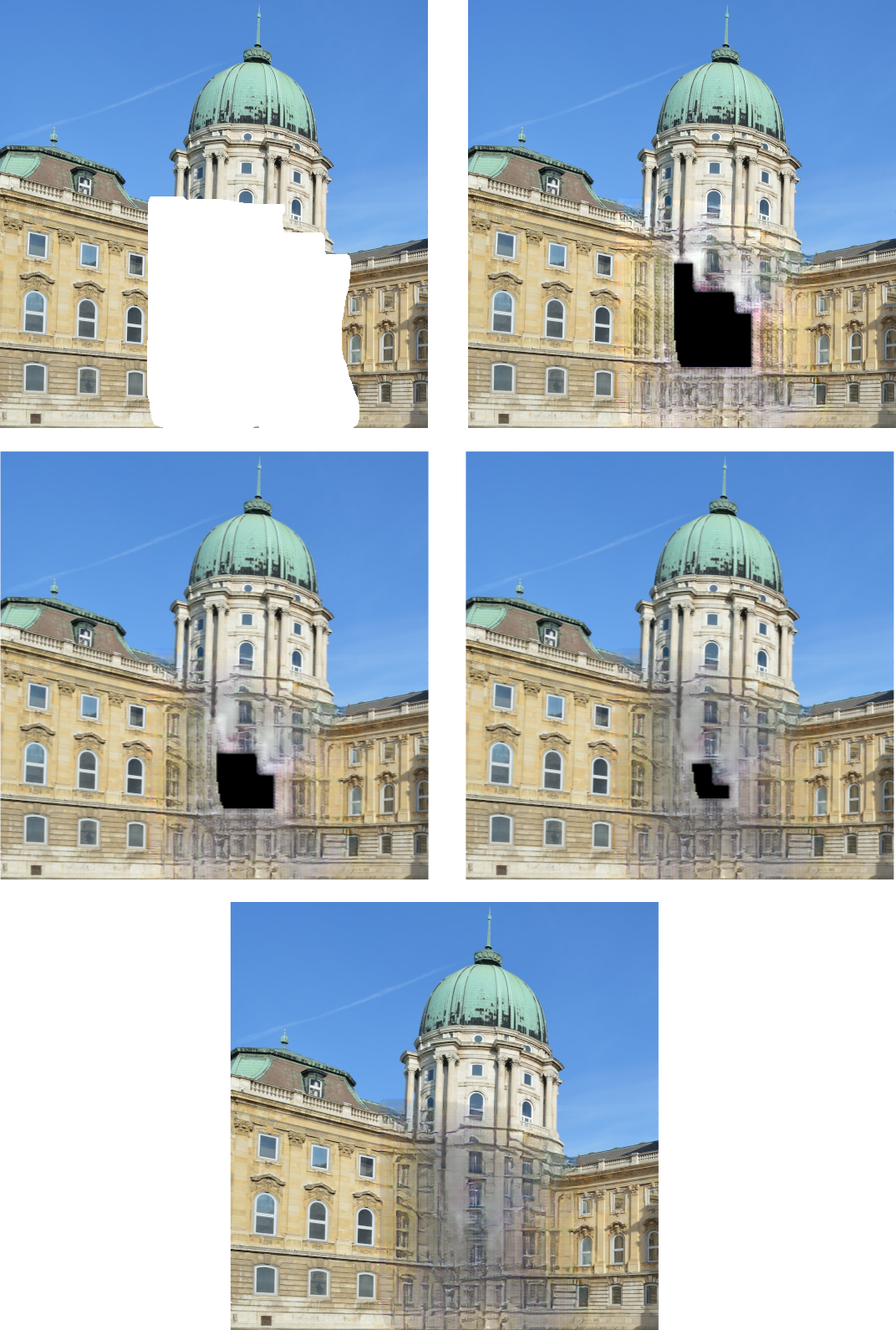

The main intuition behind RFR is that it progressively replaces the regions of a hole with high-quality visual information, starting from the boundary areas of the hole and incrementally moving in toward the center.

The other important aspect to know is that its inpainting is done on the features derived from pixels and not directly on the pixels. These features include intuitive ones like hues, intensities, textures, contours, or shapes. But they also include less intuitive, nonlinear features that are abstract mathematical combinations of simpler features but that help to faithfully generate the visual aspects seen in real images.

The benefits of RFR include:

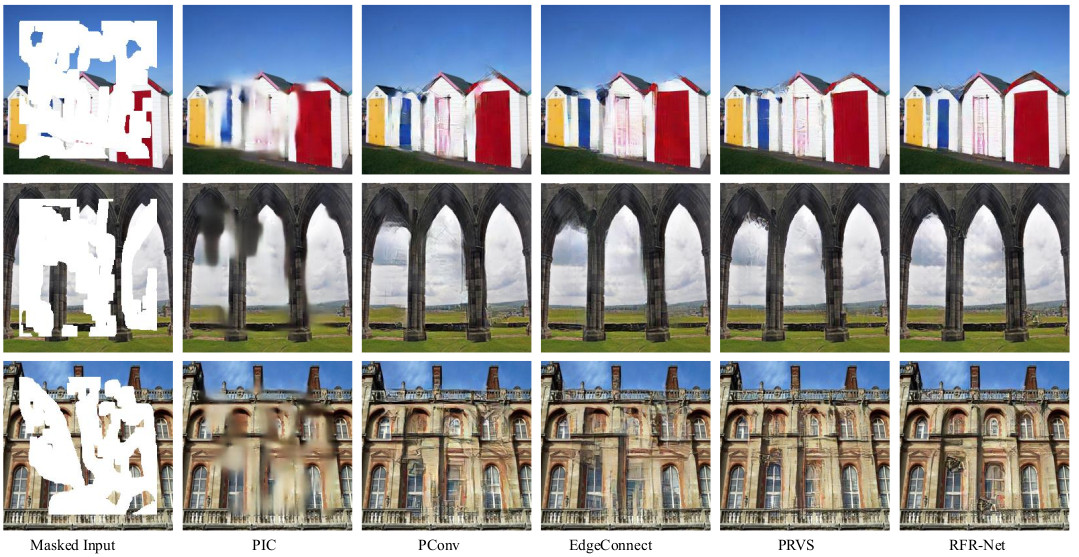

The panel below shows inpainting with recurrent feature reasoning in action, with the hole getting filled incrementally.

In this section, we'll explain the logic of recurrent feature reasoning. Just remember that whenever we use the term "inpainting" here, it's referring to the image inpainting on the feature maps extracted by convolutional layers and not directly on the image pixels themselves. The final image is generated only at the end from the inpainted feature maps.

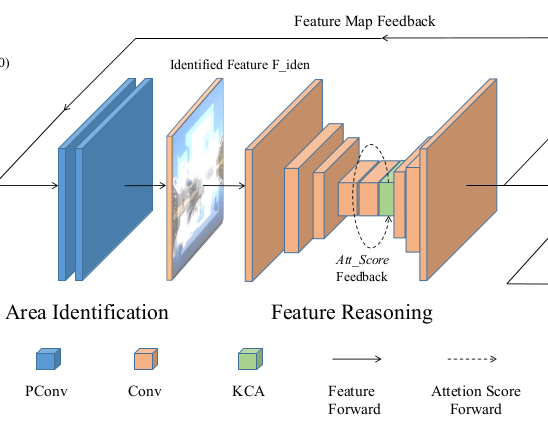

RFR's inpainting refines the feature maps by running these two steps in sequence multiple times (six by default, but you can customize it to your data):

1. Identify the target area for inpainting: Starting from the hole's boundaries in the current iteration, identify the next set of regions near the boundary to inpaint. Any area with at least one unmasked pixel is included, while areas with only masked pixels are excluded. This is similar to the mask-awareness concept.

2. Feature reasoning: Paint high-quality features for the identified areas using a standard set of encoder and decoder convolutional layers, with a special attention layer included for long-range dependencies.

At a high level, the RFR module recurrently infers features along the hole boundaries of the convolutional feature maps and then uses those newly inferred features as “clues” for further inference.
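The two-step loop described above can be illustrated with a toy sketch. This is not RFR-Net itself: the function names are hypothetical, the data is a single 2D feature map, and the "feature reasoning" step is replaced by a plain average of valid neighbors, purely to show how the hole shrinks from the boundary inward over a few iterations.

```python
import numpy as np

def window_sum(arr: np.ndarray) -> np.ndarray:
    """Sum each cell's 3x3 neighborhood (zero padding at the borders)."""
    h, w = arr.shape
    padded = np.pad(arr.astype(float), 1)
    return sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))

def toy_recurrent_fill(features, valid, max_iters=6):
    """Fill a hole ring by ring. `valid` is True where features are known."""
    features = features.astype(float).copy()
    valid = valid.astype(bool).copy()
    steps = 0
    for _ in range(max_iters):
        if valid.all():
            break
        # Step 1: target area = hole cells with at least one valid neighbor.
        counts = window_sum(valid)
        target = (counts > 0) & ~valid
        if not target.any():
            break  # nothing valid anywhere, so there are no clues to use
        # Step 2: stand-in for feature reasoning (ASSUMPTION: the real model
        # uses an encoder-decoder with attention, not a neighbor average).
        sums = window_sum(np.where(valid, features, 0.0))
        features[target] = sums[target] / counts[target]
        valid |= target
        steps += 1
    return features, valid, steps

# A 7x7 map with a 3x3 hole needs two passes: the outer ring, then the center.
feats = np.full((7, 7), 5.0)
known = np.ones((7, 7), dtype=bool)
known[2:5, 2:5] = False
feats[~known] = 0.0
filled, final_valid, n_steps = toy_recurrent_fill(feats, known)
print(n_steps, final_valid.all())
```

The default of six iterations mirrors the default mentioned earlier; larger holes simply need more passes before every cell has a valid neighbor.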

RFR progressively fills in holes, starting from their boundaries and moving inwards. At each step, it must identify the boundary patches with at least a few useful features that it can use for the inpainting.

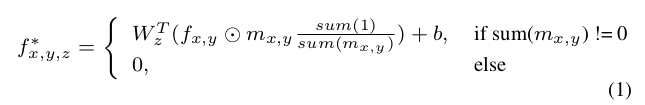

It does this by building new feature maps using partial convolutions, first proposed by Liu et al. Unlike a standard convolution, a partial convolution first multiplies a feature patch by the corresponding region of the mask, then applies the convolution to their product, and finally normalizes the result using the sum of the valid mask values under that patch.

This is followed by a mask update step where that region of the mask is marked as completely valid for subsequent convolutions and iterations.
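A minimal single-channel sketch of a partial convolution with its mask update may make this concrete. It assumes stride 1, no padding, no bias term, and a mask where 1 means valid and 0 means hole; the function name is hypothetical and the loops are written for readability, not speed.

```python
import numpy as np

def partial_conv2d(x, mask, kernel):
    """Single-channel partial convolution (stride 1, no padding, no bias).

    x:      (H, W) feature map
    mask:   (H, W) array, 1 = valid, 0 = hole
    kernel: (k, k) convolution weights
    Returns the output features and the updated mask, both (H-k+1, W-k+1).
    """
    k = kernel.shape[0]
    h, w = x.shape
    out = np.zeros((h - k + 1, w - k + 1))
    new_mask = np.zeros_like(out)
    for i in range(h - k + 1):
        for j in range(w - k + 1):
            m = mask[i:i + k, j:j + k]
            n_valid = m.sum()
            if n_valid > 0:
                # Multiply the patch by the mask, convolve, then rescale by
                # (window size / number of valid values) to normalize.
                patch = x[i:i + k, j:j + k] * m
                out[i, j] = (patch * kernel).sum() * (k * k / n_valid)
                # Mask update: this location now counts as valid.
                new_mask[i, j] = 1.0
    return out, new_mask

# With an averaging kernel, a constant image is reproduced despite the hole.
x = np.full((5, 5), 2.0)
mask = np.ones((5, 5))
mask[2, 2] = 0.0
out, new_mask = partial_conv2d(x, mask, np.full((3, 3), 1.0 / 9.0))
print(out)
print(new_mask)
```

The rescaling keeps the output magnitude comparable no matter how many values under the window were masked, which is why the constant image above comes back unchanged.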

RFR-Net uses many such partial convolutional layers to update both the feature maps and the masks. The feature maps are then passed through a normalization layer and a nonlinear activation like a rectified linear unit to prepare them for the next step.

The difference between the updated mask and the input mask is the target area identified for inpainting.
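As a small illustration of that difference, the sketch below updates a mask with a 3x3 window and subtracts the input mask to obtain the ring of newly valid cells. The helper name `update_mask` is hypothetical, and a same-size output with zero padding is assumed for simplicity.

```python
import numpy as np

def update_mask(mask: np.ndarray, k: int = 3) -> np.ndarray:
    """Mark a cell valid (1) if any cell in its k x k window is valid."""
    pad = k // 2
    h, w = mask.shape
    padded = np.pad(mask, pad)  # zero padding keeps the output size equal
    updated = np.zeros_like(mask)
    for dy in range(k):
        for dx in range(k):
            updated = np.maximum(updated, padded[dy:dy + h, dx:dx + w])
    return updated

# 6x6 mask with a 4x4 hole in the middle (1 = valid, 0 = hole).
mask = np.ones((6, 6), dtype=np.uint8)
mask[1:5, 1:5] = 0

updated = update_mask(mask)
target = updated - mask  # 1 only where a hole cell just became valid
print(target)
```

Here the target area is the outer ring of the hole; the inner 2x2 block stays masked until a later iteration.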

This step generates high-quality feature maps for the identified target area using a stack of regular convolutional encoders and decoders with skip connections. The intuition here is that during training, these convolutional weights acquire values such that the generated feature maps are tuned to the visual aspects and other patterns — colors, textures, contours, shapes, sizes, and more — prevalent in the training images.

The recurrent feature reasoning module also includes a special attention layer called the knowledge-consistent attention (KCA) layer. As you probably know, a major weakness of convolutional layers is their inability to model the influence of more distant regions on an area. Distant regions can influence an area only when a layer's receptive field becomes large enough to include them. But even then, the quality is compromised by the pooling layers that discard a lot of useful information about the distant influence.

Attention mechanisms overcome this weakness by modeling the influence of every feature on every other feature at every layer. So even a standard attention layer can improve the quality of features generated within an iteration. However, though it improves the features in each iteration, it doesn't work well overall specifically for RFR-Net.

That's because the attention scores are independently calculated in each iteration. If we select two random locations, they may have a high attention score in one iteration but score very low in the next, due to some side effects of the feature reasoning and mask update steps. Such inter-iteration inconsistency results in odd effects like missing pixels or blurs.

To overcome this, the KCA layer caches the attention maps from the previous iteration and uses a weighted sum of the current attention scores and the previous iteration's scores. This ensures that knowledge about the current features is passed down to the next iteration too, keeping the feature maps consistent with one another across any number of iterations. The weights of the weighted sum are learnable parameters whose values are learned during training.
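The caching idea can be sketched in a few lines. This is a simplified illustration, not the paper's exact formulation: it assumes cosine-similarity attention with a row-wise softmax, and it uses a fixed blending weight `lam` as a stand-in for the learnable parameters. Both function names are hypothetical.

```python
import numpy as np

def attention_scores(features: np.ndarray) -> np.ndarray:
    """features: (N, C), one row per location. Returns (N, N) scores.

    ASSUMPTION: cosine similarity followed by a row-wise softmax.
    """
    unit = features / (np.linalg.norm(features, axis=1, keepdims=True) + 1e-8)
    sim = unit @ unit.T
    exp = np.exp(sim - sim.max(axis=1, keepdims=True))
    return exp / exp.sum(axis=1, keepdims=True)

def blend_scores(current, previous, lam):
    """Weighted sum of this iteration's scores and the cached ones."""
    if previous is None:  # first iteration: nothing cached yet
        return current
    return lam * current + (1.0 - lam) * previous

rng = np.random.default_rng(0)
cached = None
for iteration in range(3):
    feats = rng.normal(size=(4, 8))  # placeholder features for 4 locations
    cached = blend_scores(attention_scores(feats), cached, lam=0.5)

print(cached.sum(axis=1))  # each row still sums to 1 after blending
```

Because the blend is a convex combination of two row-normalized score matrices, the result remains a valid attention distribution while changing more smoothly from one iteration to the next.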

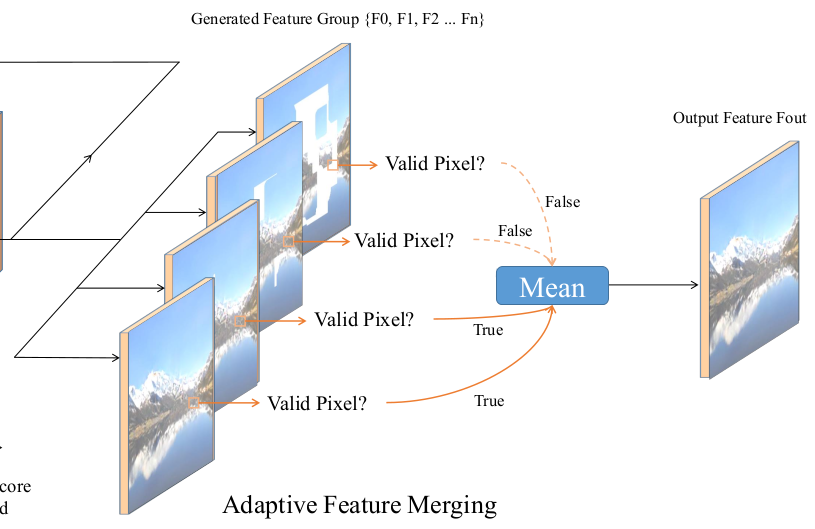

The last stage of RFR-Net is creating the final image from the inpainted feature maps generated during the multiple iterations. Each iteration creates inpainted feature maps that fill more holes than the previous iteration. However, if you use just the final inpainted feature maps and discard all the earlier ones, you lose a lot of useful intermediate features.

Instead, RFR-Net prefers to consider all intermediate feature maps for a location that has some unmasked details. It averages them to calculate the final inpainted feature maps.
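A rough sketch of this merging step follows, assuming each iteration produces a feature map plus a mask marking which locations it has actually filled. The function name and the single-channel layout are illustrative assumptions.

```python
import numpy as np

def merge_features(feature_maps, masks):
    """Average each location over the iterations in which it was filled.

    feature_maps: list of (H, W) arrays, one per iteration
    masks:        list of (H, W) arrays, 1 where that iteration has a value
    Locations never filled by any iteration are left at 0.
    """
    feats = np.stack(feature_maps).astype(float)
    m = np.stack(masks).astype(float)
    counts = m.sum(axis=0)            # how many iterations filled each cell
    total = (feats * m).sum(axis=0)   # sum over filled iterations only
    return np.divide(total, counts, out=np.zeros_like(total), where=counts > 0)

# Iteration 1 filled only the top-left cell; iteration 2 filled the top row.
f1 = np.array([[1.0, 0.0], [0.0, 0.0]])
m1 = np.array([[1, 0], [0, 0]])
f2 = np.array([[3.0, 4.0], [0.0, 0.0]])
m2 = np.array([[1, 1], [0, 0]])

print(merge_features([f1, f2], [m1, m2]))
```

The top-left cell is averaged over both iterations, while the top-right cell takes its value from the single iteration that filled it.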

To these final inpainted feature maps, a convolution is applied to calculate the pixels that make up the final image.

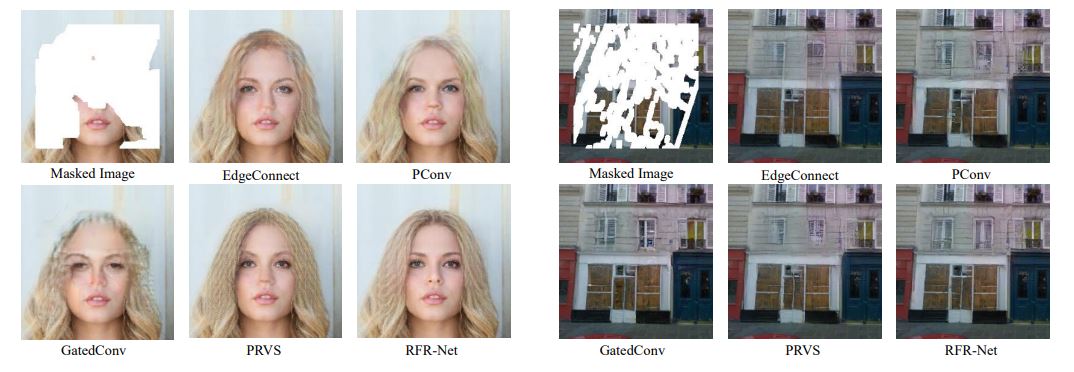

The GitHub project for the RFR-Net paper provides links to two RFR-Net models pre-trained on the CelebA and Paris StreetView datasets. These are suitable for inpainting on faces and buildings respectively.

But what if you want to inpaint other types of images? Should you train a custom RFR-Net model? Because the model is small, it works better when trained on domain-specific images with low to medium diversity than as a general model capable of inpainting any kind of image.

The loss function used for training RFR-Net is a combination of four losses:

You can make the training completely self-supervised since the images you collect serve as both ground truth images and training images. After collecting your training images, add a custom transform to the PyTorch data loader to draw random masks and streaks over the images.

Execute run.py to train a custom model from scratch or to fine-tune an existing one. The masks must be 8-bit grayscale images with black (zero) for unmasked areas and white (255) for masked areas.
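One possible way to generate such masks is sketched below with plain NumPy. The function names, the streak-drawing strategy, and all parameters are illustrative assumptions rather than the project's own code; the output follows the convention above (0 for unmasked, 255 for masked) and could be wrapped in a custom dataset transform.

```python
import numpy as np

def random_streak_mask(height, width, n_streaks=4, max_thickness=6, rng=None):
    """Return an 8-bit mask: 0 = unmasked (keep), 255 = masked (hole)."""
    rng = np.random.default_rng() if rng is None else rng
    mask = np.zeros((height, width), dtype=np.uint8)
    for _ in range(n_streaks):
        y0, y1 = (int(v) for v in rng.integers(0, height, size=2))
        x0, x1 = (int(v) for v in rng.integers(0, width, size=2))
        half = int(rng.integers(1, max_thickness + 1)) // 2
        # Walk along the straight segment and stamp a small square at each step.
        steps = max(abs(y1 - y0), abs(x1 - x0)) + 1
        ys = np.linspace(y0, y1, steps).round().astype(int)
        xs = np.linspace(x0, x1, steps).round().astype(int)
        for y, x in zip(ys, xs):
            mask[max(0, y - half):y + half + 1, max(0, x - half):x + half + 1] = 255
    return mask

def apply_mask(image, mask):
    """Zero out masked pixels of an (H, W, 3) uint8 image."""
    keep = (mask == 0)[..., None]  # broadcast over the color channels
    return image * keep

image = np.full((64, 64, 3), 200, dtype=np.uint8)  # placeholder training image
mask = random_streak_mask(64, 64, rng=np.random.default_rng(0))
masked_image = apply_mask(image, mask)
print(mask.dtype, int((mask == 255).sum()), "masked pixels")
```

Since the untouched image serves as the ground truth and the masked copy as the input, no manual labeling is needed.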

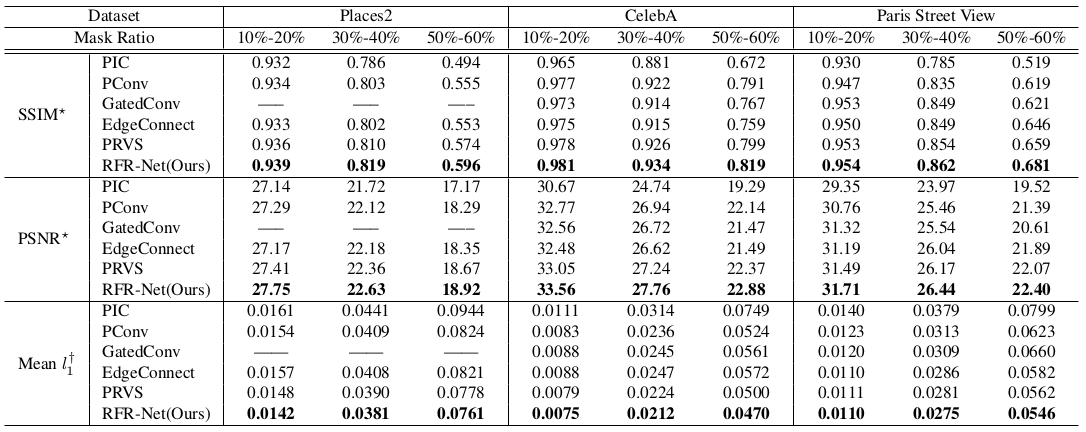

The metrics used for quantitative evaluation were:

RFR-Net scores better than many other convolutional deep models on all the metrics at different masking ratios!

Stable Diffusion (SD) and DALL-E 2 are currently very popular for inpainting. Here are some aspects to consider when evaluating them against recurrent feature reasoning for your use cases.

Both SD and DALL-E 2 inpainting are guided by instructions you give in natural language. Their output tends to be creative, partly because they can misinterpret your instructions and partly because they've been trained on large datasets that may match the instructions in multiple ways. You may find unexpected objects or styles in the generated image, and getting to your desired image may require several rounds of prompting and editing. For some use cases, like blog image generation, that may be acceptable.

But for more straightforward business use cases, like product image generation, you may prefer the realism of RFR. Since it's a small model, it only generates images that are close to what it saw while training.

Both DALL-E 2 and SD are large models that are trained on large and diverse image and language datasets. They're capable of general inpainting on any type of image.

In contrast, RFR is a small model that works best when trained on domain-specific datasets that are quite homogeneous with less diversity in the classes. For example, if you want to generate images of women's garments for your online store, train it only on women's garments without including any other classes or even images of other clothes.

DALL-E 2 is available as a web application for human use and via an application programming interface (API) for automation. However, its model and weights are not available to you for deployment on your infrastructure. Other restrictions include:

In contrast, you can deploy SD and RFR on your own server or even your laptop. SD can run on consumer GPUs with about 10 GB of video memory, and some of its optimized clones require even less. Meanwhile, the RFR paper used a mid-range machine from 2020 with an Intel i7-6800K CPU and an 11 GB RTX 2080 Ti GPU. RFR is small enough to optimize and run even on modern smartphones.

All three are fine for near-real-time generation and generate images for any resolution within milliseconds. However, end-to-end time consumption may be quite different. As stated earlier, both SD and DALL-E 2 can get quite creative and may require multiple rounds of editing and prompting. In contrast, RFR just generates images quite close to what it saw during training and requires less editing time.

DALL-E 2 is a diffusion model, while Stable Diffusion is a latent diffusion model. Diffusion is a type of denoising that progressively removes noise to guide an image from a noisy start to the user's desired image. SD's latent diffusion just means that it does the denoising in a latent space rather than directly on images. Both are text-guided, and text is a fundamental constituent of their inputs.

RFR is a vision-only convolutional network. The two aspects that set it apart from a standard convolutional network are its specialized attention layer and its partial convolutions.

The recurrent feature reasoning approach is a simple and intuitive technique, and at the same time, effective and efficient. The model's size and computational simplicity allow it to be ported to smartphones where automated image editing and inpainting have many more potential uses, including speech-guided editing. Contact us to learn more about the many products we've already built with image inpainting and outpainting for things like marketing image generation, product image generation, and more!