Many businesses have to frequently identify, count, and track things on their premises. Retail is an obvious example, but others like logistics, remote sensing, and autonomous vehicles also have similar needs. Segmentation is a critical computer vision task used for such problems. In this article, learn about semantic segmentation vs. instance segmentation, their uses, and modern approaches.

Segmentation is the computer vision task of identifying and locating an object (or objects) in an image by dividing it into irregularly shaped regions, called segments, that correspond to the shapes of different objects or parts of the scene.

It's similar to object detection, but while detection is satisfied with rectangular bounding boxes around objects, segmentation traces out the contours of objects at a pixel level.
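To make the contrast with detection concrete, here is a minimal NumPy sketch that derives a bounding box from a pixel mask. The helper `mask_to_bbox` and the toy L-shaped mask are illustrative assumptions, not part of any particular library.

```python
from typing import Tuple

import numpy as np


def mask_to_bbox(mask: np.ndarray) -> Tuple[int, int, int, int]:
    """Return (x_min, y_min, x_max, y_max) enclosing the True pixels of a 2-D mask."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask contains no object pixels")
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# A toy L-shaped object: the mask follows its outline, the box cannot.
mask = np.array(
    [
        [0, 0, 0, 0, 0],
        [0, 1, 0, 0, 0],
        [0, 1, 0, 0, 0],
        [0, 1, 1, 1, 0],
        [0, 0, 0, 0, 0],
    ],
    dtype=bool,
)

x0, y0, x1, y1 = mask_to_bbox(mask)
box_area = (x1 - x0 + 1) * (y1 - y0 + 1)  # pixels covered by the rectangle
object_area = int(mask.sum())             # pixels that actually belong to the object
```

The gap between `box_area` and `object_area` is the background that a detector's rectangle includes but a segmentation mask excludes.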

There are many segmentation tasks, depending on the nature of your inputs and the information you want out of them.

Based on their core functionality, the three types of segmentation are semantic, instance, and panoptic.

Semantic segmentation is only interested in the classes of objects, not individual objects. Each segment corresponds to one class covering the objects of that class, even if they're far apart in the scene. It assigns a class label to each pixel and uses the same class label for the pixels of any object of that class. Each segment is output as an image-sized overlay mask covering all the pixels of that class.

Instance or object segmentation not only identifies the classes but also differentiates each object within a class. Each segment corresponds to an individual object instance. It assigns both a class label and a unique object identifier to each pixel. Uncountable background elements, like the sky or the ground, are usually ignored.

Panoptic segmentation just combines semantic and instance segmentation. Like instance segmentation, it differentiates each object within a class. Like semantic segmentation, it labels the background elements, too.
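The difference between the three outputs is easiest to see on a toy label map. The sketch below uses NumPy and a made-up 4x6 scene with two cars against the sky. The class ids and the `(class, instance)` encoding of the panoptic map are assumptions chosen for illustration, since real datasets use their own encodings.

```python
import numpy as np

# Hypothetical class ids: 0 = sky (uncountable background), 1 = car.
SKY, CAR = 0, 1

# Semantic map: one class id per pixel. Both cars share the same label.
semantic = np.array(
    [
        [0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 0],
        [1, 1, 0, 0, 1, 1],
        [1, 1, 0, 0, 1, 1],
    ]
)

# Instance map: 0 = ignored background, 1 and 2 = two separate car objects.
instance = np.array(
    [
        [0, 0, 0, 0, 0, 0],
        [0, 0, 0, 0, 0, 0],
        [1, 1, 0, 0, 2, 2],
        [1, 1, 0, 0, 2, 2],
    ]
)

# Semantic segmentation: one image-sized mask per class.
car_mask = semantic == CAR

# Instance segmentation: one image-sized mask per object.
instance_masks = {int(i): instance == i for i in np.unique(instance) if i != 0}

# Panoptic segmentation: every pixel carries (class id, instance id),
# so the sky is labeled too and the two cars stay distinct.
panoptic = np.stack([semantic, instance], axis=-1)
```

Here `car_mask` covers both cars at once, `instance_masks` separates them, and `panoptic` keeps both pieces of information for every pixel, including the sky.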

Based on the nature of their inputs, other types of segmentation tasks are:

Let's see how segmentation is applied in some verticals.

Segmentation is frequently used in medical and biological research to isolate organisms, cells, tumors, or other areas of interest. For example:

Image segmentation techniques are useful for retail tasks like:

The highly irregular shapes of natural elements make segmentation an indispensable tool in remote sensing and its applied areas like agriculture. Segmentation is used for purposes like:

In the next section, you'll find out the modern approaches being used for segmentation.

Transformers and convolutional neural networks (CNNs) are the two broad approaches to modern segmentation. CNNs, like U-Net and the fully convolutional network (FCN) for semantic segmentation and Mask R-CNN for instance segmentation, remain popular. But a big problem with CNNs is their limited ability to model long-range and global relationships between distant parts of an image. They can't do it until the receptive field is large enough, and even then, pooling discards a lot of useful information. Transformers address this by applying multi-head self-attention in every layer.

Recent CNN designs like ConvNeXt mitigate this weakness with ideas like grouped and depthwise convolutions that behave somewhat like self-attention. Some new segmentation models have started using ConvNeXt as their backbone network.

Unlike CNNs, transformers are not limited to operating on local grids. Their ability to combine irregular regions from nearby and distant parts of the image, using self-attention at every layer, makes them powerful segmenters. In this article, we'll focus on transformer-based segmentation to bring you insights into this new area that's rather under the radar.
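As a rough illustration of why self-attention is not tied to a local grid, the following NumPy sketch implements single-head scaled dot-product self-attention over a set of patch embeddings. The patch count, embedding size, and random weights are arbitrary assumptions. A real vision transformer adds multiple heads, positional information, and trained weights.

```python
import numpy as np


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (num_patches, dim) patch embeddings.
    Returns the attended features and the (num_patches, num_patches) weights.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over patches
    return weights @ v, weights


rng = np.random.default_rng(0)
num_patches, dim = 16, 8  # e.g. a 4x4 grid of patches, flattened
x = rng.normal(size=(num_patches, dim))
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) / np.sqrt(dim) for _ in range(3))

out, weights = self_attention(x, w_q, w_k, w_v)
```

Each row of `weights` spans all 16 patches, so a patch in one corner can draw on a patch in the opposite corner within a single layer, which a small convolution kernel cannot do.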

What architectural traits do we see in transformer approaches to segmentation?

First, the vision transformer (ViT) or its improved variants are the most common backbone networks. The improved variants include the following:

A second trait is the use of different encoder-decoder architectures:

The most impactful recent innovation in computer vision is the use of natural language to guide vision tasks, pioneered by OpenAI's CLIP and DALL-E. Segmentation, too, has benefited in the form of referring segmentation where natural language is used to guide the segmentation.

Some notable language-image models for semantic referring segmentation:

If you don't need natural language prompts, you can use one of the state-of-the-art vision-only unified architectures capable of all three segmentation tasks — semantic, instance, and panoptic:

Next, we'll explore the CLIPSeg language-image architecture in depth.

CLIPSeg is a language-image semantic segmentation model that segments images based on your text prompts or example images. That means it's capable of one-shot and referring segmentation. Plus, its use of CLIP's powerful language model equips it for zero-shot segmentation, too. It's a straightforward and lightweight model, making it a good example of the language-image approach.

For this demo, we asked CLIPSeg to segment an image according to two prompts — "orange bottles" and "boxes."

For the prompt "orange bottles," you can see how CLIPSeg segmented mostly just the orange bottles:

For the prompt "boxes", it segments just the boxes in the bottom shelf:

CLIPSeg, too, uses an encoder-decoder architecture. Its encoder is a pre-trained CLIP ViT-B/16 model. Its decoder is a simple stack of three standard transformer blocks that outputs an image-sized binary segment mask isolating the matching class.

The inputs to the model are the target image and a segmentation class, described either with a text prompt or with an example image and mask showing something analogous to what you want segmented in the target image. A text prompt is converted into an embedding vector by CLIP's text encoder. An example image is converted into an embedding by CLIP's visual encoder. Both embeddings come from CLIP's joint text-image embedding space.

To influence the segmentation, CLIPSeg uses two innovations. First, just like U-Net, its three decoder blocks have skip connections to three of the encoder's transformer blocks. They let the decoder use the encoder's attention activations to detect both nearby and distant regions of the same class.

The second innovation pertains to how it uses the input text or image prompt to activate specific regions. CLIPSeg uses the feature-wise linear modulation (FiLM) technique where the prompt's embeddings influence the decoder's input activations through a simple affine transform with two learnable parameters per feature. That's how the input text prompt or image prompt exerts influence on the decoder's segmentation outputs.
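The FiLM idea can be sketched in a few lines of NumPy: the prompt embedding is projected to a per-feature scale and a per-feature shift, which are then applied to the activations. The function name `film`, the shapes, and the hand-picked projection matrices are assumptions for illustration. In a trained model the projections are learned, and this is not CLIPSeg's actual code.

```python
import numpy as np


def film(activations, prompt_embedding, w_gamma, w_beta):
    """Feature-wise linear modulation (FiLM).

    activations:      (num_tokens, dim) features to be modulated.
    prompt_embedding: (embed_dim,) conditioning vector from the text or image prompt.
    w_gamma, w_beta:  (embed_dim, dim) projections, learned in a real model.
    """
    gamma = prompt_embedding @ w_gamma  # per-feature scale
    beta = prompt_embedding @ w_beta    # per-feature shift
    return gamma * activations + beta   # affine transform, broadcast over tokens


activations = np.ones((4, 3))
w_gamma = np.array([[2.0, 2.0, 2.0], [0.0, 0.0, 0.0]])
w_beta = np.array([[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]])

# Two different prompts modulate the same activations differently.
out_a = film(activations, np.array([1.0, 0.0]), w_gamma, w_beta)
out_b = film(activations, np.array([0.0, 1.0]), w_gamma, w_beta)
```

The same activations produce different outputs for the two prompt vectors, which is the mechanism by which a prompt steers what the decoder highlights.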

Through such simple ideas and architecture, CLIPSeg achieves zero-shot, one-shot, and referring segmentation. If its output masks are not precise enough for your requirements, you can add PointRend for more precise segmentation masks.
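A demo like the one above can be approximated with the CLIPSeg classes in Hugging Face Transformers. Treat the following as a hedged sketch: the checkpoint name `CIDAS/clipseg-rd64-refined`, the 0.5 threshold, and the helper names `logits_to_masks` and `segment_with_prompts` are assumptions, and the heavy imports are kept inside the function so the thresholding helper can be used on its own.

```python
import numpy as np


def logits_to_masks(logits: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Convert raw logits into boolean masks via a sigmoid and a threshold."""
    probs = 1.0 / (1.0 + np.exp(-logits))
    return probs > threshold


def segment_with_prompts(image, prompts, checkpoint="CIDAS/clipseg-rd64-refined"):
    """Run CLIPSeg on one PIL image with several text prompts.

    Returns boolean masks at the model's output resolution, one per prompt.
    Requires the `torch` and `transformers` packages and downloads the checkpoint.
    """
    import torch
    from transformers import CLIPSegForImageSegmentation, CLIPSegProcessor

    processor = CLIPSegProcessor.from_pretrained(checkpoint)
    model = CLIPSegForImageSegmentation.from_pretrained(checkpoint)

    # The image is repeated so that each prompt is paired with its own copy.
    inputs = processor(
        text=prompts,
        images=[image] * len(prompts),
        padding=True,
        return_tensors="pt",
    )
    with torch.no_grad():
        logits = model(**inputs).logits  # one low-resolution logit map per prompt
    return logits_to_masks(logits.numpy())
```

Calling `segment_with_prompts(image, ["orange bottles", "boxes"])` on a PIL image should return one mask per prompt. The masks come out at the model's working resolution, so resize them to the original image size before overlaying them.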

Video segmentation is a very useful solution for video search and editing tasks in any industry. But it's a conceptually and computationally difficult problem because of the additional time axis and the volume of data. The possibility of users referring to actions and not just appearances adds to the complexity of text-guided video segmentation. However, the incredible power of transformers is set to revolutionize this area. The multimodal tracking transformer (MTTR) is one of the new models that can do text-guided instance segmentation on videos.

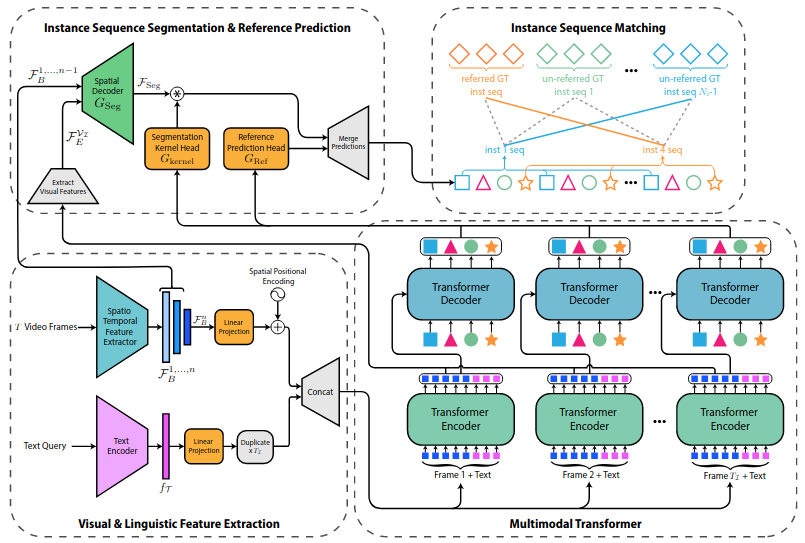

At a high level, it has a multimodal transformer network with an encoder and a decoder, a spatiotemporal feature extractor, a text feature extractor, and a segment voting module at the end that decides which output sequence best matches the text prompt.

MTTR extracts visual features from frames using a spatiotemporal encoder and text features from the text prompt using RoBERTa. From these features, the multimodal encoder produces a spatiotemporal representation of the video with information on which regions within and across frames are related to one another.

The multimodal decoder then uses that representation to generate several candidate sequences of segment predictions. Finally, the segment voting module identifies the segmentation sequence that best matches the text prompt and outputs its segmentation masks.
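The final selection step can be pictured as follows. This sketch assumes each candidate sequence comes with a per-frame score for how well it matches the text, and that the winner is the candidate with the highest average score. The function name, the array shapes, and the averaging rule are illustrative assumptions rather than MTTR's actual implementation.

```python
import numpy as np


def pick_referred_sequence(text_scores, candidate_masks):
    """Select the candidate mask sequence that best matches the text prompt.

    text_scores:     (num_candidates, num_frames) match score per candidate per frame.
    candidate_masks: (num_candidates, num_frames, H, W) predicted masks.
    """
    mean_scores = text_scores.mean(axis=1)  # aggregate evidence across frames
    best = int(np.argmax(mean_scores))
    return best, candidate_masks[best]


# Three candidate sequences over three frames of a tiny 2x2 video.
text_scores = np.array(
    [
        [0.2, 0.3, 0.1],
        [0.8, 0.7, 0.9],
        [0.5, 0.9, 0.4],
    ]
)
candidate_masks = np.zeros((3, 3, 2, 2), dtype=bool)
candidate_masks[1] = True  # mark candidate 1's masks so they are easy to recognize

best, chosen = pick_referred_sequence(text_scores, candidate_masks)
```

Averaging over frames means a candidate that matches the prompt in only one frame, like the third one here, loses to a candidate that matches consistently.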

In the following sections, you'll learn about the major deep learning frameworks that are relevant to segmentation.

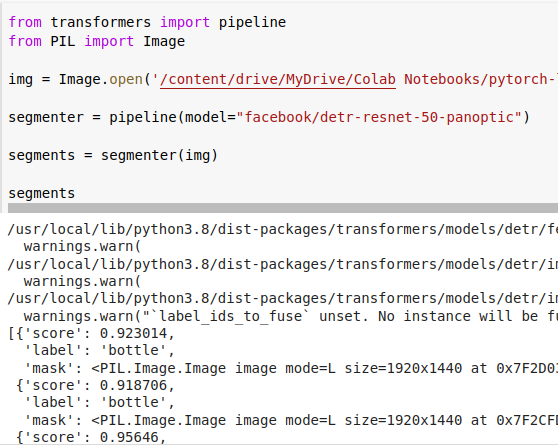

Hugging Face Transformers has extensive support for transformer-based segmentation, with features like:

Torchvision gives you a set of pre-trained CNN models like FCN for semantic segmentation and Mask R-CNN for instance segmentation. You can download more models from PyTorch Hub or Hugging Face. Useful utilities for manipulating and visualizing segmentation masks are also available.

The TensorFlow ecosystem has many features for segmentation:

Detectron2 is Facebook's PyTorch-based framework and ecosystem that provides implementations for segmentation models like TensorMask, Panoptic-DeepLab, PointRend for precise segmentation, MaskFormer for unified segmentation, and BCNet for occlusion-aware instance segmentation.

For transformer-based segmentation, you don't need to look beyond Hugging Face. Its capabilities and simplicity are far ahead of all the other choices. For CNN-based segmentation, TensorFlow's ecosystem has the most reliable and extensive set of models. If you want real-time segmentation on mobile phones, go with TensorFlow Lite or TensorFlow.js.

In our experience, Detectron2-based custom implementations were difficult to maintain because code changes to its interfaces or dependency upgrades between versions often broke code that previously worked.

Although zero-shot and unsupervised segmentation are gaining favor, real-world requirements like domain-specific prompts or low false positives may require you to train custom models from scratch or fine-tune existing models. In this section, you'll learn some essential information on training custom, supervised segmentation models.

You normally train a system on an existing public dataset and then fine-tune it on your business-specific or task-specific proprietary dataset. Some essential public datasets you should know:

For creating your custom datasets, you'll need some good labeling or annotation tools. Since segmentation masks are irregular, drawing them accurately can be time-consuming. That's why an ideal tool should have the following essential features:

Some good tools with these features are:

All the frameworks support fine-tuning pre-trained models. See Hugging Face's fine-tuning tutorial to learn how to train transformer-based PyTorch and TensorFlow models on your custom datasets.

The common metrics for evaluating segmentation are the pixel-wise intersection-over-union (IoU), mean IoU, and mean average precision (mAP) calculated from the IoU. In addition, more specific metrics like IoU over foreground objects and IoU over background elements are also used.
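For reference, pixel-wise IoU and mean IoU can be computed as in the sketch below. The function names are our own, and skipping classes that are absent from both prediction and ground truth is one common convention that we assume here. Evaluation toolkits differ on such details.

```python
import numpy as np


def iou(pred_mask, true_mask) -> float:
    """Pixel-wise intersection-over-union of two boolean masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return float("nan")  # class absent from both masks
    return float(np.logical_and(pred, true).sum() / union)


def mean_iou(pred_labels, true_labels, num_classes: int) -> float:
    """Average the per-class IoU over classes, ignoring absent classes."""
    pred = np.asarray(pred_labels)
    true = np.asarray(true_labels)
    scores = [iou(pred == c, true == c) for c in range(num_classes)]
    return float(np.nanmean(scores))


true_labels = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])
pred_labels = np.array([[0, 1, 1, 1], [0, 0, 1, 1]])  # one background pixel mislabeled

iou_background = iou(pred_labels == 0, true_labels == 0)  # 3 / 4
iou_object = iou(pred_labels == 1, true_labels == 1)      # 4 / 5
miou = mean_iou(pred_labels, true_labels, num_classes=2)
```

A single mislabeled pixel lowers both per-class scores because it shrinks the intersection for one class and enlarges the union for the other.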

The introduction of transformers to vision tasks has rapidly expanded what's possible in just a few years. Breakthroughs like OpenAI's CLIP and GPT are also helping to improve vision tasks like segmentation. With years of experience in computer vision and natural language processing, we can assist you in introducing these capabilities to your business. Contact us to get started with these state-of-the-art models.