How to Build a Custom Salesforce Chatbot with our Powerful Framework

Using custom Salesforce chatbots, delight your customers with comprehensive and detailed answers to all their complex questions and issues.

JVector is a high-performance Java embedded vector search engine, designed to offer a unique blend of speed, efficiency, and flexibility. It utilizes advanced graph algorithms inspired by DiskANN and related research, ensuring high recall and low latency for quick and accurate search results on large scale data with multiple data types and data sources. In terms of efficiency, JVector compresses vectors using product quantization, allowing them to remain in memory during searches. This feature, combined with its disk-aware design, ensures minimal iops at query time. JVector's flexibility is evident in its concurrent and incremental nature, allowing index builds to scale linearly to at least 32 threads and enabling queries during index build.

JVector's API is designed for easy embedding that making it an elite tool for integration into large scale Java systems. It is currently used by platforms like DataStax Astra DB and is set to be integrated with Apache Cassandra. This guide will delve into the features and performance of JVector, providing a comprehensive understanding of this powerful vector search engine. Let’s take a look at what JVector is and how it can be used as a vector search engine.

To truly understand JVector’s search capabilities,we can compare it with other search engines in the market on large scale databases. One such comparison is with Lucene, a popular open-source search library.

In a comparative study using the Deep100M dataset, which comprises about 35GB of vectors and a 25GB index, JVector outperformed Lucene by quite a large margin. JVector's superior performance can be attributed to its state-of-the-art graph algorithms which allow it to reach the high recall and low latency metrics seen above. Additionally, its use of the Panama SIMD API accelerates index build and queries, contributing to its impressive performance.

Another aspect of JVector's performance is its scalability, particularly in terms of updates. The ability to scale is crucial for any search engine as it directly impacts its efficiency and usability. JVector excels in this area as well, with its updates scaling linearly to at least 32 threads. This means that doubling the threads results in halving the build time, a feature that significantly enhances its performance. This scalability is a result of JVector's concurrent functionality, which allows for simultaneous operations, thereby increasing efficiency.

To harness its full potential, it's crucial to understand how to properly integrate JVector into production search workflows. The first step is to add JVector as a dependency in your project. This can be done by adding the following lines to your project's pom.xml file, replacing `${latest-version}` with the latest version of JVector available on Maven Central.

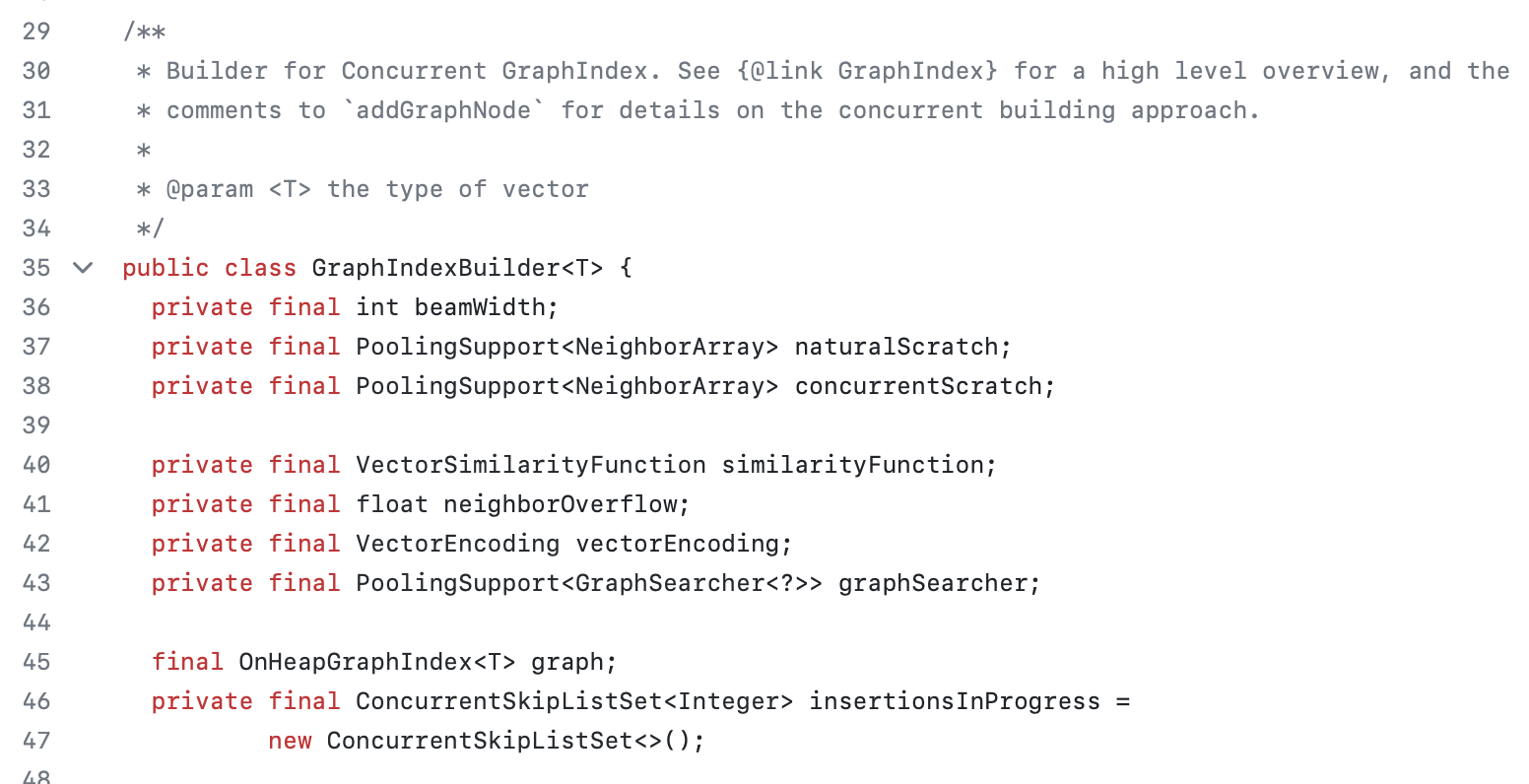

Once JVector is successfully integrated into your build, the next step is to build the index. The `GraphIndexBuilder` class serves as the entry point for this process. You will need to implement the `RandomAccessVectorValues` interface to provide vectors to the builder. If all your vectors are in the provider upfront, you can simply call the `build()` method, which will parallelize the build across all available cores. Alternatively, you can call `addGraphNode` as you add vectors. This method is non-blocking and can be called concurrently from multiple threads. Once you're done adding vectors, call `GraphIndexBuilder.complete` to optimize the index and make it ready to write to disk.

Searching the index is another critical aspect of using JVector. The `GraphSearcher` class is the entry point for this process. Results are returned as a `SearchResult` object that contains node IDs and scores, in descending order of similarity to the query vector. `GraphSearcher` objects are reusable, so unless you have a very simple use case, you should use `GraphSearcher.Builder` to create them. JVector represents vectors in the index as the ordinal (int) corresponding to their index in the `RandomAccessVectorValues` you provided. You can get the original vector back with `GraphIndex.getVector`, if necessary, but since this is a disk-backed index, you should design your application to avoid doing so if possible.

JVector implements a DiskANN-style search which means that searches can be performed using the compressed representation that is kept in memory. This approach is not only efficient but also significantly enhances the speed of searches.

DiskANN, or Disk Aware ANN, is a disk-based Approximate Nearest Neighbor (ANN) search library that provides high recall and low latency. It is designed to handle large-scale datasets that cannot fit into memory. By utilizing DiskANN-style search, JVector is able to handle large volumes of data efficiently, making it an ideal choice for projects dealing with substantial data. Product Quantization, on the other hand, is a method used for compressing vectors. It involves dividing the vector space into a Cartesian product of low dimensional subspaces and then quantizing each subspace separately. This method allows for efficient storage and computation, which is crucial for handling large datasets.

Enabling the DiskANN-style search in JVector involves a few steps. First, a `ProductQuantization` object is created with your vectors using `ProductQuantization.compute`. This process may take some time as it computes the codebooks. Next, the `ProductQuantization::encode` or `encodeAll` is used to encode your vectors. A `CompressedVectors` object is then created from the encoded vectors. Finally, a `NeighborSimilarity.ApproximateScoreFunction` for your query is created that uses the `ProductQuantization` object and `CompressedVectors` to compute scores. This is then passed to the `GraphSearcher.search` method.

One of the key aspects of working with embedded vector lookup databases is the management of indexes. This involves saving and loading states to and from the disk, which are crucial operations for maintaining the efficiency and performance of the system.

Saving the state of an index to disk is a straightforward process in JVector. The `OnDiskGraphIndex` and `CompressedVectors` classes both have `write()` methods that allow you to save the current state of the index to disk. This is particularly useful when you want to preserve the current state of your index for future use. It's worth noting that writing to disk only requires a DataOutput, making the process relatively simple and straightforward. Loading the state from disk is equally simple. The `OnDiskGraphIndex` and `CompressedVectors` classes initialize from disk using their constructor and `load()` methods, respectively. However, reading requires an implementation of `RandomAccessReader` and the related `ReaderSupplier` to wrap your preferred i/o class for best performance. An example of this can be seen in the `SimpleMappedReader` and `SimpleMappedReaderSupplier`.

While managing indexes in JVector, it's important to consider performance. Building a graph does not technically require your `RandomAccessVectorValues` object to live in memory, but it will perform much better if it does. On the other hand, `OnDiskGraphIndex` is designed to live on disk and use minimal memory otherwise. You can optionally wrap `OnDiskGraphIndex` in a `CachingGraphIndex` to keep the most commonly accessed nodes (the ones nearest to the graph entry point) in memory. This can significantly improve the performance of your system.

Another key feature of JVector is its utilization of the Panama Vector API. This API is a part of Project Panama, an initiative by the OpenJDK community to improve and enrich the connections between Java and native code. The Panama Vector API, also known as SIMD (Single Instruction, Multiple Data), provides a way to perform operations on multiple data points simultaneously. This is particularly useful in the context of JVector, as it accelerates the indexing and search processes, leading to faster and more efficient results. The API is heavily used in JVector for Approximate Nearest Neighbors indexing and search. However, it's important to note that during indexing and product quantization, memory bandwidth can become saturated, causing the process to slow down.

To mitigate this, JVector provides an option to limit the number of operations to the physical core count. This is done using the `PhysicalCoreExecutor` class. By default, the value is set to half the processor count seen by Java. However, this may not be suitable for all setups, especially those without hyperthreading or hybrid architectures. In such cases, JVector allows you to override the default physical core count. This can be done by using the `-Djvector.physical_core_count` property.

JVector's practical application as an embedded search system can be best understood through its sample code. Two classes, in particular, SiftSmall and Bench, offer a comprehensive view of how to use JVector effectively.

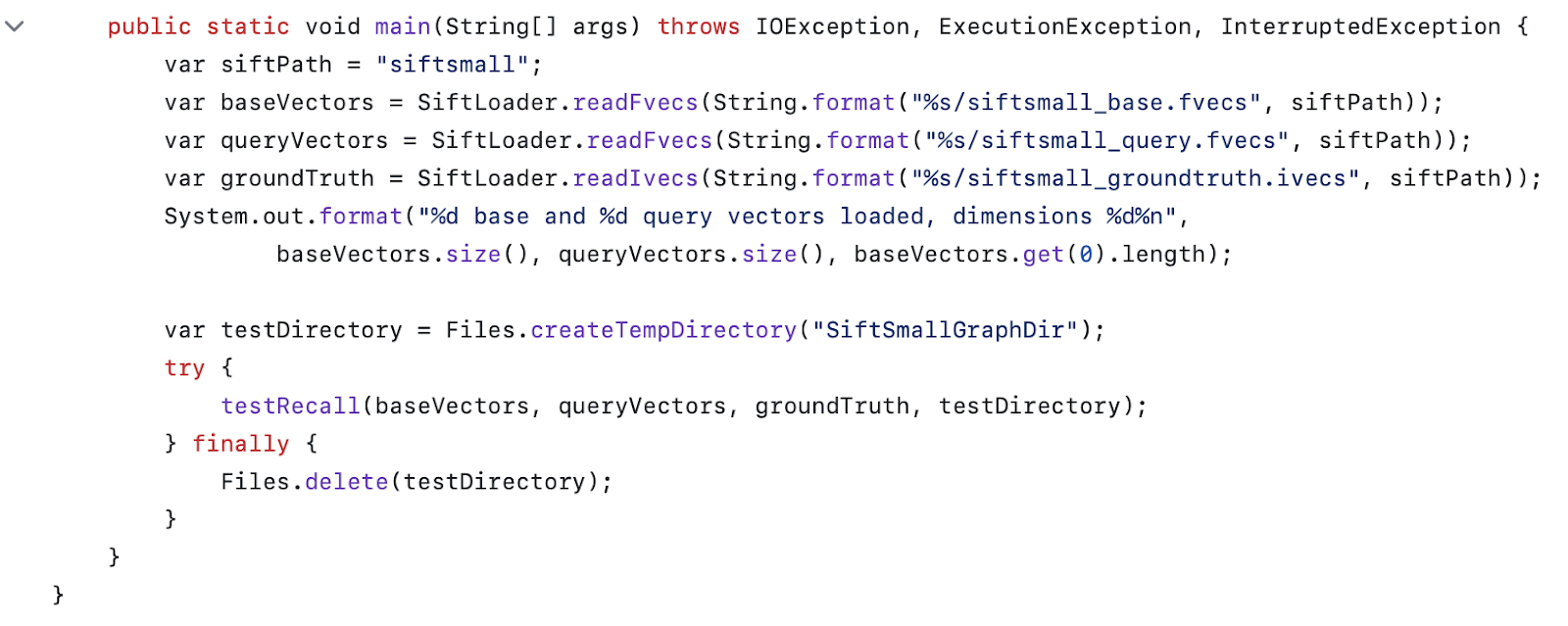

The SiftSmall class is a demonstration of how to index and search a small SIFT dataset of 10,000 vectors. This class provides a practical example of how to implement JVector in a real-world scenario. It showcases how to build an index, add vectors, and search the index using the GraphSearcher class. The SiftSmall class is an excellent starting point for anyone looking to understand the basics of JVector.

On the other hand, the Bench class performs a grid search across the GraphIndexBuilder parameter space. This class is designed to find the best trade-offs between recall and throughput. It provides a more advanced example of how to use JVector, demonstrating how to optimize performance by adjusting parameters. The Bench class also includes a script to graph the pareto-optimal points found during the grid search, providing a visual representation of the trade-offs.

Testing JVector with various datasets is another crucial aspect of understanding its functionality. The library provides several KNN datasets for testing based on ada-002 embeddings generated on Wikipedia data. These datasets are available in ivec/fvec format and can be downloaded using the AWS S3 CLI. Testing JVector with these datasets can provide valuable insights into its performance and efficiency.

The project is organized as a multimodule Maven build, which is intended to produce a multirelease jar suitable for use as a dependency from any Java 11 code. When run on a Java 20+ JVM with the Vector module enabled, optimized vector providers will be used. The base code is in the jvector-base and is built for Java 11 releases, restricting language features and APIs appropriately. The code in jvector-twenty is compiled for Java 20 language features/APIs and included in the final multirelease jar targeting supported JVMs. The jvector-multirelease packages jvector-base and jvector-twenty as a multirelease jar for release. For developers looking to test the functionality of JVector, the SiftSmall and Bench classes can be run directly. The Bench class requires some datasets to be downloaded from ann-benchmarks. The files used by SiftSmall can be found in the siftsmall directory in the project root. To run either class, you can use the Maven exec-plugin.

When it comes to releasing the project, you need to configure ~/.m2/settings.xml to point to OSSRH and run `mvn -Prelease clean deploy`. This step is crucial for making the project available for other developers to use as a dependency in their own projects.

Bench.java is a crucial component of the JVector library, serving as a testing ground for the library's functionality. It's designed to test GraphIndexes against vectors from various datasets, providing a practical demonstration of the library's capabilities. The code is structured to build and test graph indexes, perform queries, and execute grid searches. The recall testing in Bench.java is a critical aspect of its functionality. It's designed to test the recall of the GraphIndex against the base vectors of the dataset. The recall test is performed by building a GraphIndex, writing it to disk, and then querying it. The results are then compared to the ground truth to calculate the recall. This process is repeated for different configurations of M (the number of neighbours to consider during construction) and efConstruction (the size of the dynamic list during construction), providing a comprehensive analysis of the library's performance. Query execution in Bench.java is another key feature. It's designed to perform queries on the GraphIndex, either using the exact vectors or the compressed vectors. The query execution process involves searching the GraphIndex for the closest neighbours to the query vector, and then comparing the results to the ground truth. This process is performed multiple times to calculate the average recall and the average number of nodes visited during the search.

The main function of Bench.java serves as the entry point for the testing process with “main” functions. It sets up the parameters for the grid search, loads the datasets, and then performs the grid search. The grid search function is a comprehensive testing process that tests the recall of the GraphIndex for different configurations of M, efConstruction, and efSearch (the size of the dynamic list during search). It also tests the performance of the GraphIndex when using disk storage versus memory storage."

Width.ai builds custom NLP products that often leverage real time data lookup for use in LLMs and Q&A systems. JVector is a great option for systems that need web sized search lookups that contain multiple data types from various sources. Let’s chat today about how we can implement this system in your existing stack.