How to Build a Custom Salesforce Chatbot with our Powerful Framework

Using custom Salesforce chatbots, delight your customers with comprehensive and detailed answers to all their complex questions and issues.

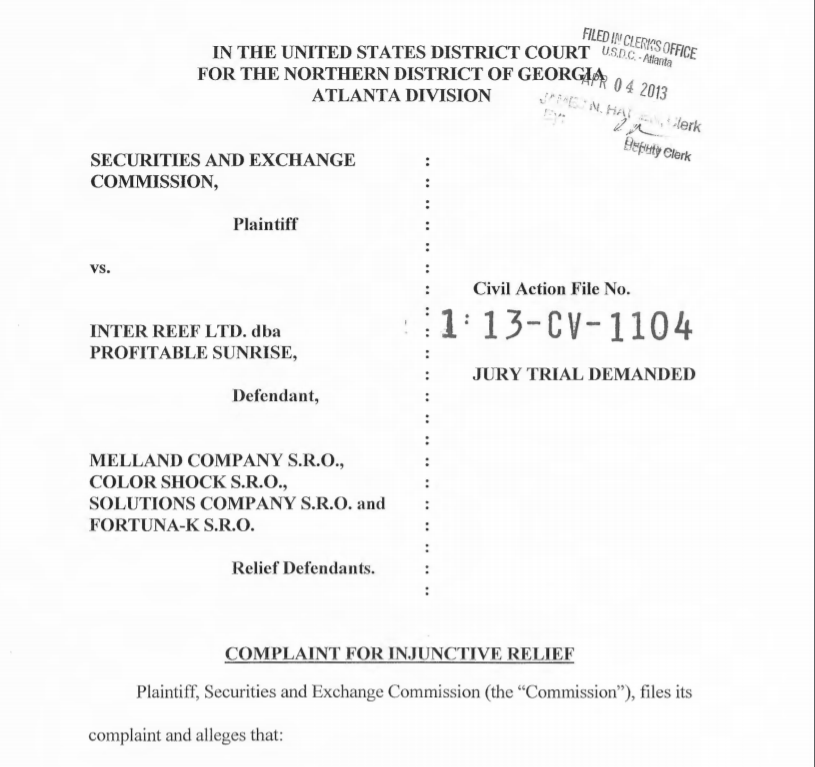

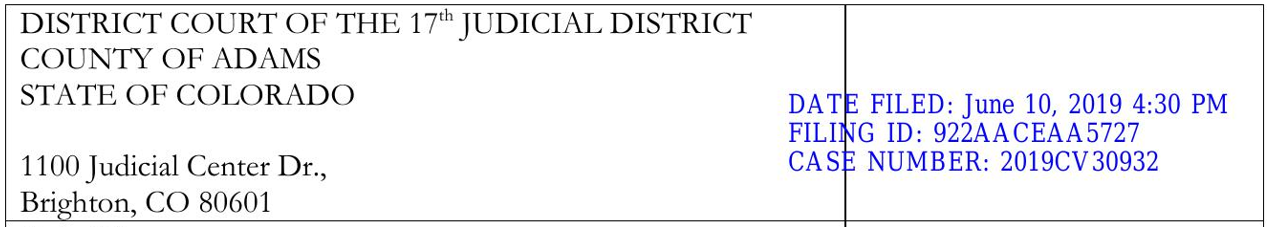

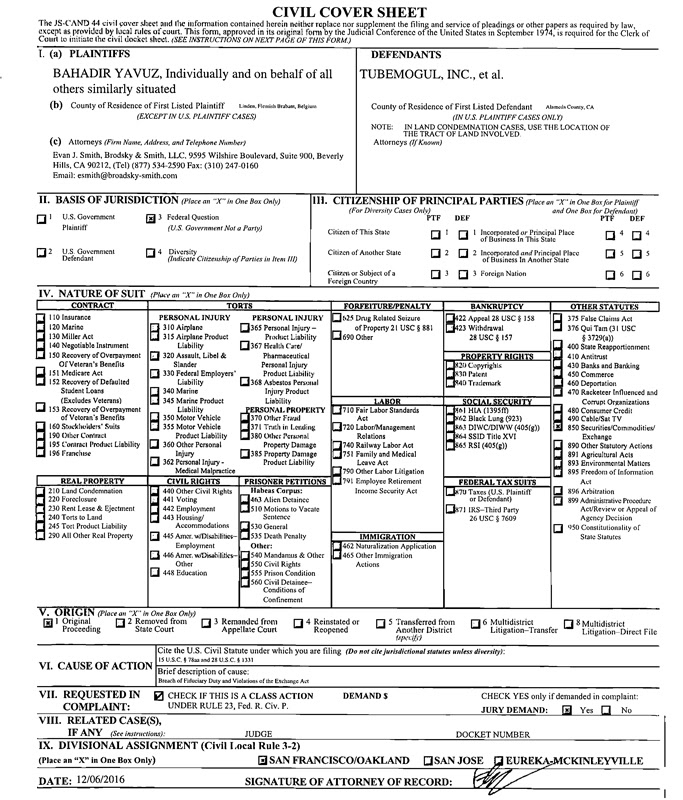

The cover sheets for legal documents contain a ton of information about people in a legal case, dates and locations for hearings, case numbers, and attorneys for each side. This information can be tedious to record by hand, and requires reading through these cover sheets and finding the information you care about. Processing servers can spend 100s of hours looking at these documents to extract the information they need to move the documents along, and can often make human error mistakes. By using a custom built software pipeline consisting of natural language processing, computer vision, and OCR Width.ai has automated this process and allows you to return the key information you’re looking for in a matter of seconds, for any format in the USA used for these cover sheets.

Width.ai has used this machine learning software to automate this process for many different legal document use cases, and has achieved 90+% accuracy for a production pipeline that returns your results in seconds.

Legal document cover sheets contain personal names, addresses, numbers, dates and times that map to different important keywords in legal cases. For instance, we have personal names that refer to plaintiffs, defendants, judges, attorneys, debtors. Not only is it enough to just pull the text off these cover sheets, but we have to assign keywords to these for entities. An added complexity is we want this software to be able to handle any legal document cover sheet from any state or federal form, and always correctly extract the correct information.

Processing servers spend a ton of money and time processing these legal documents and extracting the key information from them to process the court case documents. This software allows us to fully automate that operation, with near human error levels of accuracy. While this pipeline was built to handle these documents from a processing server, this software can easily be used by lawyers, attorneys, and anyone else looking to extract the key information from legal document cover sheets.

Issues come up when processing lots of these documents at an enterprise level, while trying to account for the generalization required to extract from any cover sheet format.

1. They can get in the way of our text we are trying to read and analyze. Stamps can cover important text like the judge's name and parts of the address.

2. Sometimes they contain the text we actually care about reading in. Lots of cover sheets stamp the case number on instead of typing it, so we can’t just remove stamps and seals altogether.

We’ve built a proprietary machine learning based software pipeline that handles all the parts of extracting the key information. We leverage open source and other proprietary models along with custom fine tuning to tweak for our domain use case. The complexity needed to extract from both form formats and long form sentences in the same document is the main reason you can’t use other document extraction software's and tune them for your domain.

After converting the pdf format document to an image that can be processed by common computer vision libraries, we pass our image through a custom built image preprocessing module that enhances the focus points of our cover sheet. This process is common in computer vision and OCR, and allows us to complete two important steps.

One of the parts of this equation that is most difficult to solve is dealing with the many different document attributes that show up in the same document. These documents are not laid out in one single popular format (invoice, check box fields, long form text, text boxes with input, receipts and many more). Most of the time they contain multiple parts of other common extractable documents and not only do we have to account for each of these, but we just generalize the entire software pipeline to account for how the data comes in from these different document formats. The difficulty with this does not end here, as not only do we account for the mix of popular formats, but with the ability to read handwriting and machine text from them. Most solutions trying to be generalized fall right onto their face when approached with this.

Text extraction needs to keep the sentence structure and text structure of the originally intended layout. When it comes time to figure out our named entities in the text, the intended layout of the sentences and forms matters to make sure we have any keywords and names in the right layout. Let’s take a look at an example to fully understand this point.

Here’s an easy example to understand text structure. Text extraction reads from left to right, and top to bottom. If we just read this text through there's a few things that cause an issue.

We built a trained and domain specific machine learning based solution to parse these cover sheets apart in a way that takes into account any format that appears in the main cover sheet, even when there are multiple. The machine learning model is trained on 100s of different formats and can recognize and extract these formats from our main document to ease the later models. This model achieves over 87% accuracy on the set of requirements assigned to it, and includes backup coverage when the model cannot detect a specified format.

Want to learn more about the proprietary model we built for document parsing? Let’s talk about intelligent document processing for your specific domain.

We’ve parsed our full legal document cover sheet in a way that keeps the intended document format and text keyword layout. Now let’s extract the text from our document.

For text extraction we built a custom OCR model that extracts both handwritten and machine generated text at a high level. We also built a custom text processing module that cleans up our collected data and optimizes the text for our domain specific use. This processing module greatly enhances our accuracy later in the pipeline, as we’ve optimized it based on patterns we found in these documents.

Our model is fully optimized for GPU use and offers parallel processing to run huge batches of document formats. Many worry that parsing documents leads to exponential runtime given the larger batch of images, but we’ve rewrote the processing of an industry standard model to handle this. Although this model is fine tuned and domain specific, we can easily retune for any domain and document type, as this model works outside of any of the other processes in the pipeline.

As we already talked about, and as you might already know, these documents have different formats embedded in them, especially in terms of sentence structure. Forms, long form sentences, check boxes and much more make up different types of word formats. This variety of data inputs makes generalization difficult in the above steps, and this is no different here. NLP models learn best when the input sentences fit a similar size and word vocabulary. Considering we have input images to extract from that originated from very different formats, the sentences vary quite a bit for an NLP model to learn from.

We ended up building a custom NLP pipeline that has the ability to handle any sentence input and extract the exact key information we’re looking for in these legal document cover sheets. The model uses a mix of domain specific part of speech based extraction components, and domain specific named entity recognition pieces. We ended up using spaCy as our base framework for our NLP pipeline, and their release of 3.0 fit nicely with our timeline. The pipeline is proprietary to give our amazing clients the absolute highest level of software in the industry, but here’s a look at the baseline spaCy pipeline.

We’ve extracted our key information we’re looking for in our legal document cover sheet, and are now looking to give it to a user. There is one step in the larger process that nobody talks about, but is the most important part of automating this process and making this entire thing useful for your business, and we can’t believe it’s overlooked.

Not only do we have to worry about if the information is actually correct, but how do we make sure the names, people, addresses, numbers that we’re looking for are who we think they are? If a name shows up twice in a document, say “John Williams”, and one is labeled as the plaintiff and one is labeled as an attorney, how do we know which is correct? If a case number is 2019CV000820 in a text box, but 106279428 is said to be a case number as well from the header, which is right? This part of NLP actually gets passed over often, and written off as just how the model works.

Using metrics like confidence intervals, beam search keyword mapping, and surrounding keyword information we built a custom algorithm that makes decisions to remove false positives and ensure the named entities the model returns are what they are labeled as. This is a surprisingly difficult task, as it requires a deep understanding of the domain specific information that makes up the named entities, past just the keywords themselves. This module can be built to adjust to changes in the data automatically with reinforcement learning.

With our software pipeline built, we can deploy an API implementation to allow users to automatically run documents through this intense machine learning based pipeline. One of the best parts is that the entire pipeline runs on average in 3.5 seconds, with times as fast as 2.7 seconds for easier documents, on just a single GPU. This is a huge runtime upgrade over other implementations, as we achieved this we detailed gpu processing and a custom built gpu implementation for the OCR. Runtimes of this speed lets us take what would be considered a pipeline too large for smaller commercial use and offer it to anyone regardless of domain. Once the API is deployed this pipeline can be added to any parts of a business process workflow including backend database systems to real time user use.

Each software pipeline supports connecting our training modules to them to connect new training data to them. This allows us to easily move the pipeline to another domain with similar inputs. With our focus on the pipeline's ability to handle many different document types we can quickly handle invoices, receipts, forms, and other legal documents.

For industries looking to build out a pipeline with a little more power and customization, our models in this pipeline can be adjusted and customized and plugged back into the pipeline. By adjusting the rules in the NER model, we can easily pick up quick accuracy for any document feed into the pipeline, with a simple understanding of the document layout.

Interested in learning more about how you can deploy our custom machine learning pipeline for your legal documents or automating other document processing workflows? Let’s talk about how we can automate your processes ->Width.ai